Data Center Networking

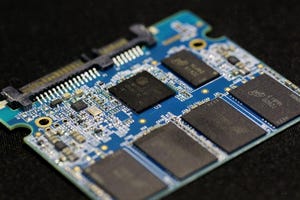

Data center networking is the infrastructure and set of technologies that interconnect servers, storage systems, and network devices within a data center facility.

Options like nickel-zinc batteries could allow data centers to operate over a wider temperature range while also offering higher power density.

Network Management

The Sweeping AI Trends Defining the Future Data Center

It's clear generative AI will test the limits of data center design. While most of the attention in IT infrastructure falls on advanced processors, data center planners should also consider new cooling methods, such as liquid cooling, and options like nickel-zinc batteries that deliver higher power densities.
