In Pursuit of 1.6T Data Center Network Speeds

Today’s 400G data centers are not fast enough for many emerging applications. The networking industry is looking toward 1.6T network speeds.

For the last 30 years, modern society has depended on data center networks. Networking speeds must continue to increase to keep up with the demand created by emerging technologies such as autonomous vehicles (AVs) and artificial intelligence (AI). Further innovation in high-speed data transmission will enable 800 gigabits per second (800G) and 1.6 terabits per second (1.6T) network speeds.

A recent trend is the offloading of data processing to external servers. Hyperscale data centers have popped up globally to support cloud computing networks. Smaller data centers for edge computing have also become more common for time-sensitive applications. Data centers have become “intelligence factories,” providing powerful computing resources for high-demand applications.

Edge computing is compelling for its impact on AVs. Instead of hosting a supercomputer in the car to process sensor data, AVs use smaller servers on the “edge,” dramatically reducing latency. Cloud computing applications range from controlling factory robots to hosting thousands of VR users in the metaverse. Today’s 400G data centers are not yet fast enough for many emerging applications, so the networking industry is already looking toward 1.6T.

Data Center Network Speeds

Data center network speeds are enabled by physical layer transceivers, which follow standards from the Institute of Electrical and Electronics Engineers (IEEE) and the Optical Internetworking Forum (OIF). Both organizations define the interoperability requirements for each interface in the data center. As of this writing, the most current standards are IEEE 802.3ck (Ethernet interfaces built on 100 Gb/s lanes) and OIF CEI-112G (112 Gb/s electrical interfaces).

Innovations and Data Center Challenges for 800G/1.6T

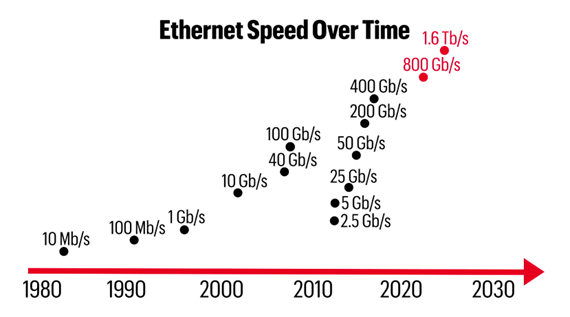

Over the last few decades, Ethernet speeds have grown dramatically, reaching 400 Gb/s over four PAM4 lanes running at 56 gigabaud (GBd). The industry expects to double that speed twice over the next few years, first to 800G and then to 1.6T.
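The relationship between symbol rate, modulation, and lane rate is simple arithmetic: PAM4 uses four amplitude levels, so each symbol carries log2(4) = 2 bits. The sketch below uses the nominal figures from the text; the helper name `lane_rate_gbps` is hypothetical. The raw total lands above 400 Gb/s because part of the line rate carries overhead such as FEC parity, and the exact signaling rates in the standards differ slightly from the rounded 56 GBd figure.

```python
import math

def lane_rate_gbps(baud_gbd: float, pam_levels: int) -> float:
    """Raw line rate of one serial lane: symbol rate x bits carried per symbol."""
    bits_per_symbol = math.log2(pam_levels)  # PAM4 -> 2 bits, NRZ (PAM2) -> 1 bit
    return baud_gbd * bits_per_symbol

# Nominal 400G Ethernet figures from the text: four PAM4 lanes at 56 GBd.
per_lane = lane_rate_gbps(56, 4)   # 112.0 Gb/s raw per lane
aggregate = 4 * per_lane           # 448.0 Gb/s raw across four lanes
print(per_lane, aggregate)
```

If PAM4 were kept for the next generation (an assumption; other modulation formats have been studied), a 224 Gb/s lane would need roughly 112 GBd, since `lane_rate_gbps(112, 4)` gives 224.0.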


Ethernet speeds over time, starting with the first IEEE 802.3 standard.

The first generation of 800G will likely consist of eight 112 Gb/s lanes with a total aggregate data rate of 800 Gb/s. However, doubling the data rate per lane from 112 Gb/s to 224 Gb/s is more efficient, because it halves the number of lanes needed for the same aggregate rate. The IEEE and OIF will weigh the tradeoffs of each implementation when defining the 800G and 1.6T standards.
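A quick way to see the lane-count tradeoff is to divide each port rate by the payload one lane carries. This sketch assumes, consistent with the text's "eight 112 Gb/s lanes" for 800 Gb/s, that a 112 Gb/s lane carries 100 Gb/s of payload and a 224 Gb/s lane carries 200 Gb/s; the helper `lanes_needed` and the `LANE_PAYLOAD` table are hypothetical.

```python
import math

def lanes_needed(port_gbps: int, lane_payload_gbps: int) -> int:
    """Number of lanes needed to carry one port's payload."""
    return math.ceil(port_gbps / lane_payload_gbps)

# Assumed payload per lane class (raw rate minus overhead).
LANE_PAYLOAD = {"112G": 100, "224G": 200}

for port in (800, 1600):
    for name, payload in LANE_PAYLOAD.items():
        print(f"{port}G port over {name} lanes: {lanes_needed(port, payload)} lanes")
```

An 800G port needs eight 112G lanes or four 224G lanes, and a 1.6T port needs sixteen or eight. Every lane removed is one less SerDes, channel, and connection to power and route.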

Below are several challenges and potential solutions for achieving 224 Gb/s lane rates:

Switch Silicon

Networking switch chips enable low-latency switching between elements in the data center. From 2010 to 2022, switch silicon bandwidth rose from 640 Gb/s to 51.2 Tb/s, driven by successive improvements in complementary metal-oxide-semiconductor (CMOS) process technology. The next generation of switch silicon will double the bandwidth once again, to 102.4 Tb/s. These switch chips will support 800G and 1.6T over 224 Gb/s lanes.
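The bandwidth figures above translate directly into port counts and a growth rate. The sketch below uses only numbers from the text. The function names are hypothetical, and the doubling-period estimate assumes smooth exponential growth, which the real generation-by-generation steps only approximate.

```python
import math

def ports(switch_gbps: int, port_gbps: int) -> int:
    """Number of full-rate ports a switch chip's aggregate bandwidth can serve."""
    return switch_gbps // port_gbps

def doubling_period_years(start_gbps: float, end_gbps: float, years: float) -> float:
    """Average years per bandwidth doubling, assuming smooth exponential growth."""
    return years / math.log2(end_gbps / start_gbps)

print(ports(51_200, 800))     # 64: sixty-four 800G ports on a 51.2 Tb/s chip
print(ports(102_400, 800))    # 128 ports of 800G on a 102.4 Tb/s chip
print(ports(102_400, 1_600))  # or 64 ports of 1.6T
# 640 Gb/s (2010) -> 51.2 Tb/s (2022): an 80x increase in 12 years
print(round(doubling_period_years(640, 51_200, 2022 - 2010), 1))  # about 1.9
```

Integer Gb/s inputs are used so the port division stays exact rather than depending on floating-point rounding of values like 51.2.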

Forward Error Correction

Forward error correction (FEC) means transmitting redundant data so that a receiver can reconstruct a signal that arrives with corrupted bits. FEC algorithms recover data well from scattered random errors but struggle with burst errors, in which many consecutive bits are corrupted at once. Each FEC architecture trades off coding gain, overhead, latency, and power efficiency. In 224 Gb/s systems, more complex FEC algorithms will be necessary to cope with burst errors.
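Why bursts hurt can be shown with a symbol-based Reed-Solomon code, which corrects a fixed number of symbol errors per codeword. This sketch assumes RS(544, 514) with 10-bit symbols, the code used by current 400G Ethernet (often called KP4 FEC), and is not a prediction of what the 224 Gb/s standards will specify. It also shows symbol interleaving across codewords, one common way to spread a burst; the helper names are hypothetical, and the model ignores random errors that may hit the same codewords.

```python
import math

def rs_correctable_symbols(n: int, k: int) -> int:
    """An RS(n, k) code corrects up to (n - k) // 2 symbol errors per codeword."""
    return (n - k) // 2

def worst_case_symbols_hit(burst_bits: int, symbol_bits: int) -> int:
    """Most symbols a run of consecutive bit errors (>= 1 bit) can touch,
    allowing the burst to straddle symbol boundaries."""
    return math.ceil((burst_bits - 1) / symbol_bits) + 1

def burst_correctable(burst_bits: int, n: int, k: int, symbol_bits: int,
                      interleave: int = 1) -> bool:
    """True if a single burst stays within every codeword's correction budget.

    With `interleave` codewords interleaved symbol by symbol, consecutive
    symbols are dealt round-robin, so each codeword sees at most
    ceil(hit / interleave) of the corrupted symbols.
    """
    hit = worst_case_symbols_hit(burst_bits, symbol_bits)
    per_codeword = math.ceil(hit / interleave)
    return per_codeword <= rs_correctable_symbols(n, k)

# Assumed code: RS(544, 514) over 10-bit symbols.
N, K, M = 544, 514, 10
print(rs_correctable_symbols(N, K))                    # 15 symbols per codeword
print(f"{(N - K) / K:.1%} parity overhead")            # 5.8% parity overhead
print(burst_correctable(200, N, K, M))                 # 21 symbols hit: False
print(burst_correctable(200, N, K, M, interleave=2))   # at most 11 per codeword: True
```

A 200-bit burst overwhelms a single codeword's 15-symbol budget, while the same burst split across two interleaved codewords stays correctable. Stronger codes or deeper interleaving buy more margin at the cost of overhead, latency, and power.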

Optical Modules and Power Efficiency

The most difficult challenge facing data centers is power consumption. Optical module power consumption has increased with each generation. As optical module designs mature, they become more efficient, so power consumption per bit is decreasing. However, with an average of 50,000 optical modules in each data center, the modules’ combined power draw remains a concern. Co-packaged optics can reduce power consumption per module by moving the optical-electrical conversion into the same package as the switch silicon, but questions remain about cooling requirements.
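Falling energy per bit and rising power per module are consistent because module power is data rate times energy per bit. The sketch below makes that concrete. The pJ/bit inputs are HYPOTHETICAL placeholders chosen only for illustration, not measured or vendor figures; only the 50,000-module count comes from the text.

```python
def module_power_w(rate_gbps: float, energy_pj_per_bit: float) -> float:
    """Module power in watts: (Gb/s) x (pJ/bit) = 1e9 x 1e-12 W = 1e-3 W."""
    return rate_gbps * energy_pj_per_bit / 1000

def fleet_power_kw(modules: int, watts_each: float) -> float:
    """Aggregate optics power for a facility, in kilowatts."""
    return modules * watts_each / 1000

# HYPOTHETICAL efficiency figures, for illustration only.
older = module_power_w(400, 25.0)   # 10.0 W at 25 pJ/bit
newer = module_power_w(800, 20.0)   # 16.0 W at 20 pJ/bit: better per bit, worse per module
print(older, newer)
print(fleet_power_kw(50_000, newer))  # 800.0 kW across 50,000 modules
```

Even with a 20% per-bit improvement in this made-up example, doubling the rate raises each module's draw, and the facility-wide total scales with the module count.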

Countdown to 1.6T Data Center Network Speeds

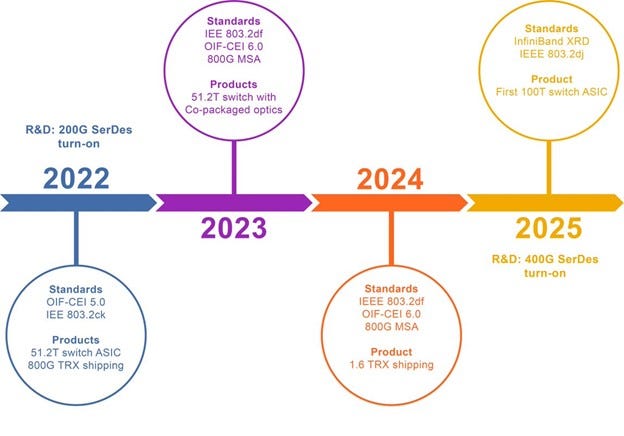

800G is coming, building on the groundwork the IEEE and OIF laid with 400G. The first 51.2T switch silicon was released in 2022, enabling sixty-four 800 Gb/s ports. Validation of the first 800G transceivers also began that year.

This year, the standards organizations will release the first versions of the IEEE 802.3df and OIF 224 Gb/s standards, giving developers a better indication of how to build 800G and 1.6T systems using 112 Gb/s and 224 Gb/s lanes. Within the next two years, expect the standards organizations to finalize the physical layer standards, with full-scale development and validation beginning shortly after.


Projected timeline for 800G and 1.6T developments.

Operators are currently upgrading data centers to 400G networks, but these upgrades only buy time until the next inevitable speed increase. Data centers will always need more efficient and scalable data technologies to support data-heavy emerging technologies.

Ben Miller is Product Marketing Manager at Keysight Technologies.
