Data Center Networking

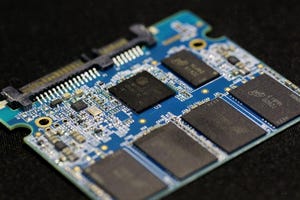

Data center networking is the infrastructure and technology used to interconnect the servers, storage systems, and network equipment within a data center facility.

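Integrating Dynamic Host Configuration Protocol (DHCP) options with Zero-Touch Provisioning (ZTP) is a significant advance for network management. At first boot, a newly connected device can learn both its IP address and where to fetch its configuration from the DHCP server, with no manual per-device setup.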
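To make the mechanism concrete, here is a minimal sketch of how a DHCP server could hand a ZTP-capable device the location of its provisioning file. It assumes the device honors the standard RFC 2132 options 66 (TFTP server name) and 67 (bootfile name). Vendors may instead use other options, such as option 43 (vendor-specific information). The server address `192.0.2.10` and the file name `ztp/pdu-config.cfg` are hypothetical placeholders. The sketch builds only the options fragment, not the full DHCP packet (BOOTP header and magic cookie are omitted).

```python
"""Sketch: encode and decode DHCP options a ZTP device might use to locate
its provisioning file. Assumes RFC 2132 option numbers 66 (TFTP server name)
and 67 (bootfile name); addresses and file names are hypothetical."""

PAD, END = 0, 255
TFTP_SERVER_NAME, BOOTFILE_NAME = 66, 67


def encode_option(code: int, value: bytes) -> bytes:
    """Encode one option as code (1 byte), length (1 byte), then the value."""
    if not 0 < code < 255:
        raise ValueError("option code must be 1..254 (0 is Pad, 255 is End)")
    if len(value) > 255:
        raise ValueError("option value longer than 255 bytes")
    return bytes([code, len(value)]) + value


def ztp_options(tftp_server: str, bootfile: str) -> bytes:
    """Build an options fragment: option 66, option 67, then the End marker."""
    return (
        encode_option(TFTP_SERVER_NAME, tftp_server.encode("ascii"))
        + encode_option(BOOTFILE_NAME, bootfile.encode("ascii"))
        + bytes([END])
    )


def decode_options(data: bytes) -> dict[int, bytes]:
    """Parse code/length/value options into a dict, stopping at End."""
    options: dict[int, bytes] = {}
    i = 0
    while i < len(data):
        code = data[i]
        if code == PAD:  # Pad is a single byte with no length field
            i += 1
            continue
        if code == END:  # End marks the last option
            break
        length = data[i + 1]
        options[code] = data[i + 2 : i + 2 + length]
        i += 2 + length
    return options


if __name__ == "__main__":
    # Hypothetical provisioning server and file, for illustration only.
    raw = ztp_options("192.0.2.10", "ztp/pdu-config.cfg")
    print(decode_options(raw))
```

On the device side, a ZTP agent would read options 66 and 67 from the DHCP reply, download the named file from that server, and apply it. The decoder skips Pad bytes and stops at End, mirroring the option layout defined in RFC 2132.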

Network Management

Zero-Touch Provisioning for Power Management Deployments

How to integrate the Dynamic Host Configuration Protocol (DHCP) with device network interfaces for greater automation and efficiency.
