Data Center Networking

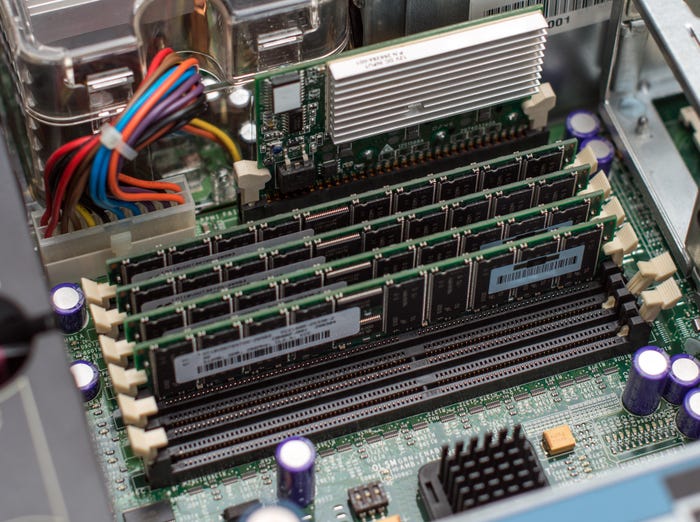

Data center networking is the infrastructure and set of technologies used to interconnect the servers, storage systems, and network devices within a data center facility.

Enterprises investing in new infrastructure to support AI will have to choose between InfiniBand and Ethernet.


The Impact of AI on the Ethernet Switch Market

Enterprises investing in new infrastructure to support AI will have to decide which technology best fits their particular needs. InfiniBand and Ethernet will likely continue to coexist for the foreseeable future; however, Ethernet is highly likely to remain dominant in most network environments.
