Preparing the Network for the Surge in AI Workloads

Now is the time to engage in network planning exercises to address the issues AI workloads and new traffic patterns will have on existing network infrastructures.

September 27, 2024

Network newbies, aficionados, and professionals! Lend me your ears. There are things afoot that will have a profound impact on your stomping ground, the network. There is a ton of focus on generative AI and the applications being built to leverage it. Studies abound about what those applications look like, what frameworks they're using, and what services they rely on. I have some of that data myself.

But what isn’t being talked about (enough) is the impact these applications are going to have on the backbone of business. You know, the network. It wasn’t surprising to learn that only 50% of respondents to a network-oriented survey said network planning was something their company engaged in.

That’s problematic because there are things afoot in business that indicate the data center networks you operate and oversee daily are about to be inundated with new workloads, and those workloads will have major implications for the network.

AI Workloads Coming Home

Yes, that's what all that talk about repatriation really means. If workloads are coming home, they need a place to stay and hallways to roam around in the middle of the night. Our own research indicates a significant rise (from 13% in 2021 to 50% in 2024) in organizations that have repatriated or plan to repatriate workloads.

And here's the thing: If the Barclays CIO Survey from the first half of 2024 is correct, the workloads coming home are all about data. Storage and database workloads were the top workloads moving from the public cloud to a data center near you. Because AI relies on that data, the return of data-related workloads to the data center is indicative of future AI workloads landing in the data center, too.

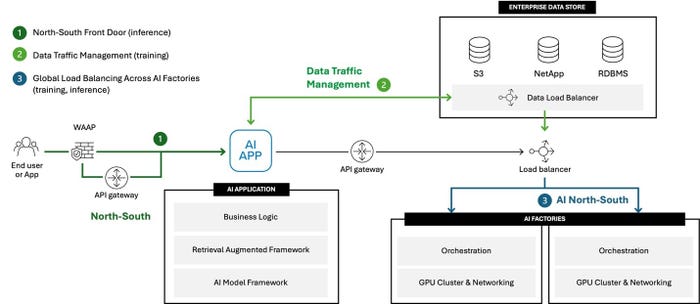

Given the current leading AI application patterns, significant changes to the network in terms of throughput and traffic patterns are needed. That is because these patterns add new 'tiers' to the application architecture, which expands to incorporate both data sources and inferencing services, and because they bring an influx of new traffic.

High-Level View of AI-Supporting Architecture

Which Networking Tech is Best for AI Workloads?

The thing is that some protocols, like InfiniBand, have long been the purview of storage professionals. But InfiniBand is one of the darling protocols of AI factories because most AI traffic is big chunks of data that, honestly, can easily overwhelm Ethernet.

So we’ve got a network that’s now incorporating additional protocols with new paths that reach deeper into the data center. There's more traffic and a greater need to control it for various reasons (like compliance and privacy and stuff).

That whole network planning exercise? Absolutely necessary now. And one of the tasks associated with that exercise should be the identification of strategic control points in that network.

Strategic control points are those locations within the network architecture where services can be inserted to control traffic for purposes of scale and security. And just as important as identifying those control points is recognizing that traffic flows are going to be bi-directional. Plenty of AI applications rely on inferencing services that call out to other services, which may reside inside the data center but can also sit outside it.
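One way to start looking for candidate control points is to list the paths that known flows take and count which hops they share. The sketch below is a toy illustration only: the node names, the flows, and the `candidate_control_points` and `directions_through` helpers are all hypothetical, and a real planning exercise would work from actual topology and flow data.

```python
from collections import Counter

# Hypothetical flows: (direction, ordered list of hops from source to destination).
FLOWS = [
    ("ingress", ["internet", "edge-fw", "core-lb", "app"]),
    ("east-west", ["app", "core-lb", "inference"]),
    ("east-west", ["inference", "storage-gw", "vector-db"]),
    ("egress", ["inference", "core-lb", "edge-fw", "external-api"]),
]

def candidate_control_points(flows, min_share=0.5):
    """Return interior hops that sit on at least min_share of all flows."""
    counts = Counter()
    for _direction, path in flows:
        # Skip the endpoints: they are sources and destinations,
        # not places where a service can be inserted in the path.
        for hop in set(path[1:-1]):
            counts[hop] += 1
    threshold = min_share * len(flows)
    return sorted(hop for hop, n in counts.items() if n >= threshold)

def directions_through(flows, hop):
    """Return the traffic directions that traverse a given interior hop."""
    return sorted({d for d, path in flows if hop in path[1:-1]})

points = candidate_control_points(FLOWS)
print(points)
for hop in points:
    print(hop, directions_through(FLOWS, hop))
```

In this made-up topology, the hop labeled `edge-fw` carries both ingress and egress flows, which is the bi-directional case described above.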

That’s a marked shift in architecture that has traditionally assumed inbound (ingress) traffic control is the norm. Some of those control points will require a service capable of managing and securing outbound traffic. And not the ‘URL filtering/web content’ kind of capabilities. This is more along the lines of API security turned inside-out.
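To make "API security turned inside-out" a little more concrete, here is a minimal sketch of an outbound policy check, the kind of decision an egress control point might make before an inferencing service is allowed to call an external API. The hostnames, the `EGRESS_POLICY` table, and the `allow_outbound` function are assumptions made up for illustration, not any product's actual behavior.

```python
from urllib.parse import urlsplit

# Hypothetical policy: which hosts an inference service may call,
# and which HTTP methods are permitted for each.
EGRESS_POLICY = {
    "api.example-llm.test": {"POST"},
    "vectors.internal.example": {"GET", "POST"},
}

def allow_outbound(method, url, policy=EGRESS_POLICY):
    """Return True only if the outbound call matches the egress policy."""
    parts = urlsplit(url)
    # Assumption for this sketch: outbound calls must be encrypted.
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower()
    allowed_methods = policy.get(host)
    # Default deny: unknown destinations are rejected.
    return allowed_methods is not None and method.upper() in allowed_methods

print(allow_outbound("POST", "https://api.example-llm.test/v1/generate"))
print(allow_outbound("POST", "https://unknown.example/upload"))
```

The point of the sketch is the default-deny posture per destination and method, as opposed to category-based URL filtering.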

A Final Word on Networking and AI Workloads

AI is disruptive in every sense of the word. It’s changing industries, it’s changing jobs, it’s changing expectations, and it’s changing the network. When we talk about digital transformation and the need to modernize enterprise architecture, we call out the importance of its most foundational component: infrastructure. That means the network.

It’s time to engage in some network planning exercises before the network is overwhelmed with workloads and new traffic requirements that existing infrastructure can't handle.
