Why Large Data Centers Need Overlay Networks

If your data center requires massive scale, then overlay networking makes the most sense, according to this chapter excerpt.

August 21, 2014

For large enterprises, the network focus of late has been on the data center. New networking technologies are evolving to address the huge demands of cloud computing, mobility, and big data initiatives. If your organization is using the TRILL or FabricPath protocols, you'll want to read on.

This chapter excerpt, Need for Overlays in Massive-Scale Data Centers, from the book Using TRILL, FabricPath, and VXLAN by Sanjay K. Hooda, Shyam Kapadia, and Padmanabhan Krishnan, lists the changing requirements of data centers and the architectures considered to meet them. It describes how data centers have evolved technologically, their major requirements, and popular architectures, and it shows how overlays meet these changing needs.

Data Center Architectures

Today, data centers are composed of physical servers arranged in racks, with multiple racks forming a row. A data center may contain tens to thousands of rows and therefore up to several hundred thousand physical servers. The data center network is traditionally organized into three layers:

Core layer: The high-speed backplane that also serves as the data center edge, through which traffic enters and leaves the data center.

Aggregation layer: Typically provides routing functionality along with services such as firewall, load balancing, and so on.

Access layer: The lowest layer where the servers are physically attached to the switches. Access layers are typically deployed using either an end-of-row (EoR) model or top-of-rack (ToR) model.

Typically the access layer is Layer 2 only: servers in the same subnet communicate via bridging, and servers in different subnets communicate via routing through Integrated Routing and Bridging (IRB) interfaces at the aggregation layer. However, Layer 3 ToR designs are becoming more popular because Layer 2 bridging (switching) inherently suffers from the ill effects of flooding and broadcasting: an unknown unicast or broadcast packet is sent to every host within the subnet, VLAN, or broadcast domain.
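
The bridge-or-route decision described above can be sketched in a few lines of Python. This is a minimal illustration of the logic, not how a switch implements it in hardware; the function name `forwarding_mode` is hypothetical, and it assumes both hosts use the same prefix length.

```python
import ipaddress


def forwarding_mode(src_ip: str, dst_ip: str, prefix_len: int) -> str:
    """Return "bridge" for same-subnet traffic and "route" for inter-subnet traffic."""
    src_net = ipaddress.ip_interface(f"{src_ip}/{prefix_len}").network
    dst_net = ipaddress.ip_interface(f"{dst_ip}/{prefix_len}").network
    if src_net == dst_net:
        return "bridge"  # same subnet/VLAN: Layer 2 switching at the access layer
    return "route"       # different subnets: routed through an IRB interface at aggregation


print(forwarding_mode("10.1.1.10", "10.1.1.20", 24))  # bridge
print(forwarding_mode("10.1.1.10", "10.1.2.20", 24))  # route
```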

As the number of hosts within a broadcast domain grows, the negative effects of flooding caused by unknown unicasts, broadcasts (such as ARP and DHCP requests), and multicasts (such as IPv6 Neighbor Discovery messages) become more pronounced. Various studies have indicated that this is detrimental to network operation and that limiting the scope of broadcast domains is extremely important. This has paved the way for Layer 3 ToR-based architectures.
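
A rough back-of-the-envelope model shows why flooding gets worse as a broadcast domain grows. It assumes, hypothetically, that every host sends broadcasts at the same average rate and that each broadcast is delivered to every other host in the domain; under those assumptions the total number of frame deliveries grows roughly with the square of the host count.

```python
def broadcasts_received_per_host(hosts: int, rate_per_host: float) -> float:
    """Broadcast frames each host receives per second from all the other hosts."""
    return (hosts - 1) * rate_per_host


def total_flood_deliveries(hosts: int, rate_per_host: float) -> float:
    """Frame deliveries per second across the whole broadcast domain.

    Every broadcast sent by one host is flooded to the other (hosts - 1) hosts,
    so the total grows roughly as hosts * (hosts - 1).
    """
    return hosts * broadcasts_received_per_host(hosts, rate_per_host)


# Hypothetical rate: one ARP/DHCP-style broadcast per host per second.
for n in (100, 1000):
    print(n, broadcasts_received_per_host(n, 1.0), total_flood_deliveries(n, 1.0))
```

Under this simplified model, growing a domain from 100 to 1,000 hosts multiplies total flood deliveries by roughly 100, while each individual host receives about 10 times as many broadcasts.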

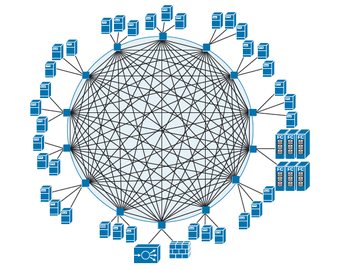

Figure 1: Single-fabric data center network architecture

Terminating Layer 3 at the ToR reduces the size of the broadcast domains, but at the cost of shrinking the mobility domain across which virtual machines (VMs) can be moved, which was one of the primary advantages of having Layer 2 at the access layer. In addition, terminating Layer 3 at the ToR can result in suboptimal routing, because inter-subnet traffic may be hair-pinned (tromboned) across multiple hops of the data center fabric.

Ideally, the desired architecture combines the benefits of Layer 2 and Layer 3 so that (a) broadcast domains and flooding are reduced; (b) the flexibility to move any VM to any access layer switch is retained; (c) both intra-subnet and inter-subnet traffic is still forwarded optimally (in a single hop) by the data center fabric [21]; and (d) all data center network links are efficiently utilized for data traffic. Traditional spanning tree-based Layer 2 architectures, which block redundant links, have given way to Layer 2 multipath (L2MP) designs in which all available links are used to forward traffic.
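
A common way to use multiple equal-cost paths is per-flow hashing: header fields of each packet are hashed to pick one of the available links, so all packets of a flow stay on one path (preserving their order) while different flows spread across all links. The sketch below assumes a 5-tuple flow key and uses SHA-256 purely for illustration; real switches use their own hardware hash functions, and `pick_link` is a hypothetical name.

```python
import hashlib
from collections import Counter


def pick_link(flow: tuple, num_links: int) -> int:
    """Map a flow to one of num_links equal-cost links by hashing its header fields.

    The same flow always hashes to the same link, so its packets stay in order.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % num_links


# Hypothetical flows: (src_ip, dst_ip, protocol, src_port, dst_port)
flows = [("10.0.0.1", "10.0.1.1", 6, 40000 + i, 80) for i in range(1000)]
usage = Counter(pick_link(flow, num_links=4) for flow in flows)
print(sorted(usage.items()))
```

With many flows, each of the four links carries a roughly equal share; a single large flow, however, cannot be split across links under this scheme.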

To meet the changing requirements of the evolving data center, numerous designs have been proposed. These designs primarily strive to meet the requirements of scalability, mobility, ease of operation, and manageability, and to increase the utilization of network switches and links by reducing the number of tiers in the architecture. Over the last few years, a few data center topologies have gained popularity. The following sections briefly highlight some common architectures.

CLOS

CLOS-based architectures have been extremely popular since the advent of high-speed network switches. A multitier CLOS topology follows a simple rule: switches at tier x connect only to switches at tiers x-1 and x+1, never to other switches at the same tier. This kind of topology provides a large degree of redundancy and therefore offers good resiliency, fault tolerance, and traffic load sharing. Specifically, the large number of redundant paths between any pair of switches enables efficient utilization of network resources.
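
The CLOS adjacency rule and the path diversity it produces can be checked with a small model. The sketch below assumes a two-tier leaf-spine network (leaves at tier 1, spines at tier 2, every leaf linked to every spine), and the switch names are hypothetical. It verifies that every link joins adjacent tiers and counts the distinct two-hop paths between a pair of leaves, which equals the number of spines.

```python
def obeys_clos_rule(tier: dict[str, int], links: list[tuple[str, str]]) -> bool:
    """True if every link joins switches in adjacent tiers (x and x +/- 1)."""
    return all(abs(tier[a] - tier[b]) == 1 for a, b in links)


def leaf_spine(num_leaves: int, num_spines: int):
    """Build a two-tier leaf-spine fabric in which every leaf links to every spine."""
    tier = {f"leaf{i}": 1 for i in range(num_leaves)}
    tier.update({f"spine{j}": 2 for j in range(num_spines)})
    links = [(f"leaf{i}", f"spine{j}") for i in range(num_leaves) for j in range(num_spines)]
    return tier, links


def two_hop_paths(links: list[tuple[str, str]], a: str, b: str) -> int:
    """Count paths a -> middle switch -> b (one per shared neighbor)."""
    neighbors: dict[str, set[str]] = {}
    for x, y in links:
        neighbors.setdefault(x, set()).add(y)
        neighbors.setdefault(y, set()).add(x)
    return len(neighbors.get(a, set()) & neighbors.get(b, set()))


tier, links = leaf_spine(num_leaves=8, num_spines=4)
print(obeys_clos_rule(tier, links))                         # True
print(two_hop_paths(links, "leaf0", "leaf5"))               # 4: one path per spine
print(obeys_clos_rule(tier, links + [("leaf0", "leaf1")]))  # False: same-tier link
```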

Fat-tree

A special instance of a CLOS topology is called a fat-tree, which can be employed to build a scale-out network architecture by interconnecting commodity Ethernet switches. A big advantage of the fat-tree architecture is that all the switching elements are identical, which gives it cost advantages over other architectures with similar port density.
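
To make the "identical switches" point concrete, the sketch below computes the size of a fat-tree built entirely from k-port switches. It assumes the widely used k-ary fat-tree construction (Al-Fares et al., 2008), which is not described in this excerpt: k pods, each with k/2 edge and k/2 aggregation switches, plus (k/2)^2 core switches. The function name is hypothetical.

```python
def fat_tree_size(k: int) -> dict[str, int]:
    """Switch and host counts for a k-ary fat-tree built from identical k-port switches."""
    if k <= 0 or k % 2 != 0:
        raise ValueError("k must be a positive, even port count")
    half = k // 2
    edge = k * half         # k pods, each with k/2 edge switches
    aggregation = k * half  # k pods, each with k/2 aggregation switches
    core = half * half      # (k/2)^2 core switches
    hosts = edge * half     # each edge switch uses k/2 ports for hosts
    return {
        "edge": edge,
        "aggregation": aggregation,
        "core": core,
        "switches": edge + aggregation + core,  # 5k^2/4
        "hosts": hosts,                         # k^3/4
    }


for k in (4, 48):
    print(k, fat_tree_size(k))
```

With 48-port switches, for example, this model gives 2,880 identical switches supporting 27,648 hosts.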

Single-fabric

Another popular data center architecture connects ToR switches through a single giant fabric, as productized by Juniper in its QFabric offering. In this simple architecture, only the external access layer switches are visible to the data center operator; the fabric itself is completely abstracted away, which enables a huge reduction in the amount of cabling required.

Need for Overlays

The previous sections introduced the “new” data center, its evolving requirements, and the different architectures considered to meet them. The data center fabric is expected to evolve further, and its design should be flexible enough to be combined in different ways to meet currently unforeseen business needs. Adaptability at high scale and rapid on-demand provisioning are prime requirements, and the network should not be the bottleneck.

Overlay-based architectures [25] provide a level of indirection that keeps switch table sizes from growing in proportion to the number of end hosts supported by the data center. This applies to switches at both the access and aggregation tiers. Consequently, among other benefits, overlays are ideal for addressing the high-scale requirements of massive-scale data centers.
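
The effect of this indirection can be illustrated by counting forwarding entries. The sketch below is a simplified model under stated assumptions: each VM sits behind a tunnel endpoint on its server (for example, a hypervisor virtual switch acting as a VXLAN tunnel endpoint), fabric switches in an overlay forward only on the outer endpoint addresses, and the sizing of 2,000 servers with 20 VMs each is hypothetical. The class and function names are illustrative, not an API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualMachine:
    mac: str       # inner (tenant) MAC address
    endpoint: str  # hypothetical tunnel endpoint, e.g. the hypervisor's virtual switch


def fabric_entries_flat_l2(vms: list[VirtualMachine]) -> int:
    """Flat Layer 2: fabric switches may need one forwarding entry per VM MAC."""
    return len({vm.mac for vm in vms})


def fabric_entries_overlay(vms: list[VirtualMachine]) -> int:
    """Overlay: fabric switches forward on outer headers, one entry per endpoint."""
    return len({vm.endpoint for vm in vms})


def overlay_edge_map(vms: list[VirtualMachine]) -> dict[str, str]:
    """Overlay edge: maps each inner MAC to the endpoint it sits behind."""
    return {vm.mac: vm.endpoint for vm in vms}


# Hypothetical sizing: 2,000 servers, each hosting 20 VMs.
vms = [
    VirtualMachine(mac=f"02:00:00:{s >> 8:02x}:{s & 0xff:02x}:{v:02x}", endpoint=f"tep-{s}")
    for s in range(2000)
    for v in range(20)
]
print(fabric_entries_flat_l2(vms), fabric_entries_overlay(vms))  # 40000 2000
```

In this model the fabric switches hold 2,000 endpoint entries instead of 40,000 VM MAC addresses; the per-VM state moves to the overlay edge map, which VXLAN deployments populate either by flood-and-learn or through a control plane.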

>> For more on overlays, read the full chapter here.

>> You can buy the book at the Cisco Press store. Network Computing members using the code NWC2014 receive 35% off through December 31, 2014.
