VMware vSphere Storage Types

Learn about vSphere storage fundamentals in this excerpt from "Mastering VMware vSphere 6.5."

July 26, 2018

VMware vSphere supports different types of storage architectures: internal storage (where the controller is crucial and must be on the hardware compatibility list, or HCL) and external storage with shared SAS DAS, SAN FC, SAN iSCSI, SAN FCoE, or NFS NAS (where the HCL is fundamental for the external storage, the fabric elements, and the host adapters).

For local storage, with vSphere 6.x it's possible to use USB disks not only as boot disks but also to run VMs. Note, however, that USB datastores are not supported by VMware.

Storage types at the VM logical level

There are different types of virtual disks depending on the provisioning method, pre-allocated or dynamic. The types of virtual disks have remained mostly the same since vSphere 4.0:

Eager zeroed thick VMDK: An eager zeroed thick disk has all space allocated and wiped clean of any previous content on the physical media at creation time. Such disks may take longer to create than other disk formats. The entire disk space is reserved and unavailable for use by other VMs.

Thick or lazy zeroed thick VMDK: A thick disk has all space allocated at creation time. This space may contain stale data on the physical media. Before the first write to a new block, the block has to be zeroed, which adds I/O operations on new blocks compared to eager zeroed disks. The entire disk space is reserved and unavailable for use by other VMs.

Thin VMDK: Space required for the thin-provisioned virtual disk is allocated and zeroed on demand as space is used. Unused space is available for use by other VMs.
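The space accounting behind these three formats can be sketched in a few lines of Python. This is only an illustrative model of the descriptions above, not VMware code: the `VirtualDisk` class, its field names, and the format labels are hypothetical, and the sizes are made up for the example.

```python
from dataclasses import dataclass

# Hypothetical labels for the three VMDK provisioning formats described above.
THICK_FORMATS = {"eager_zeroed_thick", "lazy_zeroed_thick"}
ALL_FORMATS = THICK_FORMATS | {"thin"}


@dataclass
class VirtualDisk:
    provisioned_gb: int  # size presented to the guest
    written_gb: int      # space the guest has actually written
    fmt: str             # one of ALL_FORMATS

    def __post_init__(self):
        if self.fmt not in ALL_FORMATS:
            raise ValueError(f"unknown format: {self.fmt}")
        if not 0 <= self.written_gb <= self.provisioned_gb:
            raise ValueError("written_gb must be between 0 and provisioned_gb")

    def datastore_usage_gb(self) -> int:
        """Space taken from the datastore and unavailable to other VMs."""
        if self.fmt in THICK_FORMATS:
            return self.provisioned_gb  # fully reserved at creation time
        return self.written_gb          # thin: allocated on demand

    def zeroes_on_first_write(self) -> bool:
        """True when a new block must be zeroed before its first write."""
        return self.fmt != "eager_zeroed_thick"


for fmt in ("eager_zeroed_thick", "lazy_zeroed_thick", "thin"):
    disk = VirtualDisk(provisioned_gb=100, written_gb=20, fmt=fmt)
    print(fmt, disk.datastore_usage_gb(), disk.zeroes_on_first_write())
```

In this model only the thin disk leaves its unwritten 80 GB available to other VMs, and only the eager zeroed disk avoids zeroing work on the first write to a block.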

You can choose the disk provisioning type when you create a virtual disk, and you can change it later using a cold VM migration across two datastores or using Storage vMotion (if you have at least the vSphere Standard edition). Note that you can also change the type of each individual disk by choosing Configure per disk in the new HTML5 client.

There are also Raw Device Mapping (RDM) disks, where a disk at the ESXi level is mapped 1:1 to a VM (similar to a pass-through mode), with two compatibility modes (virtual or physical). Except for building guest clusters (clusters across VMs on different hosts), there is no need to use this type of disk.

There is no significant difference in performance for sequential I/O between the different types of virtual disks. For random I/O, thin VMDKs have the worst performance and higher latency (for lazy zeroed thick disks, it depends on whether a new block has to be written).

Storage types at the VM physical level

To access block devices such as VMDK virtual disks, virtual CD/DVD-ROM drives, or other SCSI devices, each VM uses storage controllers; at least one is added by default when you create a VM.

The following types of controller are available for a VM running on ESXi:

BusLogic: This is one of the first emulated SCSI virtual controllers available in VMware ESX. Now it's a legacy controller used mainly for legacy operating systems. It does not support VMDK larger than 2 TB.

LSI Logic Parallel: This was formerly known as LSI Logic and was the other SCSI virtual controller originally available in VMware ESX, used for operating systems such as Windows Server 2003.

LSI Logic SAS: This was introduced in vSphere 4.0, and is the evolution of the parallel driver, working as a SAS virtual controller and used in Windows Server 2008 or newer.

VMware Paravirtual (or PVSCSI): Also introduced in vSphere 4.0, this is a SCSI virtual controller designed to support very high throughput with minimal processing cost. It works in paravirtual mode rather than emulation mode, so the guest needs VMware Tools to recognize it.

Other virtual controllers are also possible in a VM, such as AHCI SATA (introduced in vSphere 5.5), IDE, and USB controllers, but usually for specific cases (for example, SATA or IDE controllers are usually used for virtual DVD drives).
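Two of the constraints mentioned for these controllers lend themselves to a quick sanity check. The sketch below is a hypothetical helper, not part of any VMware tool: the function name and controller labels are invented for illustration, and treating "2 TB" as 2048 GB is an assumption.

```python
from typing import List

BUSLOGIC_MAX_VMDK_GB = 2048  # assumption: "2 TB" taken as 2048 GB


def controller_warnings(controller: str, vmdk_gb: int,
                        vmware_tools_installed: bool) -> List[str]:
    """Return warnings for a controller/disk pairing, based on the list above."""
    warnings = []
    if controller == "buslogic" and vmdk_gb > BUSLOGIC_MAX_VMDK_GB:
        warnings.append("BusLogic does not support VMDKs larger than 2 TB")
    if controller == "pvscsi" and not vmware_tools_installed:
        warnings.append("PVSCSI requires VMware Tools to be recognized")
    return warnings


print(controller_warnings("buslogic", 4096, vmware_tools_installed=True))
print(controller_warnings("pvscsi", 500, vmware_tools_installed=False))
print(controller_warnings("lsilogic_sas", 500, vmware_tools_installed=False))
```

A real check would also take the guest operating system and the VM compatibility level into account, which this sketch leaves out.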

Note: When you create a VM, the default controller is optimized for good performance and compatibility. The controller type depends on the guest operating system (usually its driver is included in the operating system), the device type, and sometimes the VM's compatibility level. Sometimes, though, you can choose a different controller to improve performance, such as PVSCSI (useful for VMDKs under high load) or a new type available in vSphere 6.5.

With ESXi 6.5 and VM virtual hardware version 13, you can now also use a virtual NVMe. Virtual NVMe devices have reduced guest I/O processing overheads (over 50% compared to AHCI SATA SCSI device), which allows more VMs per host or more transactions per minute. Each virtual machine supports 4 NVMe controllers and up to 15 devices per controller.
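Taken together, the two limits above cap a VM at 4 × 15 = 60 virtual NVMe devices. The following sketch only does that arithmetic; the constant and function names are hypothetical, and the limits are the ones quoted in the text for ESXi 6.5.

```python
# Limits quoted in the text for ESXi 6.5 and virtual hardware version 13.
MAX_NVME_CONTROLLERS_PER_VM = 4
MAX_DEVICES_PER_NVME_CONTROLLER = 15


def max_nvme_devices() -> int:
    """Upper bound on virtual NVMe devices attached to one VM."""
    return MAX_NVME_CONTROLLERS_PER_VM * MAX_DEVICES_PER_NVME_CONTROLLER


def nvme_controllers_needed(disk_count: int) -> int:
    """Smallest number of NVMe controllers that can hold disk_count disks."""
    if disk_count < 0:
        raise ValueError("disk_count must not be negative")
    if disk_count > max_nvme_devices():
        raise ValueError(
            f"{disk_count} disks exceed the per-VM limit of {max_nvme_devices()}"
        )
    # Ceiling division without importing math.
    return -(-disk_count // MAX_DEVICES_PER_NVME_CONTROLLER)


print(max_nvme_devices())
print(nvme_controllers_needed(16))
```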

Virtual NVMe controllers are supported on vSphere 6.5 only on the following guest operating systems:

Windows 7 and 2008 R2 (hotfix required, refer to https://support.microsoft.com/en-us/kb/2990941)

Windows 8.1, 2012 R2, 10, 2016

RHEL, CentOS, NeoKylin 6.5 and later

Oracle Linux 6.5 and later

Ubuntu 13.10 and later

SLE 11 SP4 and later

Solaris 11.3 and later

FreeBSD 10.1 and later

Mac OS X 10.10.3 and later

Debian 8.0 and later

You can add a new NVMe virtual controller using the vSphere Web Client (this is not yet possible from the HTML5 client), as shown in the following steps:

Right-click on the virtual machine in the inventory and select the Edit Settings option

Click the Virtual Hardware tab, and select NVMe Controller from the New device drop-down menu

Click on Add

The controller appears in the Virtual Hardware devices list

Click OK


For more information on NVMe, see also KB 2147714, Using Virtual NVMe with ESXi 6.5 and virtual machine Hardware Version 13 (https://kb.vmware.com/kb/2147714).

For more information on PVSCSI, see also KB 1010398, Configuring disks to use VMware Paravirtual SCSI (PVSCSI) adapters (https://kb.vmware.com/kb/1010398).

Storage types at the ESXi logical level

At a high level, VMware vSphere accesses all storage through datastores, a logical abstraction over every storage type, much as an operating system uses drive letters or mount points to access a filesystem.

VMware vSphere 6.x has the following four main types of datastore:

Virtual Machine File System (VMFS) datastores: All block-based storage must first be formatted with VMFS to turn a block service into a file- and folder-oriented service

Network File System (NFS) datastores: These are for NAS storage

Virtual Volumes (VVol): Introduced in vSphere 6.0, this is a new paradigm for accessing SAN and NAS storage in a common way, with better integration and use of the storage array's capabilities

vSAN datastore: If you are using the vSAN solution, all your local storage devices can be pooled together in a single shared vSAN datastore

New datastores can be provisioned from the new HTML5 client, starting from a datacenter, a cluster, or a host: just right-click on the object, choose Storage, and then New Datastore.

For local disks, if you have configured the right RAID level on the controller (remember that ESXi does not provide software RAID features), you can just format the logical disks as VMFS datastores.

For external storage, before adding a new datastore, you must first configure the ESXi host, the fabric (if present), and the storage itself. This depends on the storage type and vendor and will be discussed later. You cannot directly add a vSAN datastore; the vSAN configuration is quite different, but the final result will be a vSAN datastore with its own format.

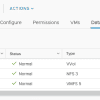

Of course, the same host can have multiple datastores, including datastores of different types.

At the datastore level, there isn't any difference between DAS and SAN: both are just block-based storage and become VMFS datastores. The functional difference is that a SAN disk can be shared across multiple hosts, while a local DAS disk cannot (although there are also shared SAS storage systems that are formally classified as DAS).

Storage types at the ESXi physical level

Excluding vSAN, which has a specific configuration, at the physical level we can have three different main types of storage:

Block-based storage accessed through a hardware adapter: This includes DAS storage or SAN FC storage.

Block-based storage accessed through a software adapter: This is the case for SAN iSCSI storage when the software initiator is used. Here, you first need to properly configure the network connectivity. After that, it becomes very similar to the first case.

NFS storage: Here, you first have to configure the IP network connectivity to your storage and then connect the NFS datastore.
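The order of operations for these three types can be written down as a small lookup table. This is a rough sketch of the list above rather than any VMware API: the labels and step wording are hypothetical, and VVol and vSAN are deliberately left out.

```python
from typing import Dict, List

# Hypothetical labels for the three physical storage types listed above.
BLOCK_HW = "block_hardware_adapter"  # DAS or SAN FC
BLOCK_SW = "block_software_adapter"  # e.g. SAN iSCSI with the software initiator
NFS = "nfs"

_BLOCK_STEPS = [
    "configure the ESXi host adapter, the fabric (if present), and the storage",
    "format the device as a VMFS datastore",
]

PREPARATION_STEPS: Dict[str, List[str]] = {
    BLOCK_HW: list(_BLOCK_STEPS),
    # Software adapters need the network first, then follow the block path.
    BLOCK_SW: ["configure network connectivity"] + _BLOCK_STEPS,
    NFS: [
        "configure IP network connectivity to the storage",
        "connect the NFS datastore",
    ],
}


def datastore_type(storage_kind: str) -> str:
    """Datastore type that results from each physical storage type."""
    if storage_kind not in PREPARATION_STEPS:
        raise ValueError(f"unknown storage kind: {storage_kind}")
    return "NFS" if storage_kind == NFS else "VMFS"


for kind, steps in PREPARATION_STEPS.items():
    print(f"{kind} -> {datastore_type(kind)} datastore")
    for number, step in enumerate(steps, start=1):
        print(f"  {number}. {step}")
```

The table makes the point from the list explicit: once network connectivity is in place, the software adapter case follows the same steps as the hardware adapter case.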

For the physical storage adapters, VMware ESXi supports several types of protocols and technologies (refer to the hardware compatibility list to check the supported level):

Fibre Channel Host Bus Adapter (FC HBA): This is the common and historical way to implement FC-based storage, but it requires a dedicated fabric.

iSCSI HBA: These are specialized PCIe cards that implement completely in hardware the entire iSCSI stack, reducing the load of the host CPU.

Converged network adapters (CNAs) for FCoE or iSCSI: These are mostly 10 Gbps (or faster) Ethernet adapters providing hardware (or hardware-assisted) FCoE or iSCSI functionality on converged (or dedicated) networks.

RDMA over Converged Ethernet (RoCE): This is a network protocol that allows remote direct memory access (RDMA) over an Ethernet network. Starting with vSphere 6.5, RoCE-certified adapters can be used for converged networks.

InfiniBand HCA: Mellanox Technologies InfiniBand HCA device drivers are available directly from Mellanox Technologies. Mostly used for the network part rather than the storage part, these adapters can be interesting in converged networks and also in vSAN implementations.

This tutorial is an excerpt from "Mastering VMware vSphere 6.5" by Andrea Mauro, Paolo Valsecchi & Karel Novak and published by Packt.
