Each Docker container has its own network stack. This is due to the Linux kernel NET namespace: a new NET namespace is instantiated for each container, and it cannot be seen from outside the container or from other containers.
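On Linux, each process's network namespace is exposed as the symlink `/proc/<pid>/ns/net`, whose target reads like `net:[4026531992]`. According to the namespaces(7) man page, two processes are in the same namespace when these links have the same device ID and inode number. The minimal Python sketch below applies that rule to check whether two processes share a network stack. The helper names `parse_ns_link`, `net_namespace_id`, and `same_net_namespace` are hypothetical. Reading another process's links generally requires root on the host.

```python
from __future__ import annotations

import os
import re

# Target of a /proc/<pid>/ns/net symlink, e.g. "net:[4026531992]".
_NS_LINK = re.compile(r"^(?P<kind>\w+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def net_namespace_id(pid: int | str = "self") -> tuple[int, int]:
    """Identify a process's network namespace by (device ID, inode). Linux only."""
    # os.stat follows the symlink to the namespace file itself.
    st = os.stat(f"/proc/{pid}/ns/net")
    return st.st_dev, st.st_ino


def same_net_namespace(pid_a: int | str, pid_b: int | str) -> bool:
    """True if both processes share one network stack (same NET namespace)."""
    return net_namespace_id(pid_a) == net_namespace_id(pid_b)


if __name__ == "__main__" and os.path.exists("/proc/self/ns/net"):
    kind, inode = parse_ns_link(os.readlink("/proc/self/ns/net"))
    print(f"this process is in {kind} namespace {inode}")
```

On a Docker host, a container's PID can be obtained with `docker inspect --format '{{.State.Pid}}' <container>` and passed to `same_net_namespace`. For two containers started with default settings, this should return `False`, because each container gets its own namespace.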

Docker networking is powered by the following network components and services.

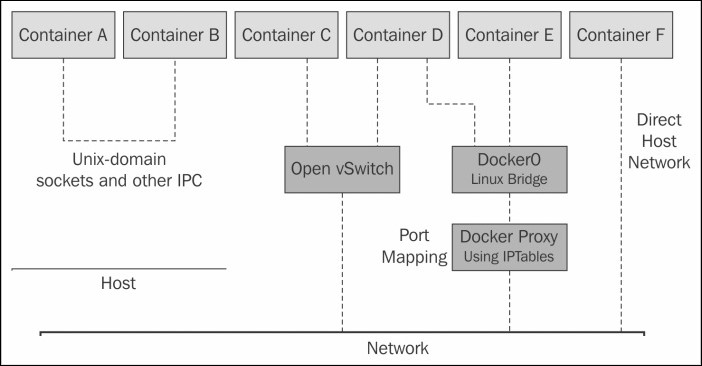

- Linux bridges: These are L2/MAC learning switches built into the kernel and used for forwarding.

- Open vSwitch: This is an advanced bridge that is programmable and supports tunneling.

- NAT: Network address translators are intermediate entities that translate IP addresses and ports (SNAT, DNAT, and so on).

- iptables: This is a policy engine in the kernel used for managing packet forwarding, firewall, and NAT features.

- AppArmor/SELinux: These can be used to define security (access control) policies for each application.
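To make the first item concrete, here is a toy Python model of an L2/MAC learning switch. It records the port on which each source MAC address arrived (its forwarding database) and uses that table to forward later frames. Frames whose destination is unknown, or that are broadcast, are flooded. This is a sketch of the general learning-bridge technique under simplifying assumptions, not the kernel's bridge code. Entry aging and spanning tree are omitted, and port names such as `veth-a` are hypothetical stand-ins for containers' veth interfaces.

```python
from __future__ import annotations

from dataclasses import dataclass, field

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass
class LearningBridge:
    """Toy model of an L2/MAC learning switch (no entry aging, no STP)."""

    ports: set[str]
    # Forwarding database: MAC address -> port it was last seen on.
    fdb: dict[str, str] = field(default_factory=dict)

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> set[str]:
        """Process one frame and return the set of ports it is forwarded to."""
        if in_port not in self.ports:
            raise ValueError(f"unknown port {in_port!r}")
        # Learn: src_mac is reachable through the port the frame came in on.
        self.fdb[src_mac] = in_port
        out_port = self.fdb.get(dst_mac)
        if dst_mac == BROADCAST or out_port is None:
            # Broadcast or unknown destination: flood to every other port.
            return self.ports - {in_port}
        if out_port == in_port:
            # Destination is on the ingress segment: drop (filter) the frame.
            return set()
        return {out_port}


if __name__ == "__main__":
    bridge = LearningBridge(ports={"veth-a", "veth-b", "veth-c"})
    a, b = "02:42:ac:11:00:02", "02:42:ac:11:00:03"
    print(sorted(bridge.receive("veth-a", a, b)))  # B unknown: ['veth-b', 'veth-c']
    print(sorted(bridge.receive("veth-b", b, a)))  # A learned: ['veth-a']
    print(sorted(bridge.receive("veth-a", a, b)))  # B learned: ['veth-b']
```

After one frame in each direction, the bridge stops flooding and delivers traffic only to the port where the destination was last seen.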

Various networking components can be used with Docker, providing new ways to access and use Docker-based services. As a result, many libraries have emerged, each taking a different approach to networking. Some of the most prominent are Docker Compose, Weave, and Kubernetes. The following figure depicts the root ideas of Docker networking:

Docker networking is at a very early stage, and the developer community has made many interesting contributions, such as Pipework and Clocker, each reflecting a different aspect of Docker networking. To standardize networking, however, the Docker team has established a new project called libnetwork.

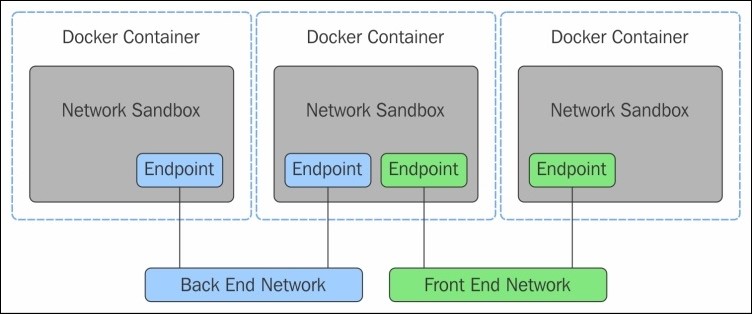

libnetwork implements the container network model (CNM), which formalizes the steps required to provide networking for containers while providing an abstraction that can be used to support multiple network drivers. The CNM is built on three main components: sandbox, endpoint, and network. Let's take a quick look at them.

- Sandbox

A sandbox contains the configuration of a container's network stack. This includes management of the container's interfaces, routing table, and DNS settings. An implementation of a sandbox could be a Linux network namespace, a FreeBSD jail, or another similar concept. A sandbox may contain many endpoints from multiple networks.

- Endpoint

An endpoint connects a sandbox to a network. An implementation of an endpoint could be a veth pair, an Open vSwitch internal port, or something similar. An endpoint can belong to only one network, and it can belong to only one sandbox.

- Network

A network is a group of endpoints that are able to communicate with each other directly. An implementation of a network could be a Linux bridge, a VLAN, and so on. Networks consist of many endpoints, as shown in the following diagram:
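The relationships among the three CNM components can be sketched as a small Python data model. This is an illustration under assumptions, not libnetwork's actual Go types. `Network`, `Endpoint`, `Sandbox`, `create_endpoint`, and `join` are hypothetical names. An endpoint is bound to exactly one network when it is created and can be joined to at most one sandbox. A sandbox, by contrast, may hold endpoints from several networks.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Network:
    """A group of endpoints that can talk directly (e.g. one Linux bridge)."""

    name: str
    endpoints: list[Endpoint] = field(default_factory=list)

    def create_endpoint(self, name: str) -> Endpoint:
        # An endpoint is created on exactly one network and never moves.
        endpoint = Endpoint(name, self)
        self.endpoints.append(endpoint)
        return endpoint


@dataclass(eq=False)
class Endpoint:
    """Connects a sandbox to a network (e.g. one end of a veth pair)."""

    name: str
    network: Network = field(repr=False)
    sandbox: Sandbox | None = field(default=None, repr=False)


@dataclass(eq=False)
class Sandbox:
    """A container's network stack (e.g. a Linux network namespace)."""

    container: str
    endpoints: list[Endpoint] = field(default_factory=list)

    def join(self, endpoint: Endpoint) -> None:
        # Enforce "an endpoint belongs to only one sandbox".
        if endpoint.sandbox is not None:
            raise ValueError(f"endpoint {endpoint.name!r} already belongs to a sandbox")
        endpoint.sandbox = self
        self.endpoints.append(endpoint)

    def networks(self) -> set[str]:
        """Names of all networks this sandbox is attached to."""
        return {endpoint.network.name for endpoint in self.endpoints}


if __name__ == "__main__":
    frontend, backend = Network("frontend"), Network("backend")
    web = Sandbox("web")
    web.join(frontend.create_endpoint("web-frontend"))
    web.join(backend.create_endpoint("web-backend"))
    print(sorted(web.networks()))  # ['backend', 'frontend']
```

Keeping the network reference fixed on the endpoint, and checking the sandbox reference in `join`, is one simple way to encode the ownership rules described above.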

The CNM provides the following contract between networks and containers:

- All containers on the same network can communicate freely with each other

- Multiple networks are the way to segment traffic between containers and should be supported by all drivers

- Multiple endpoints per container are the way to join a container to multiple networks

- An endpoint is added to a network sandbox to provide it with network connectivity
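The contract can be checked with a few lines of Python. This sketch assumes each endpoint is described as a hypothetical `(container, network)` pair. A container with endpoints on several networks joins all of them, and two containers can communicate directly only if they share at least one network. Routing between networks through a multi-homed container is deliberately not modeled.

```python
from __future__ import annotations

from collections import defaultdict
from itertools import combinations


def networks_by_container(endpoints: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Group (container, network) endpoint pairs by container."""
    joined: dict[str, set[str]] = defaultdict(set)
    for container, network in endpoints:
        # Each extra endpoint joins the container to another network.
        joined[container].add(network)
    return dict(joined)


def can_communicate(joined: dict[str, set[str]], a: str, b: str) -> bool:
    """Direct communication is allowed only when two containers share a network."""
    return bool(joined.get(a, set()) & joined.get(b, set()))


def reachable_pairs(joined: dict[str, set[str]]) -> set[frozenset[str]]:
    """All unordered container pairs that share at least one network."""
    return {
        frozenset(pair)
        for pair in combinations(sorted(joined), 2)
        if can_communicate(joined, *pair)
    }


if __name__ == "__main__":
    joined = networks_by_container([
        ("proxy", "frontend"),
        ("web", "frontend"),
        ("web", "backend"),  # second endpoint: web joins both networks
        ("db", "backend"),
    ])
    print(can_communicate(joined, "proxy", "web"))  # True (share frontend)
    print(can_communicate(joined, "proxy", "db"))   # False (segmented)
```

Here the two networks segment `proxy` from `db`. The multi-homed `web` container reaches both of them because it has one endpoint on each network.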

If you want to learn more about Docker networking, check out "Learning Docker Networking" by Rajdeep Dua, Vaibhav Kohli, and Santosh Kumar Konduri, published by Packt. Network Computing readers can get a 50% discount here with the promo code RG50LDN through Nov. 30, 2016.