Weekly Roundup: NVIDIA Addresses AI Infrastructure Issues

NVIDIA's announcements this week, like other industry efforts this year, aim to speed up Ethernet networking for AI infrastructure.

November 25, 2023

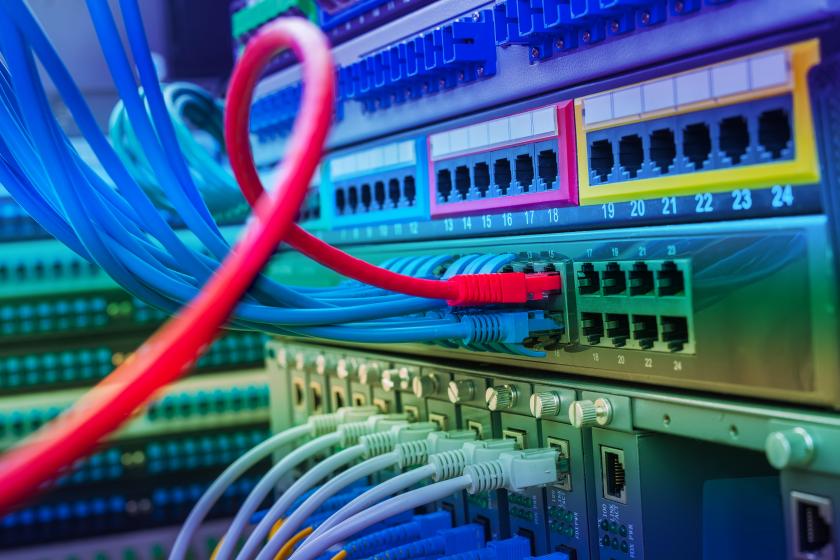

The rapid and growing adoption of artificial intelligence is stressing traditional IT infrastructure. As we’ve reported, some enterprises must keep AI and machine learning model training on-premises to ensure data privacy and protect intellectual property. These enterprises need to make significant changes to everything from processors and core networking elements to power consumption. Cloud providers offering AI services face similar issues. This week, NVIDIA moved to address these issues in two ways.

In one announcement, the company introduced an infrastructure accelerator called a SuperNIC. In a backgrounder on the device, NVIDIA described it as a “new class of networking accelerator designed to supercharge AI workloads in Ethernet-based networks.” It offers some features and capabilities similar to those of SmartNICs, data processing units (DPUs), and infrastructure processing units (IPUs).

The SuperNIC is designed to provide ultra-fast networking for GPU-to-GPU communication, reaching speeds of up to 400 Gb/s. The technology that enables this acceleration is remote direct memory access (RDMA) over Converged Ethernet, known as RoCE.
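To make the 400 Gb/s figure more concrete, the short calculation below converts the line rate into bytes per second and estimates an idealized transfer time. This is a rough sketch. It assumes the full line rate carries payload and ignores Ethernet, IP, UDP, and RoCE header overhead. The 10 GB payload is an arbitrary illustrative value, not an NVIDIA figure, and the helper names are hypothetical.

```python
# Back-of-the-envelope estimate of idealized transfer time at a given line rate.
# Assumption: the full line rate carries payload (protocol/header overhead ignored).

LINE_RATE_GBPS = 400  # SuperNIC line rate in gigabits per second (from the article)


def bytes_per_second(gbps: float) -> float:
    """Convert a line rate in Gb/s (decimal, 10**9 bits) to bytes per second."""
    return gbps * 1e9 / 8


def transfer_seconds(payload_bytes: float, gbps: float = LINE_RATE_GBPS) -> float:
    """Idealized time to move payload_bytes over one link at the given rate."""
    return payload_bytes / bytes_per_second(gbps)


if __name__ == "__main__":
    payload = 10e9  # hypothetical 10 GB of data exchanged between two GPUs
    print(f"{bytes_per_second(LINE_RATE_GBPS) / 1e9:.0f} GB/s")  # 50 GB/s
    print(f"{transfer_seconds(payload):.2f} s")                  # 0.20 s
```

Real-world throughput will be lower once framing and header overhead are counted, so treat these numbers as an upper bound.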

The device performs several specialized tasks that contribute to improved performance. These include high-speed packet reordering; advanced congestion control that uses real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks; full-stack AI optimization; and programmable compute on the input/output (I/O) path.
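Packet reordering is a general networking technique. When packets belonging to one flow arrive out of order (for example, because they took different paths through the network), the receiver holds the early arrivals until the gaps are filled. It then delivers the packets in sequence. The sketch below illustrates that general idea in software with a hypothetical `ReorderBuffer` class keyed on sequence numbers. It is not NVIDIA's implementation, because the announcement does not describe how the SuperNIC performs reordering in hardware.

```python
# Minimal software illustration of in-order delivery via a reorder buffer.
# Hypothetical class for illustration only; not NVIDIA's SuperNIC design.

from typing import Dict, List


class ReorderBuffer:
    """Buffers out-of-order packets and releases them in sequence-number order."""

    def __init__(self, first_seq: int = 0) -> None:
        self.next_seq = first_seq              # next sequence number to deliver
        self.pending: Dict[int, bytes] = {}    # early arrivals waiting for gaps to fill

    def receive(self, seq: int, payload: bytes) -> List[bytes]:
        """Accept one packet; return any payloads that are now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate or already delivered: drop it
        self.pending[seq] = payload
        delivered: List[bytes] = []
        # Drain the contiguous run of buffered packets starting at next_seq.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


if __name__ == "__main__":
    buf = ReorderBuffer()
    for seq, data in [(1, b"B"), (2, b"C"), (0, b"A"), (3, b"D")]:
        print(seq, buf.receive(seq, data))
    # 1 []
    # 2 []
    # 0 [b'A', b'B', b'C']
    # 3 [b'D']
```

A dictionary keeps the sketch short. A real implementation would need a bounded buffer and a policy for gaps that never fill, such as a timeout. This sketch omits both.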

Keeping the AI infrastructure focus on Ethernet

Besides Ethernet, there are other high-performance networking technologies (e.g., InfiniBand) for cloud and on-premises data centers. These technologies are often optimal for specialized high-performance computing workloads. However, they are more expensive than Ethernet, and fewer networking professionals have expertise in them.

So, given that most enterprises will stick with Ethernet, there have been several industry efforts to fine-tune it for demanding AI infrastructures.

Earlier this year, we reported on the formation of the Ultra Ethernet Consortium (UEC), which aims to speed up AI jobs running over Ethernet by building a complete Ethernet-based communication stack architecture for AI workloads.

NVIDIA’s other news this week was in a similar area. Specifically, it announced that Dell Technologies, Hewlett Packard Enterprise, and Lenovo will be the first to integrate NVIDIA Spectrum-X Ethernet networking technologies for AI into their server portfolios to help enterprise customers speed up generative AI workloads.

The networking solution is purpose-built for generative AI. According to the company, it offers enterprises a new class of Ethernet networking that can achieve 1.6x higher networking performance for AI communication compared with traditional Ethernet offerings.

Long live Ethernet

The two NVIDIA announcements, the work of the Ultra Ethernet Consortium, and other industry efforts highlight Ethernet's endurance. They also reflect the desire of enterprises and cloud hyperscalers to keep using the technology.

To put the technology’s staying power into perspective, consider that 2023 marks the 50th anniversary of its birth. Those interested in its history should read two pieces marking the occasion: one from the IEEE Standards Association and the other from IEEE Spectrum.
