The Serverless Security Shift

Serverless security doesn't have to be a struggle if you pay attention to the apps and focus on securing them.

May 23, 2019

Security in the cloud has always followed a shared responsibility model. What the provider manages, the provider secures. What the customer deploys, the customer secures. Generally speaking, if you have no control over it in the cloud, then the onus of securing it is on the provider.

Serverless, which is kind of like a SaaS-hosted PaaS (if that even makes sense), extends that model to reach higher in the stack. That extension leaves the provider with most of the responsibility for security with very little left for the customer.

The problem is that the 'very little left' actually carries the bulk of risk, especially when we consider Function as a Service (FaaS).

Serverless shrinks the responsibility stack

Serverless seeks to eliminate (abstract away) even more of the application stack, leaving very little for the customer (that's you) to secure. On the one hand, that seems like a good thing. After all, if you have only the application layer (layer 7) to worry about securing, that should be easier than trying to secure the application layer, the platform (web or app server), and its operating system.


Serverless expands entry points

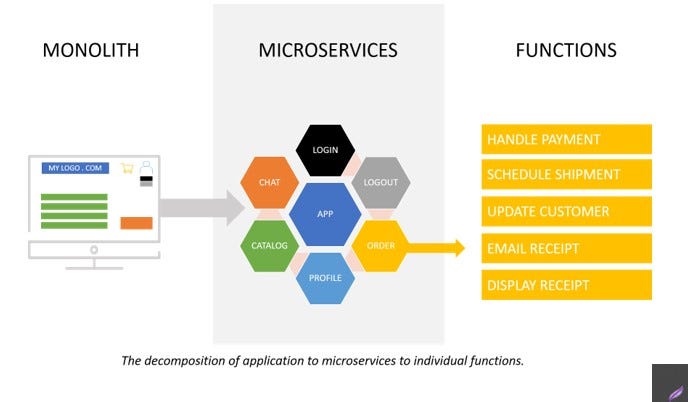

Serverless may constrict the vertical depth of the stack you need to protect, but it simultaneously broadens the horizontal surface by introducing greater decomposition and distribution of that layer.

What this means is that you need to apply security on a function-by-function basis. To put that in perspective for non-developer types, there can be hundreds (thousands even) of functions per application. So instead of multiple products to secure, you have multiple points of entry to secure.
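To make "function by function" concrete, here is a minimal sketch of one function validating its own input before doing any work. The handler signature, the event shape (a dict with a `body` key), and the `ORDER_SCHEMA` fields are assumptions chosen for illustration, not any particular provider's API.

```python
import json

# Hypothetical schema for one function's input: field name -> expected type.
ORDER_SCHEMA = {"order_id": str, "quantity": int}


def validate_body(raw_body, schema):
    """Parse a JSON body and check it against a simple field/type schema.

    Returns (payload, None) on success or (None, error_message) on failure.
    """
    try:
        payload = json.loads(raw_body or "")
    except (TypeError, ValueError):
        return None, "body is not valid JSON"
    if not isinstance(payload, dict):
        return None, "body must be a JSON object"
    # Reject unexpected fields instead of silently ignoring them.
    extra = set(payload) - set(schema)
    if extra:
        return None, "unexpected fields: " + ", ".join(sorted(extra))
    for field, expected_type in schema.items():
        if field not in payload:
            return None, "missing field: " + field
        value = payload[field]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            return None, "wrong type for field: " + field
    return payload, None


def handler(event, context=None):
    """Hypothetical HTTP-triggered function entry point (assumed event shape)."""
    payload, error = validate_body(event.get("body"), ORDER_SCHEMA)
    if error:
        return {"statusCode": 400, "body": json.dumps({"error": error})}
    return {"statusCode": 200, "body": json.dumps({"accepted": payload["order_id"]})}
```

Every function that accepts requests would need its own equivalent of this check, which is exactly why the number of entry points matters.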


But wait, there's more.

Each of those functions may be invoking external services or loading externally sourced components. That's nearly a given, because modern applications rely on open source components for about 80% of their total functionality, on average. That means your responsibility is not only to secure the code your developers write, but the code other developers write as well. That's no small ask. Scans and analysis of those components show an 88% growth in app vulnerabilities in such packages over the past two years. (Source: Snyk, 2019 State of Open Source Security)

The increase in dependency on externally sourced components, combined with the broadening of the attack surface thanks to decomposition, means that securing serverless apps must focus on the code itself. This requires a shift away from post-delivery, network-deployed services and into the CI/CD pipeline.

It means continuous security scans - static and dynamic. It means code reviews. It means increasing attention to what components are used and from where they are obtained.
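One small, concrete form of paying attention to components is a pipeline check that fails the build when a dependency is not pinned to an exact version. The sketch below assumes a Python project with a pip-style requirements file; the function name `find_unpinned` and the rule that only `==` counts as pinned are illustrative choices, and a check like this complements a real vulnerability scanner rather than replacing one.

```python
import re

# A requirement counts as pinned here only if it uses an exact "==" version.
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9._,-]+\])?==[A-Za-z0-9._!+-]+$")


def find_unpinned(requirements_text):
    """Return requirement specs that are not pinned to an exact version."""
    unpinned = []
    for line in requirements_text.splitlines():
        # Drop inline comments and surrounding whitespace.
        spec = line.split("#", 1)[0].strip()
        if not spec or spec.startswith("-"):
            # Skip blank lines and pip options such as "-r other.txt".
            continue
        # Ignore environment markers (after ";") and hash options when matching.
        spec = spec.split(";", 1)[0].split(" --hash", 1)[0]
        # Remove internal whitespace so "pkg == 1.0" is treated like "pkg==1.0".
        spec = "".join(spec.split())
        if not PINNED.match(spec):
            unpinned.append(spec)
    return unpinned
```

In a pipeline, a non-empty result would be treated as a failed step. Requirements pulled straight from a URL or a repository are also reported, since they do not match the pinned pattern, which prompts the "from where" question as well.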

It means employing more security practices earlier in the development cycle. To be cliché, it means shifting security left.

But that doesn't mean there aren't more traditional security options available to secure serverless apps. It turns out that you can use familiar security services with serverless to protect and defend against attacks.

Traditional security and serverless

Web application firewalls are designed to intercept, scan, and evaluate application layer requests. That means HTTP-based messages, which are how the bulk of serverless functions are invoked today. By forcing function calls (serverless requests) through a web application firewall, you can provide an extra layer of assurance that the inbound request isn't carrying malicious data or code.
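For readers who have not worked with a WAF, the toy sketch below shows the basic idea of scanning an inbound request against signatures before it reaches a function. The three patterns and the `inspect_request` function are assumptions made up for illustration; a real WAF relies on a much larger, maintained rule set and far more robust parsing.

```python
import re
from urllib.parse import unquote_plus

# Illustrative signatures only; real WAF rule sets are far larger.
SUSPICIOUS_PATTERNS = {
    "script tag": re.compile(r"<\s*script", re.IGNORECASE),
    "sql union select": re.compile(r"\bunion\b.+\bselect\b", re.IGNORECASE),
    "path traversal": re.compile(r"\.\./"),
}


def inspect_request(query_params, body):
    """Return the names of the rules that an inbound request matches."""
    # Decode URL-encoded values so encoded payloads are scanned in plain form.
    values = [unquote_plus(v) for v in query_params.values()]
    values.append(body or "")
    hits = []
    for name, pattern in SUSPICIOUS_PATTERNS.items():
        if any(pattern.search(value) for value in values):
            hits.append(name)
    return hits
```

A request that returns any hits would be blocked or logged before the function is invoked.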

API gateways, too, are expanding beyond management functions to include security. An API gateway or API security service can provide capabilities similar to those of a WAF. There are many cloud-native and traditional API security options you can take advantage of to secure serverless applications.

You'll note that regardless of what methods you employ to secure serverless apps, you are going to need to be familiar with app layer concepts and technologies. That means fluency in HTTP. That means being able to recognize imports of externally sourced components and questioning their status. It means creating a new checklist for your "go live gate" that focuses almost entirely on the application and virtually ignores anything in the stack below it.
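Recognizing imports of externally sourced components can be partly automated. As a rough sketch, assuming the functions are written in Python, the standard library's `ast` module can list the top-level modules a function imports and flag any that are not on an approved list. The `external_imports` name and the idea of an approved set are hypothetical; how that list is maintained is up to your team.

```python
import ast


def external_imports(source, approved):
    """Return sorted top-level module names imported by `source` that are not approved."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # "import boto3.session" is attributed to the "boto3" package.
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # level > 0 is a relative import of the project's own code; skip it.
            if node.level == 0 and node.module:
                found.add(node.module.split(".")[0])
    return sorted(found - set(approved))
```

Anything this returns is a candidate for the "where did this come from, and is it current?" question on the go-live checklist.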

Modern means apps

Most of the modern and emerging architectures and deployment models, like serverless, are heavily tilted toward applications. That's unsurprising in the era of application capital, where apps can make or break businesses. That focus should be reflected in IT and, in particular, security. That means more attention to securing the application layer whether deployed as functions in a serverless model or in the data center.

Serverless security doesn't have to be a struggle if you pay attention to the apps and focus on securing them.