Using FPGAs to Survive the Death of Moore’s Law, Part 1

Chips built for specific tasks, such as Google’s Tensor Processing Unit for deep neural network inference, may be the key to meeting the processing needs of new applications.

November 11, 2019

One of the most cherished premises of the computing age, Moore’s Law, has been quietly eroding for years under the influence of another maxim, the law of diminishing returns. The tech industry has operated as if Gordon Moore’s prediction (strictly about the number of transistors on a chip, but popularly paraphrased as a doubling of processing power every 18 months) were an immutable law, and many of its current innovations rely on that steady increase in server CPU power. Now that the prediction clearly no longer holds, the industry must reassess how it will meet its processing needs.

Moore’s Law Is No More

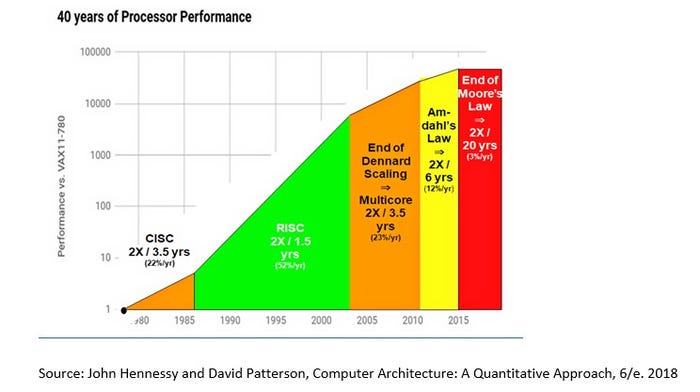

It’s not as if someone flipped a switch and Moore’s Law suddenly vanished. Its validity has been in decline for a while, but the evidence is only now coming to the fore, because several developments prolonged the performance curve. Moore’s Law is also not the only trend reaching its limit: Dennard scaling has ended as well, and Amdahl’s Law caps the gains available from adding more cores. The graph below shows processor performance over the last 40 years and the eras defined by these limits:

[Figure: Processor performance over the last 40 years]

Moore’s maxim predates RISC computing, but its popular 18-month version fit best during the RISC era that began in the 1980s, when processor performance really did double about every 18 months. As Dennard scaling broke down and clock frequencies per chip stopped climbing, multicore CPUs helped prolong the performance curve. It is important to note, however, that even at the start of the century we were no longer on the Moore’s Law curve: during this period, performance took about 3.5 years to double.

Parallelizing the execution of a process can provide an initial performance boost, but there is always a natural limit, because some parts of a task cannot be parallelized. This is the point of Amdahl’s Law: the serial portion caps the achievable speedup no matter how many cores are added. As that limit takes hold, each additional CPU core delivers a smaller benefit, and the time between performance improvements grows even longer.
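Amdahl’s Law is usually written as a simple formula: if a fraction p of a task’s running time can be parallelized and that portion is spread across n cores, the overall speedup is 1 / ((1 - p) + p / n). The minimal Python sketch below evaluates it; the helper name `amdahl_speedup` and the 95 percent parallel fraction are illustrative assumptions, not figures taken from the graph.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Overall speedup predicted by Amdahl's Law.

    parallel_fraction: share of the original run time that can be parallelized (0..1).
    cores: number of cores the parallel share is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # the part that always runs on one core
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative assumption: 95% of the work parallelizes perfectly.
    p = 0.95
    for n in (1, 2, 4, 8, 16, 64, 1024):
        print(f"{n:>5} cores -> {amdahl_speedup(p, n):5.2f}x")
    # The serial 5% caps the speedup at 1 / 0.05 = 20x, however many cores are added.
    print(f"limit -> {1.0 / (1.0 - p):5.2f}x")
```

With these assumptions, going from 16 to 64 cores (four times the hardware) raises the speedup only from about 9.1x to about 15.4x, and no number of cores can push it past 20x.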

In a sense, computer processing is a victim of its own success. As the graph shows, CPU performance is now projected to take 20 years to double. In other words, Moore’s Law is effectively dead.
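Doubling times and annual growth rates are two views of the same compounding arithmetic: a quantity growing at an annual rate r doubles after ln 2 / ln(1 + r) years. The short Python sketch below converts between the two for the doubling periods mentioned in this article; the helper names are hypothetical, and because the figures in the graph are rounded, the computed rates are approximations rather than exact readings of it.

```python
import math


def annual_rate_for_doubling(years: float) -> float:
    """Compound annual growth rate that doubles a quantity in `years` years."""
    return 2.0 ** (1.0 / years) - 1.0


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at compound annual growth rate `annual_rate`."""
    return math.log(2.0) / math.log1p(annual_rate)


if __name__ == "__main__":
    # Doubling periods mentioned in this article.
    for label, years in [("RISC era", 1.5), ("early 2000s", 3.5), ("projection", 20.0)]:
        rate = annual_rate_for_doubling(years)
        print(f"{label:12} doubles every {years:4.1f} yr -> {rate:6.1%} per year")
    # The reverse direction: growth of 3 percent per year.
    print(f"3% per year doubles in {doubling_time(0.03):.1f} years")
```

A 20-year doubling works out to roughly 3.5 percent annual growth, while growth of exactly 3 percent per year would take about 23 years to double, so “20 years” and “three percent” are both rounded descriptions of the same slowdown.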

Rethinking Processing Power

Ever-doubling processing power came to be taken for granted, spawning entire industries that may now be left in the lurch. For example, the software industry assumes that processing power will keep pace with data growth and will meet the needs of future software, so efficiency in software architecture and design has been treated as less important. Indeed, software increasingly relies on abstraction layers that make programming and scripting more user-friendly, but at the cost of processing power.

A good example is the widespread use of virtualization, which lifts one burden while creating another. Virtualization is a software abstraction of the underlying physical resources, and that abstraction carries its own processing cost. On the one hand, it makes more efficient use of hardware resources; on the other, relying on server CPUs as generic processors for both virtualized software execution and input/output data processing places a considerable burden on those CPUs.

The cloud industry and, following in its footsteps, the telecom industry provide a helpful example of the consequences of this burden. The cloud industry was founded on the premise that standard Commercial-Off-The-Shelf (COTS) servers are powerful enough to process any type of computing workload. Virtualization, containerization, and other abstractions make it possible to share server resources among multiple tenants through “as-a-service” models.

Building on the initial success of cloud providers, telecom providers replicated this approach in their networks with initiatives such as Software-Defined Networking (SDN), Network Functions Virtualization (NFV), and cloud-native computing. The underlying business-model assumption, however, is that as the number of clients and the volume of work increase, all that is needed is to add more servers.

However, as the graph above shows, server processing performance is projected to grow only about three percent per year over the next 20 years. That is far below expectations that the amount of data to be processed will triple over the next five years.
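To make the gap concrete, the Python sketch below compounds both trends over five years, using this article’s figures of about three percent annual growth in per-server performance and a tripling of data. Treating the ratio of the two as the extra server count required under the “just add more servers” model is a simplifying assumption of this sketch: it ignores utilization, hardware acceleration, and software efficiency gains. The helper names are hypothetical.

```python
def compound(annual_rate: float, years: float) -> float:
    """Growth factor after `years` years at compound annual rate `annual_rate`."""
    return (1.0 + annual_rate) ** years


def implied_annual_rate(growth_factor: float, years: float) -> float:
    """Compound annual rate that produces `growth_factor` over `years` years."""
    return growth_factor ** (1.0 / years) - 1.0


if __name__ == "__main__":
    years = 5
    cpu_gain = compound(0.03, years)  # per-server performance at ~3% per year (from the article)
    data_gain = 3.0                   # data volume triples over five years (from the article)

    print(f"Per-server performance after {years} years: {cpu_gain:.2f}x")
    print(f"Annual data growth needed to triple: {implied_annual_rate(data_gain, years):.1%}")
    # Simplifying assumption: required capacity scales linearly with data volume.
    print(f"Servers needed relative to today: {data_gain / cpu_gain:.2f}x")
```

Under these assumptions, per-server performance rises only about 16 percent in five years while data grows roughly 25 percent per year, so the server fleet would need to grow by roughly 2.6 times just to keep up.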

Accelerating Hardware

If growth in processor performance has been slowing for about two decades, why haven’t the effects been more obvious? Cloud companies seem to be succeeding without any signs of performance issues. The answer is hardware acceleration.

The pragmatism that made cloud companies successful also shaped their response to this challenge. If server CPU performance would not increase as expected, they would need to add processing power from elsewhere. In other words, they needed to accelerate the server hardware.

John Hennessy and David Patterson, winners of the Turing Award and authors of “Computer Architecture: A Quantitative Approach,” have addressed the end of Moore’s Law. They point to the example of Domain-Specific Architectures (DSAs), which are purpose-built processors that accelerate a few application-specific tasks.

What makes DSAs appealing is the ability to tailor different kinds of processors to the needs of specific tasks rather than relying on general-purpose processors like CPUs to handle a multitude of tasks. One example they use is the Tensor Processing Unit (TPU) built by Google for deep neural network inference. The TPU was built specifically for this task, and since the task is central to Google’s business, offloading it to a dedicated processing chip makes perfect sense.

Cloud providers need to accelerate their workloads and have adopted acceleration technologies of one type or another. For instance, Graphics Processing Units (GPUs) have been adapted to support multiple applications, and many cloud companies use them for hardware acceleration. For networking, Network Processing Units (NPUs) have been widely used. What both options have in common is a large number of smaller processors: workloads are broken down and parallelized to run across many of them.

The conclusion of this article will look at how a technology as old as Moore’s Law, Field Programmable Gate Arrays (FPGAs), is being used to help accelerate workloads. Could FPGAs present an effective remedy in light of the sunsetting of Moore’s Law?
