Gen-Z Promises Performance Boost

Industry consortium is developing a new interconnect to avoid storage bottlenecks.

December 1, 2016

It’s been apparent to the industry for some time that linking DRAM to CPUs and peripheral devices is running into limits on both bandwidth and physical connection count. The two issues are closely intertwined. As we saw years ago with disk drives, parallel interfaces are limited by the need to clock all the signals at the same time. The more connections there are, the more difficult this gets, and raising the clock rate hits a wall at a relatively low frequency.

The performance ceiling on both the DRAM bus and PCIe is a serious issue in systems design. CPUs are starved for memory access, which has led the industry to devise multi-layer caches in the CPUs to mask access latency. Dual-channel memory systems try to address this issue, but that means doubling the already high pin count for the memory ports on the CPU chip, which is expensive.

The industry is searching for a way out. One approach, called Gen-Z and backed by a consortium of notable technology vendors such as Hewlett Packard Enterprise, IBM, and Huawei, plans to use serial interfaces to talk to the memory devices instead of the clumsy and limited parallel interfaces used today. It will also address the peripheral-to-memory bottleneck.

This technology will open up a spectrum of opportunities for building systems more powerful than today’s COTS architecture. The serial design allows flexibility in channel count and, though each individual channel will be slower than a traditional parallel memory bus, total memory bandwidth will soar because many channels can run side by side.
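The channel arithmetic behind that claim can be sketched in a few lines. This is only an illustration: the figures used (a 20 GB/s parallel bus, 5 GB/s per serial channel, 16 channels) are made-up placeholders rather than Gen-Z specifications, and the function names are hypothetical. It also assumes channels scale independently, which real systems only approximate.

```python
import math


def aggregate_bandwidth(channels: int, per_channel_gbs: float) -> float:
    """Total bandwidth in GB/s, assuming every channel runs independently."""
    if channels < 0 or per_channel_gbs < 0:
        raise ValueError("channel count and bandwidth must be non-negative")
    return channels * per_channel_gbs


def channels_needed(target_gbs: float, per_channel_gbs: float) -> int:
    """Smallest number of serial channels that meets a target bandwidth."""
    if per_channel_gbs <= 0:
        raise ValueError("per-channel bandwidth must be positive")
    return math.ceil(target_gbs / per_channel_gbs)


# Placeholder numbers, NOT taken from any Gen-Z document.
PARALLEL_BUS_GBS = 20.0    # hypothetical parallel memory bus
SERIAL_CHANNEL_GBS = 5.0   # hypothetical single serial channel

break_even = channels_needed(PARALLEL_BUS_GBS, SERIAL_CHANNEL_GBS)
wide_config = aggregate_bandwidth(16, SERIAL_CHANNEL_GBS)
print(f"channels to match the parallel bus: {break_even}")
print(f"16 serial channels deliver: {wide_config} GB/s")
```

With these placeholder values, each serial channel is four times slower than the parallel bus, yet any configuration beyond four channels pulls ahead.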

Just as SAS extended connectivity beyond the short reach of SCSI, Gen-Z opens up the distance from the CPU to the memory module. It can be configured as a low-power signal to communicate from a CPU to on-module or nearby memory, saving a good part of the power currently used by DRAM, or the signals can be re-powered or translated to a network protocol such as Ethernet or InfiniBand to drive out over a copper or fiber fabric.

With memory using as much power as a CPU, current architectures are limited in DRAM capacity. The Gen-Z low-power approach will reduce memory power to around 20% of today’s level, which allows memories to have much larger capacity. At the same time, the increased bandwidth can be matched by an uptick in CPU core count, since the low-power driver transistors also reduce CPU heat production.

Cooler, faster systems with much more memory? These are great choices for in-memory database machines and servers with huge numbers of containers. As icing on the cake, some of the memory will be flash or 3D XPoint persistent storage. This non-volatile memory will initially be block-accessed storage, but the consortium is also looking at an advanced byte-level access mode, which would be much faster.

In byte-mode operation, register-to-memory CPU operations transfer data to non-volatile memory directly, without a complex, slow file-I/O stack and its minimum 4KB transfer. Achieving this requires quite a bit of system redesign, from compilers and link editors to cache management hardware. It also implies a partial rewrite of apps to take advantage of the instant-write feature, but the paybacks are enormous. Clearly, this is a major effort, and most likely it won’t appear in the first products.
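The difference between the two access models can be sketched from the application’s point of view. The example below is a rough analogy only: it uses an ordinary temporary file and Python’s standard `mmap` module as a stand-in for persistent memory, not any Gen-Z interface, and the function names and the "bytes moved" counts are illustrative assumptions. On a conventional system the operating system still pages data in 4KB units underneath the mapping, which is exactly the overhead true byte-addressable hardware would remove.

```python
import mmap
import os
import tempfile

BLOCK = 4096  # minimum transfer size in the block-access model


def block_update(path: str, offset: int, value: int) -> int:
    """Change one byte by reading and rewriting the whole 4 KB block.

    Returns the number of bytes the application had to move.
    """
    start = (offset // BLOCK) * BLOCK
    with open(path, "r+b") as f:
        f.seek(start)
        block = bytearray(f.read(BLOCK))
        block[offset - start] = value
        f.seek(start)
        f.write(block)
    return 2 * BLOCK  # one block read plus one block written


def byte_update(path: str, offset: int, value: int) -> int:
    """Change one byte with a single store into a memory mapping."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mapped:
            mapped[offset] = value  # looks like an ordinary memory write
            mapped.flush()          # ask the OS to persist the change
    return 1


def demo():
    """Run both styles against a scratch file standing in for NVM."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, bytes(4 * BLOCK))  # 16 KB of zeros
        os.close(fd)
        moved_by_block = block_update(path, 5000, 0xAB)
        moved_by_byte = byte_update(path, 5001, 0xCD)
        with open(path, "rb") as f:
            data = f.read()
        return moved_by_block, moved_by_byte, data[5000], data[5001]
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

Both calls end with the same one-byte change on the medium, but the block path moves 8,192 bytes through the I/O stack to get there while the mapped path issues a single store.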

The fabric aspect of the architecture is very important: it makes it possible to connect both DRAM and persistent memory to other servers. This fits the virtual SAN model used in hyperconverged solutions really well, avoiding the bottlenecks and latencies caused by passing data through PCIe and the system cache.

The fabric connections to other servers could be achieved by re-driving the Gen-Z serial connections, but since these are functionally similar to PCIe links, the more likely model is to use a translator chip to InfiniBand or Ethernet.

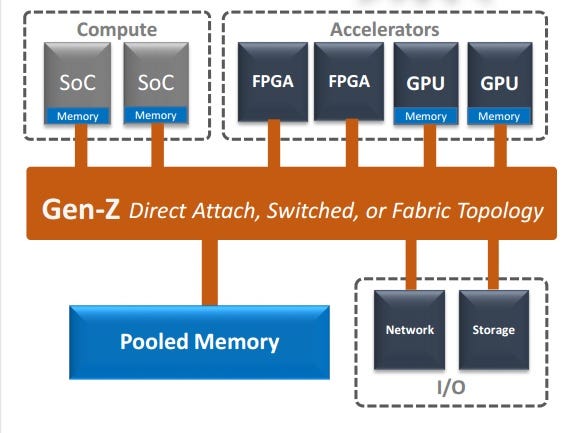


The Gen-Z architecture uses a switch chip to link the system components together. This opens up the possibility for closely coupled solutions with more than one CPU all sharing the same memory spaces. Even more important is the idea that these could just as easily be GPU chips or FPGA accelerators. Additional persistent memory in the form of solid-state drives could also use this interface, just like NVMe uses PCIe today.

While Gen-Z offers the promise of a huge boost in performance, there are complications. Intel, the elephant in the room, is not in the consortium. Moreover, two similar but competing alternatives to Gen-Z are being discussed. These are the Open Coherent Accelerator Processor Interface (OpenCAPI), driven by IBM with plans for a Power9 release next year, and the Cache Coherent Interconnect for Accelerators (CCIX).

It’s notable that most of the members of these special interest groups are the same players, all without Intel. With Gen-Z, OpenCAPI and CCIX in play, why three overlapping solutions? The aim is to pressure Intel toward a standard usable by all players, rather than an Intel-only solution or one on which the others have to play catch-up.

Does that mean Gen-Z is all a pipe dream? The reality is that one of these standards, or something like it, is coming to your servers around the end of next year. Add in on-chip memory, where the CPU becomes a module with a large amount of its own close-coupled DRAM, and systems in 2018 won’t look much like today’s servers. With really big increases in performance possible, this may be the way Moore’s Law plays out in the next decade.
