Facebook Breaks New Ground In Cold Storage

Here's how Facebook is taking an innovative approach to storing billions of user photos in its data centers.

May 12, 2015

With the changing mission of storage and the advent of a new tiering system with all-flash arrays and SSDs, we are starting to see major innovations in the way storage is delivered and managed. The cloud is a strong contributor to these changes, which are driven by cost and a requirement to operate at huge scale.

Facebook is at the forefront of these changes and recently gave us a very interesting look at its latest take on how to store the billions of photographs that users have uploaded. The company gets over 2 billion photo uploads per day, but prides itself on never erasing these photos, even though most users typically won’t be looking at them after a few months.

This is an example of the perennial cold storage problem: balancing the cost and space to keep valuable information (in this case people’s memories) without breaking the bank or buying up all the real estate in Kansas. Every major corporation has this problem, due to the constraints of HIPAA, SOX and other regulations, and governments hate to throw anything away -- just ask the NSA!

Facebook is one of the few organizations that's open about many of its creative initiatives. Backing the Open Compute Project with server, storage, and network designs is just part of this. The new release is on a much bigger scale. It represents a truly innovative approach to data centers specifically designed for cold storage, with very low power usage, an efficient use of hardware, and a tight footprint coupled with rapid data recovery.

In Facebook’s case, the need to deliver any photo within seconds means that old photos aren’t completely relegated to cold storage. A copy is kept in hot storage, to be delivered with the low latency users need.

Aiming to minimize power use, but able to recover large blocks of photos in a short time after a loss in their hot storage, these data centers needed disk storage “shells” that powered off most of the spinning disks. Facebook made this the cornerstone of its approach. The two storage servers in each rack were modified to only allow one drive to power up at any time.

This allowed a big reduction in fan count and fewer power supplies wasting power when idling, while the low throughput requirements allowed the servers to handle 240 drives each. As a further saving, power supply redundancy and uninterruptible power supplies aren’t provided, and backup power isn’t used either.
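The one-drive-at-a-time rule can be pictured with a small model. This is only an illustrative sketch, not Facebook's firmware: the `DriveShelf` class and its method names are hypothetical, and the real enforcement lives in the modified hardware and controller firmware.

```python
class DriveShelf:
    """Hypothetical model of a server that lets only one drive spin at a time."""

    def __init__(self, drive_count):
        self.drive_count = drive_count
        self.active = None  # index of the powered drive, or None if all are off

    def power_up(self, drive):
        if not 0 <= drive < self.drive_count:
            raise IndexError(f"no such drive: {drive}")
        # Refuse to spin up a second drive while another is powered.
        if self.active is not None and self.active != drive:
            raise RuntimeError("another drive is already powered")
        self.active = drive

    def power_down(self):
        self.active = None

    def read(self, drive):
        # Switch drives by powering the current one down first.
        if self.active != drive:
            self.power_down()
            self.power_up(drive)
        return f"data from drive {drive}"


shelf = DriveShelf(240)  # 240 drives per server, per the article
shelf.read(3)
shelf.read(7)            # drive 3 is powered down before drive 7 spins up
```

Because at most one drive draws spin-up and run power per server, the power and cooling budget can be sized for one active drive rather than for all 240.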

The result is that these data centers need just a sixth of the power delivered to a traditional data center, which is a huge saving in an era where IT gear is threatening to eat up the gross power production of the planet. Facebook's statistics are impressive: less than 2 kW for a fully loaded rack with 2 PB of storage, and 15x less power per square foot, which together allow the use of much lower cost power infrastructure.

This effort involved some top-to-bottom analysis of the storage systems. Changes had to be made to the controller firmware to prevent the powering up of multiple drives, and the appliances boot without spinning any drives up, limiting the possibility of power surges due to boot storms. With 15 4TB drives in each 2U appliance, a rack can hold two petabytes, and the whole data center can support an exabyte of storage in 500 racks.
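The capacity figures can be checked with some quick arithmetic. The sketch below uses the numbers quoted in this article (two storage servers per rack, 240 drives per server, 4 TB drives, 500 racks) and assumes decimal units (1 PB = 1,000 TB); the function names are illustrative only.

```python
DRIVES_PER_SERVER = 240   # from the article
SERVERS_PER_RACK = 2      # from the article
DRIVE_TB = 4              # from the article
RACKS = 500               # from the article


def rack_capacity_pb(drives_per_server=DRIVES_PER_SERVER,
                     servers=SERVERS_PER_RACK,
                     drive_tb=DRIVE_TB):
    """Raw capacity of one rack in petabytes (decimal units assumed)."""
    return drives_per_server * servers * drive_tb / 1000


def datacenter_capacity_eb(racks=RACKS):
    """Raw capacity of the whole facility in exabytes."""
    return racks * rack_capacity_pb() / 1000


print(rack_capacity_pb())        # about 1.92 PB, i.e. roughly 2 PB per rack
print(datacenter_capacity_eb())  # about 0.96 EB, i.e. roughly an exabyte
```

These are raw figures; usable capacity is lower once the erasure-coding overhead is taken into account.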

Facebook ran into interesting challenges, such as the one-ton weight of a loaded rack flattening the castors so that the racks couldn’t be rolled into position. At exabyte scale, this is actually a serious issue, since it means racks can’t be staged off the production floor or at a third-party site. It also limits the total number of drives in a rack.

Without redundancy in the power systems, a power outage means the storage will go offline. Facebook gets around this with software. First, the software stacks are designed to minimize recovery time and eliminate single points of failure.

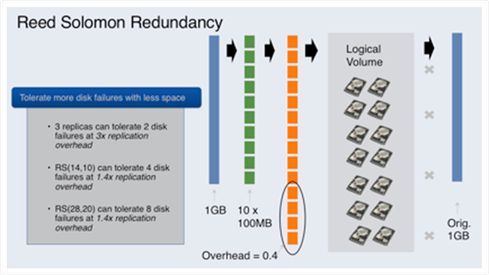

Figure 1:

Data is stored using erasure coding, where a few extra blocks containing an advanced form of parity are added to the data. This allows data to be recovered from as few as 10 out of 14 drives in a set, while having the inestimable value of allowing geographic dispersion of the drives to allow for a zone to be lost through power failure or other outage. All of the drives are scanned each month for hard errors or failures. This protects against a problem of idle data, which requires you to read it to see if it is still there.
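To put the 10-of-14 figure in perspective, the sketch below compares its storage overhead and loss tolerance with keeping three full copies. The three-copy baseline is an assumption added for comparison, not something Facebook describes, and the function names are illustrative.

```python
def erasure_profile(data_chunks, total_chunks):
    """Overhead and loss tolerance when any `data_chunks` of `total_chunks` suffice."""
    return {
        "overhead": total_chunks / data_chunks,          # raw bytes per byte of data
        "tolerated_losses": total_chunks - data_chunks,  # chunks that may be lost
    }


def replication_profile(copies):
    """The same two measures for plain replication (comparison baseline only)."""
    return {"overhead": float(copies), "tolerated_losses": copies - 1}


print(erasure_profile(10, 14))   # 1.4x raw storage, survives 4 lost chunks
print(replication_profile(3))    # 3.0x raw storage, survives 2 lost copies
```

Under these assumptions the erasure-coded layout tolerates more failures than triple replication while using less than half the raw disk space.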

Facebook's coding scheme is variable, depending on the changing environment. With two cold storage data centers open, full geo-diversity isn’t achieved (but remember, there is always a hot copy, too). When the third cold store opens, a ratio of 10:15 will probably be used and the data stored as three sets of five chunks, which would allow for five drive failures or a zone outage. Facebook is also looking at using a 10:12 scheme in each data center as a way to increase local reliability and reduce inter-zone recovery times.
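Why the third site matters can be shown with a short check of whether enough chunks survive the loss of any single zone. The 5/5/5 split follows the article; the even 7/7 split of a 10:14 stripe across two sites is an assumption made here for illustration.

```python
def survives_zone_loss(data_chunks, chunks_per_zone):
    """True if any one zone can be lost and `data_chunks` chunks still remain."""
    total = sum(chunks_per_zone)
    return all(total - lost >= data_chunks for lost in chunks_per_zone)


# 10:15 stored as three sets of five chunks: losing a zone leaves exactly 10.
print(survives_zone_loss(10, [5, 5, 5]))   # True

# 10:14 split evenly over two sites (assumed): losing a zone leaves only 7.
print(survives_zone_loss(10, [7, 7]))      # False
```

With two sites, no split of 14 chunks can keep 10 on one side without leaving the other side unable to recover alone, which is why full geo-diversity has to wait for the third data center.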

So, with all of this innovation, is anything left on the table to be done? The appliance has 15 drives in 2U -- by no means the densest solution on the market. Boxes with 80 drives in 4U are available, but there is a premium involved, installation requires two people, and rack slides and other extras add cost.

It should be possible, given the low power usage, to trade a couple of drives for a tiny power supply and controller, and get to 12 drives per U, instead of 7.5. According to Kestutis Patiejunas, one of the authors of Facebook's blog, this would increase the weight of the rack too much.

Figure 2:

Looking at the appliance photos, it’s obvious that a fair amount of work was needed to mount the drives in caddies prior to installation. It’s a fair bet that Facebook maintains them by either removing a shell or a whole rack and then sending failed gear off to be renovated or sold. Caddies cost a good few dollars and they could be dispensed with completely.

In fact, a challenge to the whole SSD industry is to reinvent drive mounting to reduce hardware and installation cost and increase packing density in these scale-out storage systems. With SSDs slated to take over leadership from spinning disks in 2016/2017, packing densities per appliance could go up, maybe to 24 drives per U in this type of box, without violating weight limits.

When the drive capacity sweet spot reaches 10 TB, these racks will be able to hold 5 PB each and, if the drive count issues are addressed, we could reach 15 PB per rack, while still holding to the same power profile. That’s plenty of room for improvement, but this is still a stellar effort. Hats off to Facebook for thinking outside the box and for sharing the results with us!
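These projections can be roughly reproduced. The sketch assumes the 480 drives per rack implied by the article's two servers with 240 drives each, decimal units, and reads the 15 PB figure as corresponding to a move from 7.5 to 24 drives per U; that reading is an interpretation, not something the article states outright.

```python
def projected_rack_pb(base_drives=480, drive_tb=10, density_gain=1.0):
    """Raw rack capacity in PB after scaling drive size and packing density."""
    return base_drives * density_gain * drive_tb / 1000


same_density = projected_rack_pb()                            # about 4.8 PB
higher_density = projected_rack_pb(density_gain=24 / 7.5)     # about 15.4 PB

print(same_density, higher_density)
```

Both results land close to the rounded 5 PB and 15 PB figures quoted above.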
