AWS Cloud Services Overview: What's New in 2018

Amazon offers a multitude of options in its AWS cloud services lineup, and much has changed since they were first introduced. This overview from AWS Administration - The Definitive Guide explains the most popular core services and what's new in the latest versions.

September 20, 2018

I still remember the time when I first started exploring AWS cloud services way back in 2009, when it was the early days for the likes of EC2 and CloudFront. Amazon was still adding new features to them and SimpleDB and VPC were just starting to take shape; the thing that really amazes me is how far the platform has come today. With more than 50 different solutions and service offerings ranging from big data analytics, to serverless computing, to data warehousing and ETL solutions, digital workspaces and code development services, AWS cloud services have got it all. It's not only about revenue and the number of customers, but how well a service adapts and evolves to changing times and demands.

Improvements in existing AWS cloud services

There have been quite a few improvements to the AWS cloud services that were covered in the first edition of AWS Administration - The Definitive Guide. Today, we will highlight a few of these essential improvements and understand their uses. To start off, let's look at some of the key enhancements made in EC2 over the past year or two.

Elastic Compute Cloud

Elastic Compute Cloud (EC2) is one of the oldest services in AWS, and yet it continues to evolve and add new features as the years progress. Some of the notable feature improvements and additions are mentioned here:

Introduction of the t2.xlarge and t2.2xlarge instances: T2 instances are a special type of instance, as they offer low-cost, burstable compute that is ideal for running general-purpose applications that don't need the CPU all the time, such as web servers, application servers, line-of-business (LOB) applications, and development environments, to name a few. The t2.xlarge and t2.2xlarge instance types provide 16 GB of memory and 4 vCPUs, and 32 GB of memory and 8 vCPUs, respectively.

Introduction of the I3 instance family: Although EC2 provides a comprehensive set of instance families, there was a growing demand for a specialized storage-optimized instance family suited to workloads such as relational or NoSQL databases, analytical workloads, data warehousing, Elasticsearch applications, and so on. Enter I3 instances! I3 instances use non-volatile memory express (NVMe) based SSDs that are built for very high I/O rates. The largest size provides 64 vCPUs, 488 GB of memory, and 15.2 TB of locally attached SSD storage.

This is not an exhaustive list in any way. If you would like to know more about the changes brought about in AWS, check out the AWS website.

Availability of FPGAs and GPUs

One of the key use cases for customers adopting the public cloud has been the availability of high-end processing units that are required to run HPC applications. One such new instance type added last year was the F1 instance, which comes equipped with field programmable gate arrays (FPGAs) that you can program to create custom hardware accelerations for your applications. Another welcome addition to EC2 was Elastic GPUs, which let you attach graphics acceleration to your applications at a lower cost than running a full GPU instance. Elastic GPUs are ideal if you need a small amount of GPU for graphics acceleration, or have applications that could benefit from some GPU but also require high amounts of compute, memory, or storage.

Simple Storage Service

Similar to EC2, Simple Storage Service (S3) has had its own share of new features and support added to it. Some of these are explained here:

S3 Object Tagging: S3 Object Tagging is like any other tagging mechanism provided by AWS, and is commonly used for managing and controlling access to your S3 resources. The tags are simple key-value pairs that you can reference in IAM policies for your S3 resources, in S3 lifecycle policies, and in rules that manage transitions of objects between storage classes.
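To make the tag-driven policies a little more concrete, here is a minimal Python sketch that only builds the two documents as plain dictionaries; it does not call AWS. The helper names, the bucket name, and the tag values are hypothetical. The lifecycle rule follows the shape accepted by the S3 lifecycle configuration API, and the IAM policy relies on the `s3:ExistingObjectTag/<key>` condition key.

```python
import json


def lifecycle_rule_for_tag(key: str, value: str, days: int, storage_class: str) -> dict:
    """Build one lifecycle rule that transitions objects carrying a given tag."""
    return {
        "ID": f"transition-{key}-{value}",
        "Filter": {"Tag": {"Key": key, "Value": value}},
        "Status": "Enabled",
        "Transitions": [{"Days": days, "StorageClass": storage_class}],
    }


def read_policy_for_tag(bucket: str, key: str, value: str) -> dict:
    """Build an IAM policy that allows GetObject only on objects with the tag."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    "StringEquals": {f"s3:ExistingObjectTag/{key}": value}
                },
            }
        ],
    }


# Hypothetical values, for illustration only.
rule = lifecycle_rule_for_tag("project", "archive", 30, "STANDARD_IA")
policy = read_policy_for_tag("example-bucket", "classification", "public")
print(json.dumps({"Rules": [rule]}, indent=2))
print(json.dumps(policy, indent=2))
```

Keeping the documents as data makes them easy to review before handing them to whichever SDK or CLI you use to apply them.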

S3 Inventory: S3 Inventory is a feature provided with the sole purpose of cataloging the objects in a bucket and delivering that catalog as a usable CSV file for further analysis and inventorying. Using S3 Inventory, you can now extract a list of all objects present in your bucket, along with their metadata, on a daily or weekly basis.
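As a rough illustration of the "further analysis" step, the sketch below totals object sizes per top-level prefix from an inventory-style CSV. It assumes the report has already been downloaded and decompressed, that it has no header row, and that the column names come from the `fileSchema` field of the accompanying manifest; the sample rows and the `bytes_per_prefix` helper are made up for this example.

```python
import csv
import io
from collections import defaultdict

# Column order as it might appear in the manifest's fileSchema (assumed).
FILE_SCHEMA = "Bucket, Key, Size, StorageClass"

# Made-up sample rows standing in for a real inventory report.
SAMPLE_REPORT = (
    '"example-bucket","logs/2018/09/app.log","2048","STANDARD"\n'
    '"example-bucket","logs/2018/09/web.log","1024","STANDARD"\n'
    '"example-bucket","images/logo.png","512","STANDARD_IA"\n'
)


def bytes_per_prefix(report_csv: str, schema: str) -> dict:
    """Sum object sizes grouped by the first path segment of each key."""
    columns = [name.strip() for name in schema.split(",")]
    reader = csv.DictReader(io.StringIO(report_csv), fieldnames=columns)
    totals = defaultdict(int)
    for row in reader:
        prefix = row["Key"].split("/", 1)[0]
        totals[prefix] += int(row["Size"])
    return dict(totals)


print(bytes_per_prefix(SAMPLE_REPORT, FILE_SCHEMA))
```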

S3 Analytics: A lot of work has gone into making S3 more than just another infinitely scalable storage service. S3 Analytics gives end users a way to analyze storage access patterns and helps them choose the right storage class based on the results. You can enable this feature by simply setting a storage class analysis policy, either filtered by prefix or object tags, or applied to the entire bucket. Once enabled, the policy monitors the storage access patterns and provides daily visualizations of your storage usage in the AWS Management Console. You can even export these results to an S3 bucket and analyze them using other business intelligence tools of your choice, such as Amazon QuickSight.

S3 CloudWatch metrics: It has been a long time coming, but it is finally here! You can now leverage 13 new CloudWatch metrics specifically designed to work with your S3 buckets and objects. You can receive one-minute CloudWatch metrics, set CloudWatch alarms, and access CloudWatch dashboards to view real-time operations and the performance of your S3 resources, such as total bytes downloaded, the number of 4xx HTTP responses, and so on.

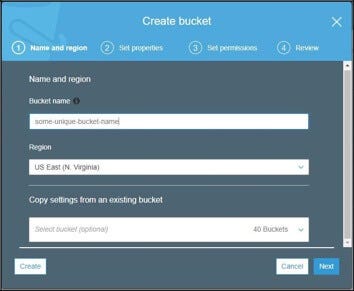

Brand new dashboard: Although the dashboards and structures of the AWS Management Console change from time to time, it is the new S3 dashboard that I'm really fond of. The object tagging and the storage analysis policy features are all now provided using the new S3 dashboard, along with other impressive and long-awaited features, such as searching for buckets using keywords and the ability to copy bucket properties from an existing bucket while creating new buckets, as depicted in the following screenshot:

AWS-bucket.jpg

Amazon S3 transfer acceleration: This feature allows you to move large workloads across geographies into S3 quickly. It leverages Amazon CloudFront edge locations in conjunction with S3 to enable substantially faster data uploads (AWS cites gains of 50 to 500 percent for long-distance transfers of larger objects), with no firewalls to open and no upfront fees to pay.
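Once acceleration is enabled on a bucket, clients upload through a dedicated endpoint of the form `<bucket>.s3-accelerate.amazonaws.com`. The small sketch below only constructs that URL; the `accelerate_endpoint` helper and the bucket name are hypothetical, and the validation is a simplified check reflecting the requirement that accelerated bucket names be DNS-compliant and contain no periods.

```python
import re

# 3 to 63 characters: lowercase letters, digits, and hyphens, with no periods.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9-]{1,61}[a-z0-9]$")


def accelerate_endpoint(bucket: str) -> str:
    """Return the transfer acceleration endpoint URL for a bucket name."""
    if not _BUCKET_RE.match(bucket):
        raise ValueError(f"bucket name not usable with acceleration: {bucket!r}")
    return f"https://{bucket}.s3-accelerate.amazonaws.com"


print(accelerate_endpoint("example-bucket"))
```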

Virtual Private Cloud

Similar to other services, Virtual Private Cloud (VPC) has seen quite a few functionalities added to it over the past years; a few important ones are highlighted here:

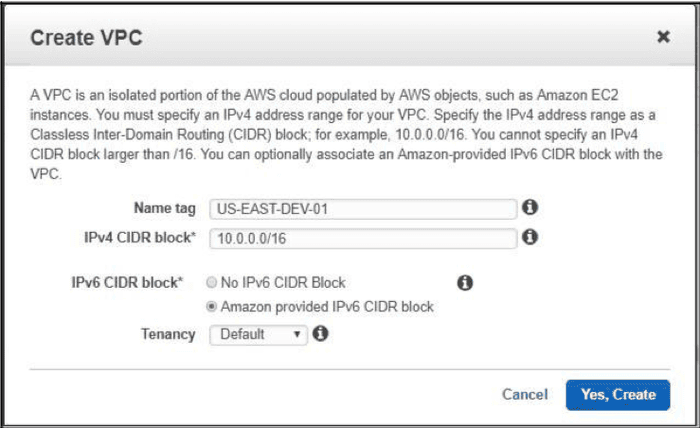

Support for IPv6: With the exponential growth of the IT industry as well as the internet, it was only a matter of time before VPC too started supporting IPv6. Today, IPv6 support is available across all AWS regions, and it works with services such as EC2 and S3. Enabling IPv6 for your applications and instances is an extremely easy process. All you need to do is enable the IPv6 CIDR block option, as depicted in the VPC creation wizard:

AWS-create-VPC.png
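When you enable the option, the VPC receives a /56 IPv6 block, and each subnet is assigned a /64 from it. The following sketch uses Python's standard `ipaddress` module to show how that carving works; the prefix is taken from the IPv6 documentation range and is only a stand-in for whatever block AWS would actually assign.

```python
import ipaddress

# Stand-in /56 from the 2001:db8::/32 documentation range (not a real VPC block).
vpc_block = ipaddress.ip_network("2001:db8:1234:1a00::/56")

# Each VPC subnet takes one /64 out of the /56.
subnets = list(vpc_block.subnets(new_prefix=64))

print(len(subnets))   # number of /64 subnets available
print(subnets[0])     # first subnet block
print(subnets[-1])    # last subnet block
```

A /56 leaves 8 bits for subnet numbering, so there is room for 256 subnets per VPC block.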

DNS resolution for VPC Peering: With DNS resolution enabled for your VPC peering, you can now resolve public DNS hostnames to private IP addresses when queried from any of your peered VPCs. This actually simplifies the DNS setup for your VPCs and enables the seamless extension of your network environments to the cloud.

VPC endpoints for DynamoDB: Yet another amazing feature provided for VPCs is support for endpoints for your DynamoDB tables. Why is this so important all of a sudden? Well, for starters, you don't require internet gateways or NAT instances attached to your VPCs if you are leveraging the endpoints for DynamoDB. This saves costs and keeps the traffic between your application and the database local to the AWS internal network, unlike previously, when the traffic from your app had to traverse the internet in order to reach your DynamoDB tables. Secondly, endpoints for DynamoDB virtually eliminate the need for maintaining complex firewall rules to secure your VPC. And thirdly, and most importantly, it's free!
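As a sketch of what creating such an endpoint involves, the snippet below assembles the request parameters as a dictionary: the VPC, the regional DynamoDB service name, the route tables that should send traffic through the endpoint, and an optional endpoint policy. Nothing here talks to AWS; the helper name, the IDs, the account number, and the choice of allowed actions are all hypothetical.

```python
import json


def dynamodb_endpoint_request(vpc_id, region, route_table_ids, table_arn):
    """Assemble parameters for a DynamoDB VPC endpoint scoped to one table."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:Query"],
                "Resource": table_arn,
            }
        ],
    }
    return {
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.dynamodb",
        "RouteTableIds": list(route_table_ids),
        "PolicyDocument": json.dumps(policy),
    }


# Hypothetical identifiers, for illustration only.
request = dynamodb_endpoint_request(
    "vpc-0123456789abcdef0",
    "us-east-1",
    ["rtb-0123456789abcdef0"],
    "arn:aws:dynamodb:us-east-1:111122223333:table/Orders",
)
print(json.dumps(request, indent=2))
```

Restricting the endpoint policy to a single table is one way to reduce the firewall-style rules you would otherwise maintain elsewhere.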

CloudWatch

CloudWatch has undergone a lot of new and exciting changes and feature additions compared to what it originally provided as a service a few years back. Here's a quick look at some of its latest announcements:

CloudWatch events: One of the most anticipated and useful features added to CloudWatch is CloudWatch events! Events are a way for you to respond to changes in your AWS environment in near real time. This is made possible by event rules that you configure, along with a corresponding set of actions that are performed when that particular event is triggered. For example, you could invoke a simple back-up or clean-up script whenever an instance is powered off at the end of the day. Alternatively, you can schedule your event rules to be triggered at a particular interval of time during the day, week, month, or even year! Now that's really awesome!
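The two kinds of rule described above can be sketched as follows. The event pattern and the schedule expression use the documented CloudWatch Events formats; the `matches` function is a deliberately simplified, hypothetical stand-in for the service's matching logic (exact-value matching only), included just to show how a pattern selects events.

```python
# Rule 1: react when an EC2 instance reaches the "stopped" state.
STOPPED_INSTANCE_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped"]},
}

# Rule 2: run on a schedule instead (18:00 UTC, Monday to Friday).
END_OF_DAY_SCHEDULE = "cron(0 18 ? * MON-FRI *)"


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: lists hold allowed values, dicts are matched recursively."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Made-up event resembling an instance state-change notification.
sample_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}
print(matches(STOPPED_INSTANCE_PATTERN, sample_event))
```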

High-resolution custom metrics: We have all felt the need to monitor our applications and resources running on AWS in near real time. However, with the smallest configurable monitoring interval set at one minute, this was always going to be a challenge. But not now! With the introduction of high-resolution custom metrics, you can now monitor your applications down to a 1-second resolution, and high-resolution alarms can evaluate those metrics over periods as short as 10 seconds. The best part of all this is that there is no special difference between the configuration or use of a standard alarm and that of a high-resolution one. Both alarms can perform the exact same functions; the high-resolution one simply reacts much faster.
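In practice, the difference comes down to one field on each data point and the period you give the alarm. The sketch below builds a metric datum in the shape used when publishing custom metrics, where `StorageResolution` is 1 for high resolution and 60 for standard, and checks whether an alarm period is acceptable (10 or 30 seconds for high-resolution metrics, otherwise a multiple of 60). The helper names and the metric name are hypothetical.

```python
from datetime import datetime, timezone


def metric_datum(name: str, value: float, high_resolution: bool, unit: str = "Count") -> dict:
    """Build one custom metric data point."""
    return {
        "MetricName": name,
        "Timestamp": datetime.now(timezone.utc),
        "Value": float(value),
        "Unit": unit,
        # 1 = high resolution (per second), 60 = standard resolution.
        "StorageResolution": 1 if high_resolution else 60,
    }


def valid_alarm_period(period_seconds: int, high_resolution: bool) -> bool:
    """Sub-minute periods (10 or 30 seconds) only apply to high-resolution metrics."""
    if period_seconds in (10, 30):
        return high_resolution
    return period_seconds > 0 and period_seconds % 60 == 0


datum = metric_datum("CheckoutLatencyMs", 182, high_resolution=True, unit="Milliseconds")
print(datum["StorageResolution"], valid_alarm_period(10, high_resolution=True))
```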

CloudWatch dashboard widgets: A lot of users have had trouble adopting CloudWatch as their centralized monitoring solution due to its inability to create custom dashboards. But all that has now changed, as CloudWatch today supports the creation of highly customizable dashboards based on your application's needs. It also supports out-of-the-box widgets, such as the number widget, which shows the latest data point of a monitored metric (for example, the number of EC2 instances being monitored), and the stacked graph, which provides a handy visualization of individual metrics and their combined impact.
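Dashboards are defined by a JSON body that lists widgets on a grid. Here is a minimal sketch that builds such a body with one number widget (`singleValue` view) and one stacked graph (`timeSeries` view with `stacked` set). The helper names, instance IDs, region, and layout values are assumptions chosen for illustration.

```python
import json


def metric_widget(title, metrics, view, x, y, stacked=False, region="us-east-1"):
    """Describe one metric widget placed at grid position (x, y)."""
    return {
        "type": "metric",
        "x": x,
        "y": y,
        "width": 12,
        "height": 6,
        "properties": {
            "title": title,
            "metrics": metrics,
            "view": view,
            "stacked": stacked,
            "region": region,
        },
    }


def build_dashboard_body(instance_ids):
    """Return the dashboard body as a JSON string."""
    cpu = [["AWS/EC2", "CPUUtilization", "InstanceId", i] for i in instance_ids]
    widgets = [
        metric_widget("Latest CPU", cpu[:1], "singleValue", x=0, y=0),
        metric_widget("CPU by instance", cpu, "timeSeries", x=12, y=0, stacked=True),
    ]
    return json.dumps({"widgets": widgets})


# Hypothetical instance IDs.
print(build_dashboard_body(["i-0aaaaaaaaaaaaaaaa", "i-0bbbbbbbbbbbbbbbb"]))
```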

Elastic Load Balancer

One of the most significant and useful additions to ELB over the past year has been the introduction of the Application Load Balancer (ALB). Unlike its predecessor, the original ELB (now called the Classic Load Balancer), the ALB is a strict Layer 7 (application) load balancer designed to support content-based routing as well as applications that run on containers. The ALB is also designed to provide additional visibility into the health of the target EC2 instances and containers. Ideally, ALBs would be used to dynamically balance loads across a fleet of containers running scalable web and mobile applications.
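Content-based routing is easiest to picture as an ordered list of rules, each mapping a path pattern to a group of targets, with a default at the end. The sketch below is a toy in-memory model of that idea, not the ALB API: the rule set and target group names are hypothetical, and Python's `fnmatchcase` only approximates the load balancer's wildcard matching.

```python
from fnmatch import fnmatchcase

# (priority, path pattern, target group); lower priority numbers are checked first.
RULES = [
    (10, "/api/*", "api-containers"),
    (20, "/images/*", "static-assets"),
]
DEFAULT_TARGET_GROUP = "web-frontend"


def route(path: str) -> str:
    """Return the target group of the first rule whose pattern matches the path."""
    for _priority, pattern, target_group in sorted(RULES):
        if fnmatchcase(path, pattern):
            return target_group
    return DEFAULT_TARGET_GROUP


for request_path in ("/api/orders/42", "/images/logo.png", "/index.html"):
    print(request_path, "->", route(request_path))
```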

This is just the tip of the iceberg compared to the vast plethora of services and functionality that AWS has added to its services in just a span of one year! Let's quickly glance through the various services you may find useful.

Introduction of newer AWS cloud services

The first edition of AWS Administration - The Definitive Guide covered a lot of the core AWS services, such as EC2, EBS, Auto Scaling, ELB, RDS, S3, and so on. In addition, you should explore the many services and functionalities that work in conjunction with the core services:

EC2 Systems Manager: EC2 Systems Manager is a service that provides a lot of add-on features for managing your compute infrastructure. Each compute entity that's managed by EC2 Systems Manager is called a managed instance, and this can be either an EC2 instance or an on-premises machine! EC2 Systems Manager provides out-of-the-box capabilities to create and baseline patches for operating systems, automate the creation of AMIs, run configuration scripts, and much more!

Elastic Beanstalk: Beanstalk is a powerful yet simple service designed for developers to easily deploy and scale their web applications. At the moment, Beanstalk supports web applications developed using Java, .NET, PHP, Node.js, Python, Ruby, and Go. Developers simply design and upload their code to Beanstalk, which automatically takes care of the application's load balancing, auto-scaling, monitoring, and so on. At the time of writing, Elastic Beanstalk supports deploying your apps either in Docker containers or directly on EC2 instances, and the best part of using this service is that it's completely free! You only need to pay for the underlying AWS resources that you consume.

Elastic File System: The simplest way to define Elastic File System, or EFS, is an NFS share on steroids! EFS provides simple and highly scalable file storage as a service designed to be used with your EC2 instances. You can have multiple EC2 instances attach themselves to a single EFS mount point which can provide a common data store for your applications and workloads.

WAF and Shield: Quite a few security and compliance services provide an additional layer of protection besides your standard VPC. Two such services we will learn about are WAF and Shield. WAF, or Web Application Firewall, is designed to safeguard your applications against web exploits that could impact their availability and security. Using WAF, you can create custom rules that safeguard your web applications against common attack patterns, such as SQL injection, cross-site scripting, and so on.

Similar to WAF, Shield is also a managed service; it protects your website or web application against DDoS attacks.

CloudTrail and Config: CloudTrail is yet another service that we will learn about in the coming chapters. It is designed to log and monitor your AWS account and infrastructure activities. This service comes in really handy when you need to govern your AWS accounts against compliance requirements, audits, and standards, and take the necessary action on any violations. Config, on the other hand, provides a very similar set of features; however, it specializes in assessing and auditing the configurations of your AWS resources. The two services are used together to provide compliance and governance, which help in operational analysis, troubleshooting issues, and meeting security demands.

Cognito: Cognito is an awesome service that simplifies building sign-up and sign-in pages for your web and even mobile applications. You also get options to integrate social identity providers, such as Facebook, Twitter, and Amazon, as well as SAML-based identity solutions.

CodeCommit, CodeBuild, and CodeDeploy: AWS provides a really rich set of tools and services for developers, which are designed to deliver software rapidly and securely. At the core of this are three services that we will be learning and exploring in this book, namely CodeCommit, CodeBuild, and CodeDeploy. As the names suggest, the services provide you with the ability to securely store and version control your application's source code, as well as to automatically build, test, and deploy your application to AWS or your on-premises environment.

SQS and SNS: SQS, or Simple Queue Service, is a fully managed queuing service provided by AWS, designed to decouple your microservices-based or distributed applications. You can use SQS to send, store, and receive messages between different applications at high volumes without any infrastructure management. SNS, or Simple Notification Service, is used primarily as a pub/sub messaging service or as a notification service. You can additionally use SNS to trigger custom events for other AWS services, such as EC2, S3, and CloudWatch.
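The way the two services complement each other can be illustrated with a purely in-memory toy model: a topic fans each published message out to every subscribed queue, and each consumer drains its own queue at its own pace. This is not the SQS or SNS API; the `Topic` class and the queue names are hypothetical, and standard-library deques stand in for real queues.

```python
from collections import deque


class Topic:
    """Toy pub/sub topic that copies each message to every subscribed queue."""

    def __init__(self):
        self._queues = []

    def subscribe(self, queue: deque) -> None:
        self._queues.append(queue)

    def publish(self, message: dict) -> int:
        """Deliver the message to all subscribers and return the delivery count."""
        for queue in self._queues:
            queue.append(dict(message))  # each subscriber gets its own copy
        return len(self._queues)


# Two independent consumers, each with its own queue.
billing_queue, audit_queue = deque(), deque()
orders_topic = Topic()
orders_topic.subscribe(billing_queue)
orders_topic.subscribe(audit_queue)

orders_topic.publish({"order_id": 1, "total": 25})

# The producer never talks to the consumers directly.
print(billing_queue.popleft(), len(audit_queue))
```

Because the producer only knows about the topic, consumers can be added or removed without touching the publishing code, which is the decoupling the paragraph above describes.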

EMR: Elastic MapReduce is a managed Hadoop-as-a-service offering that provides a clustered platform on EC2 instances for running the Apache Hadoop and Apache Spark frameworks. EMR is highly useful for crunching massive amounts of data, as well as for transforming and moving large quantities of data from one AWS data source to another. EMR also provides a lot of flexibility and scalability to your workloads, with the ability to resize your cluster depending on the amount of data being processed at a given point in time. It is also designed to integrate effortlessly with other AWS services, such as S3 for storing the data, CloudWatch for monitoring your cluster, CloudTrail to audit the requests made to your cluster, and so on.

Redshift: Redshift is a petabyte-scale, managed data warehousing service in the cloud. Similar to its counterpart, EMR, Redshift also works on the concept of clustered EC2 instances, to which you upload large datasets and on which you run your analytical queries.

Data Pipeline: Data Pipeline is a managed service that lets end users process and move datasets from one AWS service to another, as well as from on-premises datastores into AWS storage services, such as RDS, S3, DynamoDB, and even EMR! You can schedule data migration jobs, track dependencies and errors, and even define preconditions and activities that specify what actions Data Pipeline has to take against the data, such as running it through an EMR cluster, performing a SQL query over it, and so on.

IoT and Greengrass: AWS IoT and Greengrass are two really amazing services that are designed to collect and aggregate various device sensor data and stream that data into the AWS cloud for processing and analysis. AWS IoT provides a scalable and secure platform with which you can connect billions of sensor devices to the cloud or other AWS services, and then gather, process, and analyze the data without having to worry about the underlying infrastructure or scalability needs. Greengrass is an extension of the AWS IoT platform and essentially provides a mechanism that allows you to run and manage data pre-processing jobs directly on the sensor devices.

This has been a quick recap of some of the key features and additions included in the core AWS services over the past few years. Remember, however, that this is in no way a complete list! There's a lot more to cover and learn. For more in-depth coverage, read AWS Administration - The Definitive Guide.

This tutorial is an excerpt from AWS Administration - The Definitive Guide by Yohan Wadia, published by Packt.
