Apples for Apples: Measuring the Cost of Public vs. Private Clouds

Before deciding where to place workloads, enterprises need a consistent way to compare public and private cloud costs.

October 9, 2023

If the analysts are correct, we're at or nearing an inflection point: the point at which more than half of enterprise IT spend goes to the cloud.

It's no surprise, then, that IT leaders are increasingly focused on both the absolute cost and the effectiveness of their organizations' cloud spend. For some, this has proved more than a challenge, because of one of the very reasons cloud became so popular in the first place: engineers can swipe a credit card and spin up effectively limitless resources, committing to elastic levels of spending, often under the radar of any central budgetary control. This has hampered many organizations' ability both to pin down exactly what these costs are and where they come from, and to plan for a more strategic relationship with the hyperscalers.

Enter FinOps and the Cloud Centers of Excellence

A portmanteau of "Finance" and "DevOps," FinOps is a relatively recent discipline: a collection of best practices and tooling whose ultimate aim is to optimize the business value derived from cloud spending. It originally grew out of user group meetings for the cloud cost optimization platform Cloudability (now part of IBM, following IBM's recent purchase of Apptio, which had itself acquired Cloudability). The practice is now being advanced by the independent FinOps Foundation, a project within the Linux Foundation.

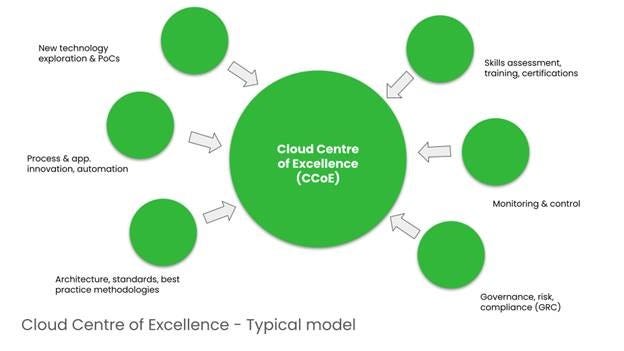

While FinOps may provide the cost optimization piece of the puzzle, it has also given rise, in parallel, to what are termed Cloud Centers of Excellence (CCoEs). These reportedly now operate, formally or informally, in the majority of larger organizations and are being rapidly adopted elsewhere[1]. CCoEs bring a strategic lens to cloud adoption, weighing technical, financial, regulatory, and wider business factors in the organization's longer-term relationship with the cloud providers it chooses to engage.


Comparing “apples to apples” needs FOCUS

Underpinning all of this, at a very practical and tactical level, is the ability to ascertain exactly what your organization is doing in each cloud provider, how much it costs, and why the costs are what they are. And if the objective is not just to optimize spend and value within a single cloud provider, but also to compare the relative benefits of moving to a different one, then there must be a consistent method of comparison.

According to Forrester’s Tracy Woo, customers have been asking the hyperscalers for exactly this for around a decade, but until very recently those requests went unanswered.

That’s the objective behind a recent open-source standards project called FOCUS: the FinOps Open Cost and Usage Specification. FOCUS is a Linux Foundation technical project sponsored by the FinOps Foundation, with its first official release slated for the end of 2023. The project will define a specification that allows cost and usage data to be produced using a common schema and "FinOps-compatible" terminology. It should then be possible to unify billing data across all providers easily and to drive better business decisions based on the fully burdened cost of using the cloud.
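FOCUS itself defines the schema. The short Python sketch below only illustrates the general idea of normalizing billing exports into one shape before comparing them. Everything in it is hypothetical: the two "provider" export formats, their field names, the category mapping, and the `CostRecord` fields are invented for illustration and are not taken from the FOCUS specification or from any real provider's billing export.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical shared vocabulary: each provider's own category names are
# mapped onto common terms (NOT the FOCUS column names or values).
CATEGORY_MAP = {
    "ProviderA": {"Compute Instance": "Compute", "Storage": "Storage"},
    "ProviderB": {"Virtual Machines": "Compute", "Blob Storage": "Storage"},
}

@dataclass(frozen=True)
class CostRecord:
    """One normalized line item in a hypothetical common schema."""
    provider: str
    service_category: str
    billed_cost: float
    currency: str

def from_provider_a(row: dict) -> CostRecord:
    # Hypothetical "Provider A" export: cost is a string in currency units.
    return CostRecord(
        provider="ProviderA",
        service_category=CATEGORY_MAP["ProviderA"][row["product_family"]],
        billed_cost=float(row["cost"]),
        currency=row["currency_code"],
    )

def from_provider_b(row: dict) -> CostRecord:
    # Hypothetical "Provider B" export: cost is an integer number of cents.
    return CostRecord(
        provider="ProviderB",
        service_category=CATEGORY_MAP["ProviderB"][row["meterCategory"]],
        billed_cost=row["costCents"] / 100,
        currency=row["billingCurrency"],
    )

def totals_by_category(records: list[CostRecord]) -> dict[str, float]:
    """Sum billed cost per common category across all providers."""
    currencies = {r.currency for r in records}
    if len(currencies) > 1:
        raise ValueError(f"mixed currencies need conversion first: {currencies}")
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.service_category] += r.billed_cost
    return dict(totals)

records = [
    from_provider_a({"product_family": "Compute Instance", "cost": "120.50", "currency_code": "USD"}),
    from_provider_b({"meterCategory": "Virtual Machines", "costCents": 9950, "billingCurrency": "USD"}),
    from_provider_b({"meterCategory": "Blob Storage", "costCents": 2000, "billingCurrency": "USD"}),
]
print(totals_by_category(records))  # → {'Compute': 220.0, 'Storage': 20.0}
```

The design point is that comparison happens only after every record has been mapped into a shared vocabulary. Mixed currencies are rejected rather than silently summed, since converting them is a separate decision.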

But what about private cloud?

Well, for the moment, at least, this seems to be the catch.

As it appears to me, the focus of FOCUS, like that of FinOps as a whole today, is very much on solving the problem within the public cloud providers. Yet this is not only a multi-cloud world, with almost all large organizations working with multiple public cloud vendors today. More importantly in this context, around three-quarters of these organizations also run private clouds, or some “Frankencloud” approximation of one.

The question, then, is clear. If the objective is to make a true "apples to apples" comparison to decide where workloads are best placed, that analysis is incomplete without fully considering the efficient utilization of assets that are likely already bought and paid for and already consuming space, power, and cooling in your own data center or co-lo. Those assets are there whether you utilize them at 20% or 80% of their capacity. The incremental cost of running additional workloads on them, over and above the ones you already have to run on-premises, may be practically zero.
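To make the utilization argument concrete, here is a minimal Python sketch contrasting two ways of costing private capacity. All figures, the abstract capacity "unit," the public per-unit price, and the `variable_cost_per_unit` parameter (standing in for marginal costs such as extra power) are hypothetical assumptions for illustration, not data from any real estate or provider.

```python
from typing import Optional

def effective_unit_cost(fixed_annual_cost: float, capacity_units: float,
                        utilization: float) -> float:
    """Annual fixed cost spread over the capacity units actually in use."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return fixed_annual_cost / (capacity_units * utilization)

def incremental_private_cost(units_needed: float, capacity_units: float,
                             utilization: float,
                             variable_cost_per_unit: float) -> Optional[float]:
    """Marginal annual cost of adding a workload to existing private capacity.

    Fixed costs (hardware, space, cooling already paid for) are treated as
    sunk, so only the hypothetical per-unit variable cost is counted.
    Returns None when the workload does not fit in spare capacity, because
    buying new hardware is not modeled here.
    """
    spare_units = capacity_units * (1 - utilization)
    if units_needed > spare_units:
        return None
    return units_needed * variable_cost_per_unit

# Hypothetical figures, for illustration only.
FIXED_COST = 1_000_000.0   # annual cost of the private cloud estate
CAPACITY = 1_000.0         # total capacity in abstract "units"
PUBLIC_PRICE = 1_500.0     # assumed public cloud price per unit per year
WORKLOAD_UNITS = 100.0     # size of the workload being placed

for util in (0.2, 0.8):
    per_unit = effective_unit_cost(FIXED_COST, CAPACITY, util)
    print(f"utilization {util:.0%}: effective private cost/unit = {per_unit:,.0f}")

print("public cost:", WORKLOAD_UNITS * PUBLIC_PRICE)
print("private incremental cost at 20% utilization:",
      incremental_private_cost(WORKLOAD_UNITS, CAPACITY, 0.2,
                               variable_cost_per_unit=100.0))
```

The two functions answer different questions. `effective_unit_cost` shows how the same fixed spend looks four times more expensive per used unit at 20% utilization than at 80%. `incremental_private_cost` treats that spend as sunk and counts only what an extra workload adds while spare capacity remains. Which view a comparison uses can change the placement decision.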

Andrew Moloney is the Chief Strategy Officer at SoftIron.
