Running Containers At Scale – The Best Data Path to Success

Understanding the route that data takes within the system can reveal both the potential source of lower-than-desired performance and its solution.

January 14, 2022

Kubernetes and other container orchestration platforms are rapidly being incorporated into mainstream infrastructures. With most line-of-business applications, migrating from traditional data center technology to container-based deployments is a discrete and relatively straightforward task. However, nothing accentuates the shortcomings of a storage approach more than scale, and when more demanding core applications such as databases or fast data analytics workloads come into consideration, the matter becomes complicated.

Firstly, IT teams quickly realize that containerization imposes stricter requirements on the underlying infrastructure, including networking, storage, and fault tolerance. While Kubernetes (K8s) has made considerable progress in these areas, demanding applications remain subject to drop-offs in performance, both on-premises and in the cloud.

Secondly, Kubernetes networking doesn't provide low and predictable latency, even at medium scale, for high-performance applications ported to containerized environments.

Careful consideration of the CPU, bandwidth, and storage capacity required for a smooth-running IT system is always important for optimizing a deployment. However, understanding the route that data takes within the system can reveal both the potential source of lower-than-desired performance and its solution.

Three major approaches exist for providing storage for containerized workloads.

On-premises and co-located appliances/storage clusters. While on-premises storage is often the most feature-rich option and relatively straightforward to extend from existing infrastructure, it can be problematic for cloud- and container-native deployments. In these on-premises implementations, the storage lives in parallel to the Kubernetes system. K8s connects the application to the storage through a container storage interface (CSI) plug-in, which works by directly connecting the application containers to the external storage, completely bypassing the K8s-controlled network.

Container-only storage software. Solutions that were born as containers and are implemented using them have the advantage of being purpose-built for containers. These products take a "feature-first" approach, which helps assure IT teams that functionality such as thin provisioning and deduplication is preserved. However, performance, both at scale and in production, again hinges on the data path. These solutions provide access to storage devices via storage controllers that are themselves implemented as containers, so the whole data path passes through K8s networking, which adds latency.

Software-Defined Storage running natively in Kubernetes. Fewer than a dozen pure software-defined storage options exist on the market, and among them, only a handful run natively in Kubernetes. These include stand-alone bare-metal software-defined storage offerings that were ported to work in Kubernetes and also support hybrid on-premises and cloud implementations.

Software-defined storage that's native in Kubernetes combines the advantages of the two approaches above to deliver both performance and scale. It is container-native, and some implementations isolate the data path from Kubernetes, so performance is better than that of the CSPs in the container-only storage software approach.

This enables data center architects to obtain the best of traditional on-premises architectures and container-only storage. To ensure latency predictability, the data path sits beneath Kubernetes, between the containers and the NVMe SSDs: it moves from the kernel to the client device driver, then to the target driver, which has direct access to the NVMe drives.
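The three data paths described above can be put side by side in a short sketch. The stage names are taken from the descriptions in this article; the class and field names are illustrative assumptions, and the model says nothing about actual latencies.

```python
from dataclasses import dataclass
from typing import Tuple

# Label used for the hop the article identifies as the latency concern.
K8S_NETWORK = "Kubernetes networking"


@dataclass(frozen=True)
class Approach:
    """One storage approach and the stages its data path passes through."""
    name: str
    data_path: Tuple[str, ...]

    def crosses_k8s_network(self) -> bool:
        # True when I/O has to traverse the Kubernetes-controlled network.
        return K8S_NETWORK in self.data_path


# Stage lists paraphrase the article; they are not vendor specifications.
APPROACHES = (
    Approach(
        "external appliance via CSI plug-in",
        ("application container", "external storage"),
    ),
    Approach(
        "container-only storage software",
        ("application container", K8S_NETWORK,
         "storage controller container", "storage device"),
    ),
    Approach(
        "SDS native in Kubernetes",
        ("application container", "kernel", "client device driver",
         "target device driver", "NVMe drive"),
    ),
)

if __name__ == "__main__":
    for approach in APPROACHES:
        print(approach.name, "->", " > ".join(approach.data_path))
        print("  crosses Kubernetes networking:", approach.crosses_k8s_network())
```

Only the container-only approach routes I/O through Kubernetes networking in this model, which is the distinction the comparison above turns on.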

In this approach, clients are completely independent: no cross-client communication is required, and each client communicates directly with targets. This reduces both the number of network hops and the number of communication lines, making the pattern suitable for a large-scale environment, because the number of connections is a small multiple of the domain size.
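To see why a connection count that is "a small multiple of the domain size" matters at scale, here is a minimal arithmetic sketch. It compares that pattern with a full mesh, in which every node would talk to every other node. The full-mesh baseline and the value of four targets per client are assumptions chosen for illustration, not figures from any deployment.

```python
def full_mesh_connections(nodes: int) -> int:
    """Connections if every node talks to every other node: n * (n - 1) / 2."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return nodes * (nodes - 1) // 2


def client_target_connections(clients: int, targets_per_client: int) -> int:
    """Connections if each client talks only to a fixed number of targets."""
    if clients < 0 or targets_per_client < 0:
        raise ValueError("arguments must be non-negative")
    return clients * targets_per_client


if __name__ == "__main__":
    targets_per_client = 4  # hypothetical value, for illustration only
    for nodes in (10, 100, 1000):
        mesh = full_mesh_connections(nodes)
        direct = client_target_connections(nodes, targets_per_client)
        print(f"{nodes:>5} nodes: full mesh {mesh:>7}, client-to-target {direct:>5}")
```

The first count grows quadratically with the number of nodes, while the second grows linearly, so the gap widens as the cluster gets larger.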

Elasticsearch application

Several use cases show the benefit of a software-defined approach that allows the system to run natively in Kubernetes. For example, a major telecom provider in EMEA trialed three storage approaches for an Elasticsearch deployment in a large Kubernetes cluster. An external, iSCSI-based SDS was scalable, but its latency was in the millisecond range, resulting in much worse indexing performance, while a K8s-native storage solution couldn't keep up with the requirements at a scale of hundreds of nodes. Both of these approaches resulted in a significantly worse experience for end users. The third approach, a scalable NVMe-based SDS that used NVMe drives embedded in the Kubernetes nodes and integrated natively with the K8s control and management planes, achieved significantly better performance and latency.

System architecture for NVMe-native shared storage for Kubernetes with bare-metal performance.

CI/CD application

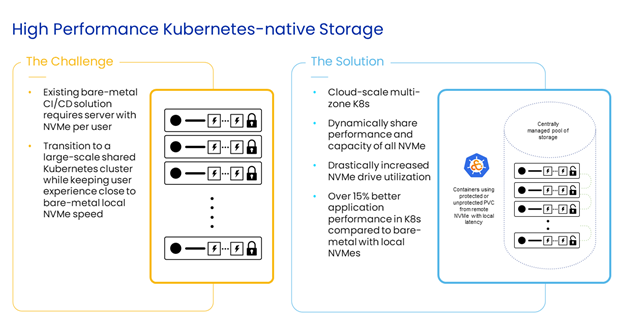

In another example, a tier-one Web firm ran an SDS natively in Kubernetes in a CI/CD application in a data center with tens of thousands of nodes to provide a robust control environment for compilation, building, and local testing. Figure 1 shows how the NVMe-based, client-side and scale-out architecture of the SDS enabled the transition of CI/CD workloads to K8s while preserving bare-metal performance.

When running under Kubernetes, the approach controls deployment of the client and target device drivers with privileged containers. This leaves the data path unaffected by the containerized nature of the Kubernetes environment and moves all the control and management plane components to native container API-based operations. In the tier-one Web firm's production environment, application-level performance is 15% to 20% higher than in the bare-metal case, because the storage software aggregates several remote NVMe drives into a virtual volume presented to the user containers running applications.
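One common way to aggregate several drives into a single virtual volume is striping, where consecutive chunks of the volume are placed on different drives so that a large request is served by several drives at once. The article does not say which layout the SDS uses, so the sketch below is an assumption for illustration only; the function name, the four-drive count, and the eight-block stripe size are all hypothetical.

```python
from typing import NamedTuple


class Location(NamedTuple):
    """Where a logical block of the virtual volume physically lives."""
    drive: int   # index of the backing drive
    offset: int  # block offset within that drive


def locate(logical_block: int, num_drives: int, stripe_blocks: int) -> Location:
    """Map a logical block to (drive, offset) under simple round-robin striping."""
    if logical_block < 0 or num_drives < 1 or stripe_blocks < 1:
        raise ValueError("invalid striping parameters")
    # Which stripe unit the block falls in, and its position inside that unit.
    stripe_index, within = divmod(logical_block, stripe_blocks)
    # Stripe units are dealt out to the drives in round-robin order.
    drive = stripe_index % num_drives
    # Each full pass over all drives advances one row on every drive.
    row = stripe_index // num_drives
    return Location(drive, row * stripe_blocks + within)


if __name__ == "__main__":
    # Hypothetical layout: 4 drives, 8 blocks per stripe unit.
    for block in (0, 7, 8, 31, 32, 35):
        print(block, locate(block, num_drives=4, stripe_blocks=8))
```

Under this layout, a sequential read of 32 blocks touches all four drives, which is one plausible reason an aggregated volume can outperform a single local drive.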

The (data) path to success

Finding the right storage to fit application needs for scalability and performance is not a one-size-fits-all endeavor. When storage architects choose storage for containers by understanding the implications of the data path, they can enable fluidity in the hybrid deployment of containerized applications. Teams then can obtain the scalable, high-performance, agile storage they sought in the first place.

Kirill Shoikhet is CTO of Excelero Storage.
