Data Center Networking

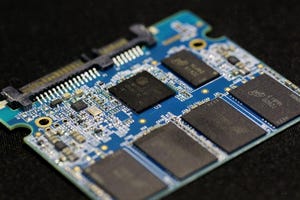

Data center networking is the infrastructure and technologies used to interconnect servers, storage systems, and network devices within a data center facility.

Supercharging the Smart City with AI-Enhanced Edge Computing

Sponsored Content

Integrating AI-enhanced edge computing into smart cities can improve urban management by optimizing resource allocation, enhancing security, promoting sustainability, and fostering citizen engagement, ultimately leading to a higher quality of life for residents.
