Your Network's Next Step: Cisco ACI Or VMware NSX

When it comes to SDN, Cisco's Application Centric Infrastructure and VMware's NSX are often discussed interchangeably, but they are very different. Joe Onisick explains how they compare and how they can even work together.

January 14, 2015

Editor's Note: This article is written by Joe Onisick, an engineer at Cisco who helped develop and works closely with ACI technology. While we recognize that the column may have inherent biases, Joe is known as an authority on this subject, and we feel that he addresses points that are important to our readers. Other subject matter experts interested in contributing technical articles may contact the editors.

With the industry buzzing about software-defined networking (SDN), two products often lead the discussion: Cisco's Application Centric Infrastructure (ACI) and VMware's NSX. Many organizations are in the midst of assessing which solution is right for them and their networks moving forward. In this column, we'll look at some of the factors that go into deciding which product is appropriate, and even when the two should be used together.

To get a baseline of both technologies, we'll start with a quick overview of each offering.

VMware NSX

VMware NSX is a hypervisor networking solution designed to manage, automate, and provide basic Layer 4-7 services to virtual machine traffic. From within the hypervisor, NSX can provide switching, routing, and basic load-balancer and firewall services to data moving between virtual machines. For non-virtual machine traffic (handled by more than 70% of data center servers), NSX requires that the traffic be sent into the virtual environment. While NSX is often classified as an SDN solution, that classification doesn't really hold.

SDN is defined as the ability to manage the forwarding of frames/packets and apply policy; to do so dynamically and at scale; and to be programmable. This means that an SDN solution must be able to forward frames. Because NSX has no hardware switching components, it is not capable of moving frames or packets between hosts, or between virtual machines and other physical resources. In my view, this places VMware NSX in the Network Functions Virtualization (NFV) category: NSX virtualizes switching and routing functions, along with basic load-balancer and firewall functions.

Two versions of NSX exist, depending on a customer's infrastructure requirements. There is the more feature-rich NSX for vSphere, which works only with VMware hypervisors and automation tools, and there is NSX Multi-Hypervisor (NSX-MH), which has limited support for some Linux hypervisors but requires a VMware distribution of Open vSwitch (OVS) that is split off from the open community trunk. According to VMware, NSX-MH is currently being phased out.

NSX's strongest selling point is security isolation within a hypervisor, which falls into the category of "micro-segmentation." NSX can deploy routing and basic load-balancing and firewall services between virtual machines on the same hypervisor. Again, this is NFV functionality, not SDN functionality, which would require the ability to forward packets between devices.
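To make micro-segmentation concrete, here is a minimal sketch of the default-deny, allow-list idea behind it. The segment names, ports, and the `Workload` and `is_allowed` helpers are hypothetical and do not reflect the NSX (or ACI) API. The only point is that the check is applied to every flow, whether or not two VMs share a hypervisor.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Workload:
    name: str
    segment: str  # hypothetical security-group label
    host: str     # hypervisor the VM runs on; the check below ignores it

# Allow-list of (source segment, destination segment, TCP port).
# Any flow not listed here is dropped, even between VMs on the same host.
ALLOWED = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}

def is_allowed(src: Workload, dst: Workload, port: int) -> bool:
    """Default-deny check evaluated for every VM-to-VM flow."""
    return (src.segment, dst.segment, port) in ALLOWED

web = Workload("web-01", "web", host="esx-1")
app = Workload("app-01", "app", host="esx-2")
db = Workload("db-01", "db", host="esx-1")  # same hypervisor as web-01

print(is_allowed(web, app, 8080))  # True
print(is_allowed(app, db, 5432))   # True
print(is_allowed(web, db, 5432))   # False: same host, but not on the allow-list
```

A production distributed firewall would also track connection state and match on addresses and protocols. This sketch checks only segment pairs and a destination port.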

Cisco ACI

Cisco ACI is designed to address two things: the data center's changing hardware requirements, and the agility gained by using software to control the network as a system rather than manually configuring individual devices.

From a hardware perspective, two major changes are driving the need for a network refresh:

The move from 1G to 10G is being driven by current processor capabilities and by the 10G LAN on motherboard (LOM) shipping with new servers. This in turn drives requirements for 40G/100G ports for traffic aggregation and for distributing forwarding across access- or leaf-layer switches.

Changes in data center traffic patterns are driving a shift in network topology design. Modern data centers move the majority of data in an East-West pattern, meaning server-to-server communication. Three-tier network architectures were designed for traditional North-South traffic patterns, which supported legacy application architectures.

These changes require a physical hardware refresh as well as a topology change within the data center, and they cannot be solved with software-only solutions. The need for these changes is reflected even in the best-practice guides for software-only solutions such as VMware NSX, which recommend non-blocking, 10G/40G, two-tier, spine-leaf designs. These are the same designs recommended by network vendors such as Cisco and used by ACI.
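Spine-leaf suits East-West traffic partly because any two servers on different leaves are always the same number of switches apart. The sketch below counts switch hops in an idealized model of each topology. The function names and the (aggregation block, access switch) addressing are illustrative assumptions, and the model ignores details such as routing at the aggregation layer.

```python
def spine_leaf_hops(src_leaf: int, dst_leaf: int) -> int:
    """Switches a frame crosses between two servers in a spine-leaf fabric."""
    # Same leaf: switched locally. Different leaves: leaf -> spine -> leaf.
    return 1 if src_leaf == dst_leaf else 3

def three_tier_hops(src: tuple, dst: tuple) -> int:
    """Switches crossed in an access/aggregation/core design.

    Each location is an (aggregation_block, access_switch) pair.
    """
    if src == dst:
        return 1  # same access switch
    if src[0] == dst[0]:
        return 3  # access -> aggregation -> access
    return 5      # access -> aggregation -> core -> aggregation -> access

print(spine_leaf_hops(0, 7), spine_leaf_hops(2, 5))                      # 3 3
print(three_tier_hops((0, 1), (0, 2)), three_tier_hops((0, 1), (1, 0)))  # 3 5
```

In the spine-leaf model, path length between leaves does not depend on server placement. In the three-tier model, it does.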

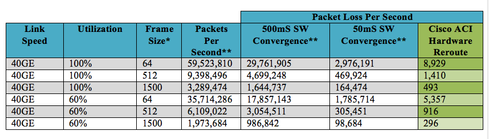

The Cisco ACI approach blends software with hardware so that each capability can be built into whichever one offers the most value. For example, hardware can be built to reroute traffic in 150ns or less in failure scenarios, while software routing reconvergence averages 50ms or more. That is a difference of more than five orders of magnitude (50ms is over 300,000 times longer than 150ns), which matters greatly for packet loss. To better understand this, look at the following examples of packet loss with software and hardware failover options.
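As a rough illustration of that gap, the following sketch estimates worst-case packet loss on a single 10G link during each outage. It assumes minimum-size Ethernet frames at full line rate and assumes that every frame sent during the outage is lost. Real traffic with larger frames loses fewer packets in absolute terms, but the ratio between the two cases stays the same.

```python
# Bits on the wire per minimum-size Ethernet frame:
# 64-byte frame + 8-byte preamble/start delimiter + 12-byte inter-frame gap.
WIRE_BITS_PER_MIN_FRAME = (64 + 8 + 12) * 8  # 672 bits

def packets_lost(link_gbps: float, outage_s: float) -> float:
    """Worst-case minimum-size frames lost on one link during an outage."""
    frames_per_second = link_gbps * 1e9 / WIRE_BITS_PER_MIN_FRAME
    return frames_per_second * outage_s

hardware = packets_lost(10, 150e-9)  # hardware reroute: 150 ns
software = packets_lost(10, 50e-3)   # software reconvergence: 50 ms

print(f"hardware: {hardware:.1f} packets")    # about 2.2
print(f"software: {software:,.0f} packets")   # about 744,048
print(f"ratio: {software / hardware:,.0f}x")  # about 333,333
```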

ACI is a centrally provisioned "zero-touch" fabric, which is deployed and operated via a central controller cluster. ACI uses the language of applications and their business requirements to automatically provision the underlying virtual and physical networking infrastructure, including software and hardware Layer 4-7 appliances. ACI provides a comprehensive networking, security, and compliance automation system for all aspects of application delivery, including forwarding, security, and user experience.

Choosing Cisco ACI or VMware NSX

When assessing NSX, the first step is to understand that a physical network is required to actually move packets between devices, with or without NSX. In addition, modern data centers are only about 70% virtualized from a workload perspective. Legacy applications still run on bare metal, while modern applications are written to run on bare metal without the cost and overhead of a hypervisor. You'll therefore want to ensure you can provide network services and security to all existing workloads without artificially choking traffic through hypervisors or server-based gateway devices.

Because NSX provides no management of, or visibility into, the physical network it relies on, you'll want to ensure that any network chosen for NSX provides native automation for provisioning and network change, as well as advanced visibility and telemetry tools for troubleshooting and day-two operations. These tools should not be limited to simple databases that map virtual tunnels to VLANs. They should offer more comprehensive visibility and automation, including firmware and patch management. Without them, your network becomes more complex, with two separate management and monitoring systems for performance and failure troubleshooting.

You'll next want to look at the hypervisors you're using now or could use in the future. More than half of data centers use multiple hypervisors, so take this into account. When choosing NSX, you must choose between the VMware-only NSX for vSphere and the multi-hypervisor version, VMware NSX-MH. NSX-MH uses a VMware-proprietary distribution of Open vSwitch (OVS) and, at the time of this writing, is being phased out. The two products are not compatible with each other, and their features vary greatly. Finally, consider licensing costs and any future changes to the licensing model.

Then consider the business objective or requirement for NSX. Are you looking for an SDN solution to speed up the deployment of new applications and services, to move to cloud computing models, or to move to agile software deployment models? If so, NSX may not be the right tool, because it focuses only on the VM-to-VM traffic pattern, with no ability to move traffic between VMs on different physical devices.

Security capabilities

If your goal is to tighten security and move beyond legacy perimeter-based defense postures, then NSX may be a solid choice for virtual traffic. This is especially true if you have standardized, or intend to standardize, entirely on the VMware hypervisor. In this case, NSX offers segmentation within the hypervisor by providing basic semi-stateful firewall capabilities in the virtual environment.

Remember that even in this scenario, you'll need a separate set of security tools for physical environments. Even fully VMware-virtualized environments need security for vMotion, hypervisor management, IP storage ports, and NSX management, as well as for the gateway servers and BUM (broadcast, unknown unicast, and multicast) appliances that run as physical servers. You'll also still use perimeter security, which typically consists of physical appliances that do not integrate with NSX.

ACI, however, can tie both physical and virtual environments together and treat them equally from a connectivity, security, user experience, and auditing perspective. ACI's blend of hardware and software components allows you to address the data center network as a whole with a complete solution, rather than stack a house of cards using disparate components, each handling some small portion of total requirements.

Cost considerations

The major consideration when deploying ACI is the strategy for inserting it into existing networks. Greenfield deployments are rare, and the average network contains existing equipment that is still very capable of doing its job and is still under support. Because ACI uses a combination of hardware and software, there is a misconception that it requires a "forklift upgrade" of existing network hardware.

In reality, ACI has a very low entry point from a hardware, software, and cost perspective. The entry configuration typically includes two low-cost, high-performance spine switches (32 ports of line-rate 40G each), which interconnect the leaf switches and provide other ACI functions. It also includes two leaf switches (48 ports of line-rate 1/10G with 12 ports of 40G uplink) for connecting servers, L4-7 services appliances, external networks, WAN, etc. Finally, the Application Policy Infrastructure Controller (APIC) cluster and all required licensing complete the entry point.
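The port counts above also determine how oversubscribed a leaf is and how many leaves the spines can attach. The sketch below works through that arithmetic using the entry-configuration numbers. The helper names are illustrative, and the sketch assumes that every spine port connects to a leaf and that each leaf's uplinks are split evenly across the two spines.

```python
def oversubscription(down_ports: int, down_gbps: float,
                     up_ports: int, up_gbps: float) -> float:
    """Server-facing bandwidth divided by uplink bandwidth on one leaf."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)

def max_leaves(spine_ports: int, links_per_leaf: int) -> int:
    """Leaves one spine can attach if each leaf uses links_per_leaf of its ports."""
    return spine_ports // links_per_leaf

# Leaf: 48 x 10G server ports, 12 x 40G uplinks. Spine: 32 x 40G ports.
print(oversubscription(48, 10, 12, 40))  # 1.0: all 12 uplinks cabled, non-blocking
print(max_leaves(32, links_per_leaf=6))  # 5: 12 uplinks split across 2 spines
print(oversubscription(48, 10, 2, 40))   # 6.0: one 40G link to each spine
print(max_leaves(32, links_per_leaf=1))  # 32
```

The trade-off is clear from the numbers. A fully non-blocking leaf consumes six ports on each spine, while a single link per spine leaves room for many more leaves at a 6:1 ratio.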

This is all packaged as a starter kit bundle priced at about the same as the servers you would need to buy for the control system of a software-only solution such as NSX. For example, current pricing for an ACI starter kit is below $125,000 average street price (ASP). Compare this with the various x86 hosts required to manage, monitor, and provide gateway functions for NSX, along with the required host and feature licensing.

Because ACI is compatible with modern data center networking standards such as 802.1Q, BGP, and OSPF, this entry-level ACI option can be integrated with switching systems from any vendor that supports those standard protocols. Even when integrated with existing environments, ACI provides the same automation, application visibility, security segmentation, and service automation capabilities it would provide in a greenfield deployment.

Better together?

Since ACI and NSX aren't really in competition (one is an SDN solution, the other an NFV solution), might you want both? The answer is yes: in some cases, implementing both may be useful. ACI is arguably the most robust and automated network transport solution, while NSX offers hypervisor-based load balancing and firewalls, especially when paired with third-party VMware partner products for more than basic functionality. Because of that, VMware NSX's suite of NFV tools can complement Cisco ACI's comprehensive SDN architecture.

NSX at its heart is simply another virtualized application running within the hypervisor, while ACI is a network focused on the individual requirements of each application. When the two are used together, VMware NSX is simply segregated as another application within the ACI policy model. ACI then covers the scope of automation, security, auditing, and micro-segmentation that NSX does not handle: bare-metal servers, physical appliances, Linux containers, VMware kernel and vMotion ports, and so on.

In this model, NSX provides micro-segmentation for VM-to-VM traffic, while ACI uses NSX as one piece of a holistic security stance that encompasses today's real-world workload mix. ACI also removes the need for gateway servers by providing gateway, bridging, and routing functions for untagged, VLAN, VXLAN, and NVGRE traffic on any port. This means that the more than 50% of customers using multiple hypervisors can connect heterogeneous virtualization solutions seamlessly, without additional hardware.
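The gateway and bridging idea can be sketched as a classification step at the edge port. Whatever encapsulation a server or hypervisor uses, the fabric maps it into one logical segment. The mapping table, the segment name, and the `Encap` and `classify` helpers below are hypothetical and are not ACI's configuration model. Only the identifier widths come from the respective standards: 802.1Q VLAN IDs are 12 bits, and VXLAN VNIs and NVGRE VSIDs are 24 bits.

```python
from typing import NamedTuple

# Identifier spaces: 802.1Q VLAN IDs are 12 bits (0 and 4095 are reserved);
# VXLAN VNIs and NVGRE VSIDs are 24 bits (other reserved values ignored here).
ID_RANGES = {
    "vlan": range(1, 4095),  # 1..4094
    "vxlan": range(0, 2 ** 24),
    "nvgre": range(0, 2 ** 24),
}

class Encap(NamedTuple):
    port: int       # fabric edge port where the frame arrives
    kind: str       # "untagged", "vlan", "vxlan", or "nvgre"
    ident: int = 0  # VLAN ID, VXLAN VNI, or NVGRE VSID; unused if untagged

# Hypothetical per-port mapping of four different encapsulations into one segment.
SEGMENT_MAP = {
    Encap(1, "untagged"): "web",     # bare-metal server
    Encap(2, "vlan", 100): "web",    # hypervisor using 802.1Q tags
    Encap(3, "vxlan", 5000): "web",  # hypervisor using VXLAN
    Encap(4, "nvgre", 7000): "web",  # hypervisor using NVGRE
}

def classify(e: Encap) -> str:
    """Map an incoming encapsulation to its logical segment."""
    if e.kind != "untagged" and e.ident not in ID_RANGES[e.kind]:
        raise ValueError(f"{e.kind} identifier {e.ident} is out of range")
    return SEGMENT_MAP[e]

print({classify(e) for e in SEGMENT_MAP})  # {'web'}
```

Because the lookup keys on the port as well as the identifier, the same VLAN or VNI number could map to different segments on different ports.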

As with any major technology shift and product decision, it's important to gain a strong understanding of what the products offer and what the business goals are for the shift. It's best not to look at ACI and NSX as competing solutions, because they truly aren't. If your business requires a dynamically provisioned, scalable, and programmable network, ACI is the leading choice. If your business requires hypervisor-level micro-segmentation for VM-to-VM traffic, NSX is a solid choice. If the business requires both, the two can work together to meet those requirements.