Scaling Servers: A Cost Breakdown

Software costs add up quickly in today's virtualized datacenters. Does it make more sense to buy a few big servers or a bunch of little ones? I compare the costs.

December 23, 2014

It’s long been true that the software running on most servers costs more than the hardware. After all, applications such as Exchange and SAP are expensive. As I was figuring out the relative cost of a hyperconverged solution, it became painfully obvious that most of the value was software. Even without the software-defined storage stack, the base software load on a virtualization host in today’s datacenter costs several times as much as the hardware. Since the software is priced per server or per processor, the economics are forcing us toward fewer, bigger servers.

Assuming you, like most in the corporate world, are running Windows virtual machines on top of vSphere Enterprise Edition, your servers will have more than $20,000 worth of software on them long before you even get to the applications. Does running a cluster of 20 $6,000 servers, each with $20,000 in system software, make sense? Let's take a look.

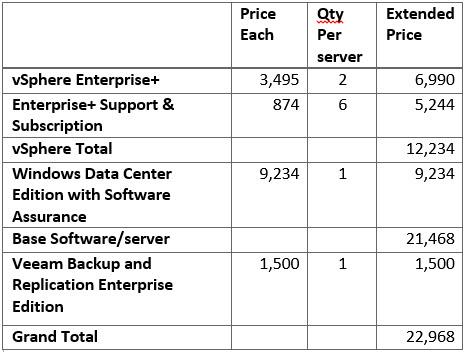

That $20,000 breaks down to:

Figure 1:

As most of the datacenter managers I know would, I included the Datacenter edition of Windows Server, since that covers Windows Server licensing for as many virtual machines as I decide to run on this host. Having learned my lesson from my last pricing analysis, I made sure to include three years of support for vSphere and three years of Software Assurance for Windows.

I added Veeam Backup and Replication for two reasons. First, everyone needs some backup software, and Veeam can stand in as a proxy for NetBackup or Simpana. Second, it shows that even after the hypervisor and Windows are covered, there’s always management software to boost the price.

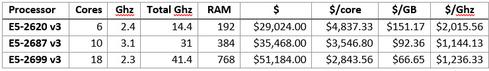

I then went to the Dell site -- not because I’m particularly fond of Dell servers, but because the Dell site makes it easy to price out different configurations -- and priced out an R730 in three configurations:

1. Dual E5-2620 v3 processors and 192 GB of RAM -- basically the same as the EVO:RAIL server configuration, but with the latest generation of Xeons. This configuration priced out at $6,056.

2. Dual E5-2687 v3 processors and 384 GB of memory. This configuration gives the server the maximum number of 3 GHz or faster cores, making it a sweet spot for servers that need speed for individual threads. This configuration priced out at $12,500.

3. The maxed-out server, with dual E5-2699 v3 processors and 768 GB of memory, gives us 36 2.3 GHz cores for running lots of VMs, as a VDI host would. This configuration priced out at $28,216.

Even though all are R730s, the biggest config has roughly four times the compute power and memory of the smallest and costs between four and five times as much. On hardware price alone, then, the decision between a bunch of small servers and a handful of bigger ones could come down to physical considerations, like how much datacenter space they’d take up and how much power they consume.

Add in the software costs and things start looking a bit different:

Figure 2:

The true landed cost of the server that’s four times as powerful is now less than twice the cost of the little guy. Economically, it makes a lot more sense to buy a few big servers rather than a pile of little ones.
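The arithmetic behind that comparison can be sketched in a few lines of Python. The hardware prices are the R730 quotes above; the flat $20,000 software figure is an assumption (the article only says "more than $20,000"), and it is treated as identical for all three configurations because each is a dual-socket host. The names `SOFTWARE_PER_HOST`, `landed_cost`, and `cost_ratio` are made up for this illustration.

```python
# Hardware prices are the Dell R730 quotes from the article.
HARDWARE = {
    "small": 6_056,    # dual E5-2620 v3, 192 GB
    "medium": 12_500,  # dual E5-2687 v3, 384 GB
    "large": 28_216,   # maxed-out configuration
}

# Assumption: a flat, round per-host software figure. The article says
# "more than $20,000", so treat this as a lower bound, not a quote.
SOFTWARE_PER_HOST = 20_000


def landed_cost(hardware_price, software=SOFTWARE_PER_HOST):
    """Hardware plus the per-host system software load."""
    return hardware_price + software


def cost_ratio(config, baseline="small"):
    """Landed cost of `config` relative to the baseline configuration."""
    return landed_cost(HARDWARE[config]) / landed_cost(HARDWARE[baseline])


for name, price in HARDWARE.items():
    hw_ratio = price / HARDWARE["small"]
    print(
        f"{name:>6}: hardware ${price:,}  landed ${landed_cost(price):,}  "
        f"hardware ratio {hw_ratio:.2f}x  landed ratio {cost_ratio(name):.2f}x"
    )
```

With these inputs, the large configuration's hardware ratio is well above four, while its landed-cost ratio stays under two, which is the gap the paragraph above describes.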

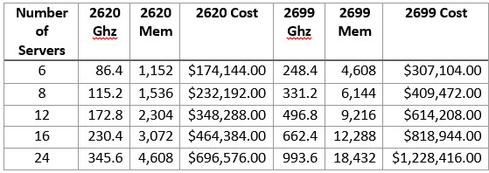

Then I shifted my focus from the clear economics, which dictate a few big servers, to operational considerations. Assuming I have enough workloads to support it, I want to keep my cluster size between six and 24 servers. With at least six servers in a cluster, there are enough resources that having a server go offline, whether for maintenance or because the dreaded purple screen of death appears, won’t cripple the cluster. Limiting the initial size of a cluster to 24 nodes lets me add to the cluster -- up to vSphere’s maximum of 32 nodes -- in the event I underestimated how much compute I need or how much demand grows over time.

The table below shows the compute power and cost of clusters with the small and large server configurations:

Figure 3:
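As a rough cross-check on the cluster comparison, the sizing rules above can be combined with the per-host prices quoted earlier. This is only a sketch under stated assumptions: software is a flat $20,000 per host, the large server counts as exactly four "small-server units" of compute (the article says "roughly four times"), and the helper `size_cluster` is a hypothetical name for this example. It does not reproduce the figure's actual numbers.

```python
import math

SOFTWARE_PER_HOST = 20_000  # assumption: flat per-host software cost

# Compute is expressed in "small-server units"; the 4x figure for the
# large configuration is the article's rough estimate.
CONFIGS = {
    "small": {"hardware": 6_056, "compute": 1},
    "large": {"hardware": 28_216, "compute": 4},
}

MIN_NODES = 6           # enough headroom to lose a host
MAX_INITIAL_NODES = 24  # leaves room to grow toward vSphere's 32-node limit


def size_cluster(config, compute_needed):
    """Return node count, total landed cost, and whether the cluster
    fits under the preferred initial size."""
    spec = CONFIGS[config]
    nodes = max(MIN_NODES, math.ceil(compute_needed / spec["compute"]))
    cost = nodes * (spec["hardware"] + SOFTWARE_PER_HOST)
    return {
        "nodes": nodes,
        "cost": cost,
        "within_limit": nodes <= MAX_INITIAL_NODES,
    }


for name in CONFIGS:
    result = size_cluster(name, compute_needed=24)
    print(f"{name:>5}: {result['nodes']} nodes, ${result['cost']:,}")
```

For a workload of 24 small-server units, this yields 24 small hosts or six large ones, with the large-server cluster coming in at less than half the total cost under these assumptions.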

How do you choose your server configurations? Let us know in the comments section below.