Cloud Networking

Cloud networking is the use of cloud computing technologies and services to optimize and manage network infrastructure and resources.

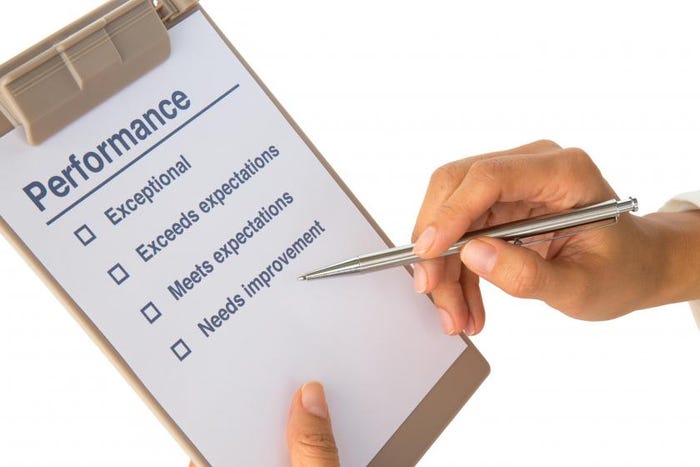

Avoid Buyer's Regret: Top Tips for Assessing Infrastructure Provider Health Before Purchases and Contract Extensions

Avoid remorse, costly setbacks, and do-overs on crucial purchases, such as SASE, SD-WAN, wireless, and cloud services, that can help transform your business.