Reducing Network Overhead with Compute Express Link (CXL)

CXL improves the performance and efficiency of computing and decreases TCO by streamlining and enhancing low-latency networking and memory coherency.

February 3, 2023

Compute Express Link (CXL) is an open interconnect standard for enabling efficient, coherent memory accesses between a host, such as a processor, and a device, such as a hardware accelerator or Smart NIC.

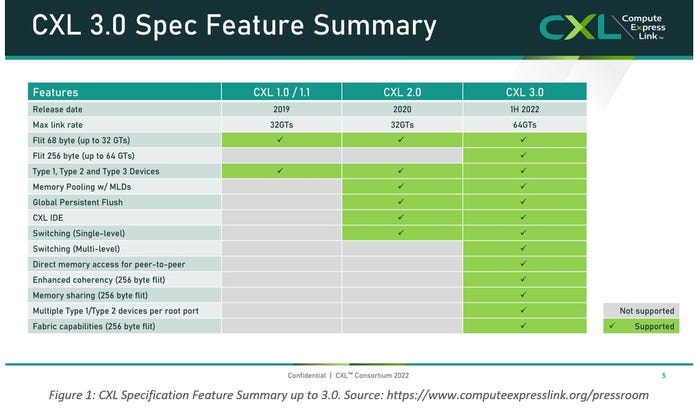

The CXL Consortium, consisting of over 200 leading companies, has released three major versions of the specification. Each version adds the new features and functionality shown in Figure 1. The result is higher computing performance and efficiency at a lower TCO, achieved by streamlining and enhancing low-latency networking and memory coherency.

Figure 1 (cxl specs.jpg): Features and functionality added in each version of the CXL specification.

The CXL standard defines three protocols that are multiplexed over the PCIe 5.0 physical layer:

CXL.io: the foundational communication protocol, functionally equivalent to the PCIe 5.0 protocol.

CXL.cache: enables accelerators to efficiently access and cache host memory for optimized performance.

CXL.mem (also written CXL.memory): enables a host, such as a processor, to access device-attached memory using load/store commands.

Using combinations of the protocols, the CXL Consortium has defined three primary device types:

Type 1 Devices: Accelerators such as Smart NICs typically lack local memory. CXL Smart NICs will use the CXL.io and CXL.cache protocols to access host main memory directly. CXL 3.0 defines peer-to-peer functionality that allows CXL devices to directly access memory in other CXL devices on the Flex Bus without involving the host processor.

Type 2 Devices: GPUs, ASICs, and FPGAs with embedded DDR or HBM memory can use the CXL.io, CXL.cache, and CXL.mem protocols to make the host processor’s memory locally available to the accelerator. Conversely, the accelerator’s memory is directly available to the CPU.

Type 3 Devices: The CXL.io and CXL.mem protocols can be leveraged for memory expansion, pooling, and sharing. These devices expand main memory capacity and add memory bandwidth.
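The mapping between device types and protocols described above can be summarized in a few lines of Python. This is only an illustrative sketch: the names `Protocol`, `DEVICE_TYPES`, `classify`, and `host_can_load_store` are made up for this example and do not come from the CXL specification or any CXL software stack.

```python
from enum import Flag, auto


class Protocol(Flag):
    """The three CXL protocols named in the text (illustrative model only)."""
    IO = auto()     # CXL.io
    CACHE = auto()  # CXL.cache
    MEM = auto()    # the CXL memory protocol


# Protocol combinations per device type, as listed above.
DEVICE_TYPES = {
    "Type 1": Protocol.IO | Protocol.CACHE,
    "Type 2": Protocol.IO | Protocol.CACHE | Protocol.MEM,
    "Type 3": Protocol.IO | Protocol.MEM,
}


def classify(protocols: Protocol) -> str:
    """Return the device type whose protocol set matches exactly."""
    for name, required in DEVICE_TYPES.items():
        if protocols == required:
            return name
    raise ValueError(f"no CXL device type uses {protocols!r}")


def host_can_load_store(device_type: str) -> bool:
    """True if the host can reach device-attached memory with load/store."""
    return bool(DEVICE_TYPES[device_type] & Protocol.MEM)
```

For example, `classify(Protocol.IO | Protocol.MEM)` yields the memory-expander type, and `host_can_load_store("Type 1")` is false because a Type 1 device exposes no device-attached memory to the host.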

Availability of CXL Devices

CPUs supporting CXL 1.1 and 2.0 will be generally available in 2023, with future products supporting newer versions of the CXL specification. CXL hardware vendors have announced CXL 1.1, 2.0, and 3.0 products. One significant benefit of building on PCIe rather than a new custom bus and interconnect is the forward and backward compatibility of devices. For example, a CXL 3.0 device connected to a 1.1-compatible host will work, though functionality will be restricted to the lowest common denominator.
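The "lowest common denominator" rule can be pictured as taking the older of the two versions. The sketch below is a simplified model of that idea, not the actual link negotiation performed by CXL hardware; the function name `negotiated_version` is hypothetical.

```python
def negotiated_version(host: str, device: str) -> str:
    """Return the older of two CXL versions given as 'major.minor' strings.

    Simplified model: the pair operates at the lower of the two versions.
    """
    def as_tuple(version: str) -> tuple:
        # "1.1" -> (1, 1), so versions compare numerically, not as text.
        return tuple(int(part) for part in version.split("."))

    return min(host, device, key=as_tuple)
```

With a 1.1-compatible host and a 3.0 device, `negotiated_version("1.1", "3.0")` selects the host's version, so features introduced after 1.1 would be unavailable on that link.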

A Fully Software-Defined Data Center

Networking and storage have successfully traversed the disaggregation path over many decades. CXL allows memory and accelerators to be disaggregated as well, making a fully software-defined data center possible for the first time. This evolutionary step in heterogeneous architectures solves many problems, including meeting the computational and memory needs of growing data sets driven, in part, by AI/ML, HPC, and in-memory databases.

With memory pools, servers are no longer constrained by locally attached DRAM. Memory expansion over CXL adds bandwidth and capacity for applications and addresses the stranded memory problem that hyperscalers face: over-provisioned DRAM that sits in servers without being rented. Frigid memory is memory that is rented but isn’t used by applications. Google Cloud reports that up to 40% of its memory is stranded; Azure reports that 25% is stranded and 50% of rented memory is frigid. Disaggregation can achieve up to a 10% reduction in overall DRAM, representing hundreds of millions of dollars in cost savings.
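To make the two definitions concrete, here is a small worked example in Python. The host sizes are hypothetical, chosen only so that the arithmetic reproduces the Azure percentages quoted above; the class `HostMemory` and its field names are assumptions for illustration, not part of any vendor's tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostMemory:
    """Memory accounting for one server (all values hypothetical, in GiB)."""
    installed_gib: float  # DRAM physically installed in the server
    rented_gib: float     # DRAM allocated to customer VMs
    touched_gib: float    # rented DRAM that applications actually use

    def __post_init__(self):
        if self.installed_gib <= 0:
            raise ValueError("installed_gib must be positive")
        if not 0 <= self.touched_gib <= self.rented_gib <= self.installed_gib:
            raise ValueError("expected 0 <= touched <= rented <= installed")

    @property
    def stranded_fraction(self) -> float:
        """Share of installed memory that is not rented."""
        return (self.installed_gib - self.rented_gib) / self.installed_gib

    @property
    def frigid_fraction(self) -> float:
        """Share of rented memory that applications never use."""
        if self.rented_gib == 0:
            return 0.0
        return (self.rented_gib - self.touched_gib) / self.rented_gib


# Hypothetical host: 512 GiB installed, 384 GiB rented, 192 GiB in use.
host = HostMemory(installed_gib=512, rented_gib=384, touched_gib=192)
```

On this made-up host, 128 of 512 GiB is stranded (25%) and 192 of 384 rented GiB is frigid (50%). Note that the frigid share is measured against rented memory, not installed memory.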

Emerging Solutions and Data Services

New software components are required to manage the devices and fabric, alongside operating system support for CXL. Software and data services are being developed to close the generational gaps in the CXL specification, ensuring coherency and functionality until processors and platforms with those features reach the market.

Some memory devices in a pool can allow memory to be shared across many hosts, which opens new possibilities for applications to read, modify, and write data in place without moving data or passing messages between nodes over the network.

Dynamic capacity devices allow their capacity to be mapped and used by multiple hosts, reducing TCO and improving infrastructure resource utilization.

Eventually, applications, schedulers, and orchestrators will evolve to request resources on demand during runtime and release them back to the pool when the task(s) are complete. Emerging software solutions will perform these operations and other functions for unmodified applications, allowing transparent use of CXL hardware.
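The request-and-release pattern described above might look something like the following sketch. It models a pool as simple bookkeeping in Python; `MemoryPool`, `PoolExhausted`, and the `lease` helper are hypothetical names for illustration and do not correspond to any real CXL fabric manager or orchestrator API.

```python
from contextlib import contextmanager


class PoolExhausted(RuntimeError):
    """Raised when a request exceeds the pool's free capacity."""


class MemoryPool:
    """Toy bookkeeping model of a shared memory pool (capacities in GiB)."""

    def __init__(self, capacity_gib: int):
        self.capacity_gib = capacity_gib
        self._leases = {}  # host name -> GiB currently held

    @property
    def free_gib(self) -> int:
        return self.capacity_gib - sum(self._leases.values())

    def request(self, host: str, gib: int) -> None:
        """Assign `gib` of pool capacity to `host` at runtime."""
        if gib <= 0:
            raise ValueError("gib must be positive")
        if gib > self.free_gib:
            raise PoolExhausted(f"{gib} GiB requested, {self.free_gib} GiB free")
        self._leases[host] = self._leases.get(host, 0) + gib

    def release(self, host: str, gib: int) -> None:
        """Return `gib` of capacity from `host` back to the pool."""
        held = self._leases.get(host, 0)
        if gib <= 0 or gib > held:
            raise ValueError(f"{host} holds {held} GiB, cannot release {gib}")
        if held == gib:
            del self._leases[host]
        else:
            self._leases[host] = held - gib

    @contextmanager
    def lease(self, host: str, gib: int):
        """Hold capacity for the duration of a task, then give it back."""
        self.request(host, gib)
        try:
            yield gib
        finally:
            self.release(host, gib)
```

A scheduler could wrap a job in `with pool.lease("host-a", 256): ...` so that the capacity returns to the pool when the task completes, even if the task fails.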

Additionally, current and emerging memory-centric solutions include:

Transparent memory tiering allows unmodified applications to benefit from the additional memory capacity and bandwidth available to the host(s) while mitigating the higher latency of the slower tier.

Checkpointing, which normally pauses processing and spends time writing to disk, can instead be performed in memory, at memory speeds, using in-memory snapshots.

Snapshots can provide fast application recovery from crashes or allow applications to run to completion in cloud spot instances, saving up to 80% of cloud expenditure.

Serialized pipelined workloads, such as those in HPC and Bioscience, can be parallelized using snapshots and clones.

Big memory heterogeneous systems will significantly reduce network and disk I/O demand and, most importantly, time to insight.

Devices and fabrics will provide security to meet requirements.

Compression will provide additional TCO value for devices that store colder data for applications that can tolerate higher latency accesses.
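As a rough illustration of the transparent memory tiering item in the list above, the sketch below keeps the most frequently accessed pages in local DRAM and places the rest in a slower tier such as CXL-attached memory. The policy (rank by access count) is an assumption chosen for simplicity, and `place_pages` is a hypothetical name; real tiering software may use very different heuristics.

```python
def place_pages(access_counts: dict, dram_pages: int) -> tuple:
    """Split pages into a fast tier and a slow tier by access frequency.

    access_counts maps page id -> number of recent accesses.
    dram_pages is how many pages fit in the fast (local DRAM) tier.
    Returns (fast_tier, slow_tier) as two sets of page ids.
    """
    if dram_pages < 0:
        raise ValueError("dram_pages must be non-negative")
    # Hottest pages first; ties broken by page id for a stable result.
    ranked = sorted(access_counts, key=lambda page: (-access_counts[page], page))
    fast_tier = set(ranked[:dram_pages])
    slow_tier = set(ranked[dram_pages:])
    return fast_tier, slow_tier


# Hypothetical access counts for four pages, with room for two in DRAM.
counts = {0: 90, 1: 3, 2: 45, 3: 0}
fast, slow = place_pages(counts, dram_pages=2)
```

Here pages 0 and 2 land in the fast tier and pages 1 and 3 in the slow tier. The application never sees the placement decision, which is what makes the tiering transparent.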

Steve Scargall is a Senior Product Manager and Software Architect at MemVerge.
