Complex Cloud Architecture Types

This excerpt from Architecting Cloud Computing Solutions explains the various types of complex cloud architectures, including multi-data center architecture, global load balancing, database applications, and multi-cloud.

September 24, 2018

Don't miss the other sections of this excerpt:

Guide to Cloud Computing Architectures

Hybrid Cloud Architecture Concepts

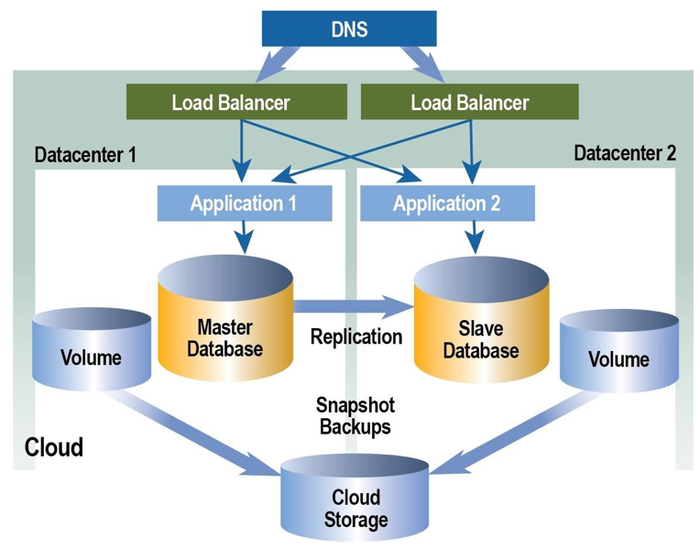

Multi-data center architecture

Redundant single-site designs can handle many of the more common issues that cause downtime within infrastructure layers. But what happens if the entire site becomes unreachable? What happens if a DNS misconfiguration sends traffic in the wrong direction? In either case, the single site is now unreachable. Traditionally, the answer to this challenge has been very expensive. The redundant single-site design nearly doubled the cost of infrastructure. Geographic redundancy requires a second site, which effectively doubles that budget again, so the combined design can approach four times the cost of a single, non-redundant site.

Cloud solutions are dramatically changing the way we design redundancy, resiliency, and disaster recovery. The cloud changes the fundamentals of base designs. For example, we can now design for low-side, base-level traffic flows instead of anticipated high-watermark levels. The cloud enables dramatic reductions in footprint size and in the amount of redundant infrastructure needed in single-site and multiple-site designs. The cloud is also changing how infrastructure is consumed. Some applications traditionally deployed in-house have moved to SaaS offerings, eliminating the need for the associated in-house infrastructure. Smaller single-site footprints also shrink second-site footprints, helping redundancy strategies fit into budgets more easily than traditional deployments.
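The shift from high-watermark to base-level design can be made concrete with a little arithmetic. The sketch below is illustrative only. It assumes a made-up hourly demand profile, a fixed per-server capacity, and an elastic fleet that never drops below a base size, and it compares the server-hours consumed when every hour is provisioned for the peak against an elastic design that adds servers only when load requires them. The function names are hypothetical.

```python
import math


def servers_needed(requests_per_sec: float, capacity_per_server: float) -> int:
    """Smallest number of servers whose combined capacity covers the load."""
    return math.ceil(requests_per_sec / capacity_per_server)


def server_hours_fixed(hourly_load: list[float], capacity_per_server: float) -> int:
    """Traditional design: provision every hour for the high-watermark (peak) load."""
    peak_servers = servers_needed(max(hourly_load), capacity_per_server)
    return peak_servers * len(hourly_load)


def server_hours_elastic(hourly_load: list[float], capacity_per_server: float,
                         base_servers: int) -> int:
    """Cloud design: keep a base-level fleet and add servers only in hours that need them."""
    return sum(max(base_servers, servers_needed(load, capacity_per_server))
               for load in hourly_load)


# Hypothetical six-hour demand profile (requests/second) with one short spike.
profile = [200, 200, 300, 900, 400, 200]
print(server_hours_fixed(profile, 100))                   # peak-sized fleet every hour
print(server_hours_elastic(profile, 100, base_servers=2))  # base fleet plus bursts
```

With this invented profile, peak provisioning consumes 54 server-hours while the elastic design consumes 22; real savings depend entirely on how spiky the actual workload is.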

Planning redundancy across multiple data centers raises new design challenges. How is traffic sent to one location or the other? Is one site active and the other a backup, or are both active? How is failback to the primary site handled once the failure is resolved? What changes to resiliency plans are needed? How is data synchronized before and after failover?
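For the active/backup case, one common way to answer the failover and failback questions is to add hysteresis: fail over only after several consecutive failed health checks, and fail back only after a longer run of healthy checks, so traffic does not flap between sites. The following is a minimal sketch under those assumptions; the class name, thresholds, and site labels are hypothetical, and it deliberately ignores data resynchronization, which a real failback would have to complete first.

```python
from dataclasses import dataclass, field


@dataclass
class SiteFailoverController:
    """Active/backup routing between a primary and a backup site (hypothetical helper)."""
    failover_after: int = 3   # consecutive failed primary checks before failing over
    failback_after: int = 5   # consecutive healthy primary checks before failing back
    active_site: str = "primary"
    _failures: int = field(default=0, init=False, repr=False)
    _successes: int = field(default=0, init=False, repr=False)

    def record_primary_check(self, healthy: bool) -> str:
        """Feed one health-check result for the primary; return the site that should get traffic."""
        if healthy:
            self._failures = 0
            self._successes += 1
            if self.active_site == "backup" and self._successes >= self.failback_after:
                self.active_site = "primary"
        else:
            self._successes = 0
            self._failures += 1
            if self.active_site == "primary" and self._failures >= self.failover_after:
                self.active_site = "backup"
        return self.active_site


controller = SiteFailoverController()
for result in [False, False, False, True, True]:
    print(controller.record_primary_check(result))
```

Making `failback_after` larger than `failover_after` is a deliberate choice: failing over quickly limits downtime, while failing back slowly gives the recovered primary time to prove it is stable.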

Global server load balancing

There are many mechanisms for handling the flow of traffic between multiple sites, and nearly all of them rely on manipulating DNS information. Because DNS answers are cached by resolvers according to their time-to-live (TTL) values, changes can sometimes take hours to propagate across the globe. If production sites must fail over to redundant sites, waiting hours for traffic to flow again is not an option. Traditionally, global server load balancing (GSLB) enabled pre-planned actions to take place in the event of a failure, but it required expensive, publicly accessible devices at each site. Security experts were also needed to keep those devices safe from continuous hacking attempts.
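At its core, a GSLB "pre-planned action" is a rule for which addresses to return in a DNS answer, given the latest health checks. The sketch below assumes a simple priority scheme (lower number preferred) and a short TTL so resolvers re-query soon after a failover; the `Site` type, the 30-second TTL, and the documentation-range IP addresses are all illustrative assumptions, not any product's behavior. Some resolvers and clients may cache answers longer than the TTL, so a short TTL reduces, but does not eliminate, failover delay.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    address: str    # public IP returned in DNS answers (illustrative)
    priority: int   # lower number = preferred site
    healthy: bool   # latest health-check result


def gslb_answer(sites: list[Site], ttl_seconds: int = 30) -> tuple[list[str], int]:
    """Return the addresses for a DNS response and the TTL to attach to it.

    Only healthy sites at the best available priority are returned. If no site
    passes its health check, every site is advertised rather than returning nothing.
    """
    if not sites:
        raise ValueError("at least one site is required")
    candidates = [s for s in sites if s.healthy] or list(sites)
    best = min(s.priority for s in candidates)
    return [s.address for s in candidates if s.priority == best], ttl_seconds


east = Site("east", "192.0.2.10", priority=1, healthy=False)
west = Site("west", "198.51.100.10", priority=2, healthy=True)
print(gslb_answer([east, west]))  # primary is down, so the answer points at the backup
```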

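The functions of expensive, traditional, device-based GSLB deployments are now available as cloud GSLB services, consumed as a managed service for a monthly fee. Providers also offer additional options, including regional deployments and separate availability zones, to help handle geographic diversity and failover. It is up to the consumer to decide the level of redundancy and the speed of failover required. Zone-level redundancy is not the same as regional deployment: availability zones are typically isolated data centers within a single region and protect against the failure of one facility, while regional deployments place resources in geographically separate regions and protect against the loss of an entire region.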
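The difference between zone-level and regional redundancy comes down to which failure domain the replicas span. The sketch below assumes replicas are described as hypothetical `(region, zone)` pairs and checks whether losing any single zone, or any single region, still leaves at least one replica running. The region and zone names are placeholders, not real provider identifiers.

```python
def survives_failure(placements: list[tuple[str, str]], level: str) -> bool:
    """Check whether replicas placed as (region, zone) pairs survive the loss of
    any single zone (level="zone") or any single region (level="region")."""
    if level == "zone":
        domains = {(region, zone) for region, zone in placements}
    elif level == "region":
        domains = {region for region, _ in placements}
    else:
        raise ValueError("level must be 'zone' or 'region'")
    # Losing one failure domain leaves a replica only if replicas span two or more domains.
    return len(domains) >= 2


multi_zone = [("region-1", "zone-a"), ("region-1", "zone-b")]
multi_region = [("region-1", "zone-a"), ("region-2", "zone-a")]
print(survives_failure(multi_zone, "zone"), survives_failure(multi_zone, "region"))
print(survives_failure(multi_region, "zone"), survives_failure(multi_region, "region"))
```

Two zones in one region survive a zone outage but not a regional one; spreading across regions covers both, usually at the cost of higher latency and more complex data replication.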

[Figure: cloud global load balancing]

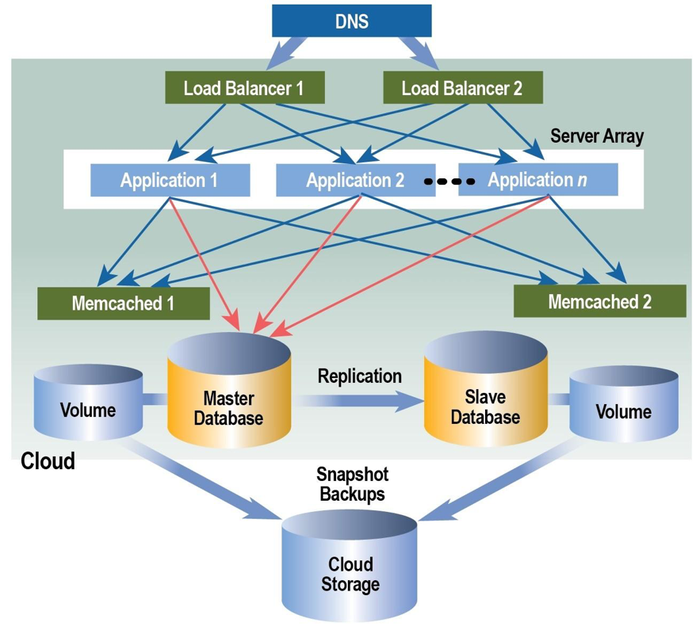

Database resiliency

Primary-secondary (master-slave) database relationships are common, but they face challenges when failures occur in high-transaction, heavy-traffic environments. There, databases handle large volumes of requests, with transactions written and read continuously. Backup processes can be taxing and time-consuming, and restoration and resynchronization can take significant time. Heavy-demand environments can benefit from an active-active database configuration with bidirectional replication, which keeps data synchronized on both database servers. This design adds complexity, but it also adds greater redundancy and resiliency within a single site or across multiple sites, depending on the configuration.
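Part of the added complexity of bidirectional replication is deciding what happens when both servers change the same row. The sketch below swaps in one common, simple strategy, last-writer-wins, with the node ID as a tie-breaker so both servers pick the same winner regardless of the order in which changes arrive. It is an illustration only: the `Version` type and helper names are hypothetical, last-writer-wins silently discards the losing concurrent write, and it depends on reasonably synchronized clocks. Real database products offer their own, often richer, conflict-handling options.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    value: str
    timestamp: float  # write time reported by the originating node
    node_id: str      # tie-breaker so every node chooses the same winner


def resolve(local: Version, remote: Version) -> Version:
    """Last-writer-wins: keep the newer write; break timestamp ties by node ID."""
    return max(local, remote, key=lambda v: (v.timestamp, v.node_id))


def apply_replicated_row(table: dict[str, Version], key: str, incoming: Version) -> None:
    """Apply a row change received from the peer database server."""
    current = table.get(key)
    table[key] = incoming if current is None else resolve(current, incoming)


# Two servers receive the same pair of conflicting writes in opposite orders.
write_a = Version("shipped", 1.0, "node-a")
write_b = Version("cancelled", 1.0, "node-b")
server_a: dict[str, Version] = {}
server_b: dict[str, Version] = {}
for w in (write_a, write_b):
    apply_replicated_row(server_a, "order-42", w)
for w in (write_b, write_a):
    apply_replicated_row(server_b, "order-42", w)
print(server_a == server_b, server_a["order-42"].value)
```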

[Figure: cloud database resiliency]

Caching and databases

Content types also affect architecture. For example, caching techniques can change the load on database servers, the load-balancing design, database server sizing, storage type and speed, how storage is handled and replicated, and network connectivity and bandwidth requirements. Current estimates place 80% to 90% of enterprise data in unstructured categories.
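To see why caching changes database sizing, consider the widely used cache-aside pattern: the application checks a cache first and reads the database only on a miss or after an entry expires. The minimal in-process sketch below assumes a caller-supplied `load_from_db` function and a 60-second expiry; the class name and the read counter are hypothetical, included only to show how many lookups actually reach the database.

```python
import time
from typing import Callable


class CacheAside:
    """Cache-aside wrapper with per-entry expiry (hypothetical helper)."""

    def __init__(self, load_from_db: Callable[[str], str], ttl_seconds: float = 60.0):
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._entries: dict[str, tuple[str, float]] = {}  # key -> (value, expiry time)
        self.db_reads = 0  # how many lookups actually reached the database

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]               # cache hit: no database round trip
        self.db_reads += 1
        value = self._load(key)           # miss or expired entry: read the database
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: str) -> None:
        """Drop a cached entry after the underlying row changes."""
        self._entries.pop(key, None)


cache = CacheAside(lambda key: f"row for {key}")
for _ in range(1000):
    cache.get("product-17")
print(cache.db_reads)  # one database read served a thousand requests
```

In production the cache would usually be a shared service rather than a per-process dictionary, which in turn affects network and bandwidth planning.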

[Figure: cloud database caching]

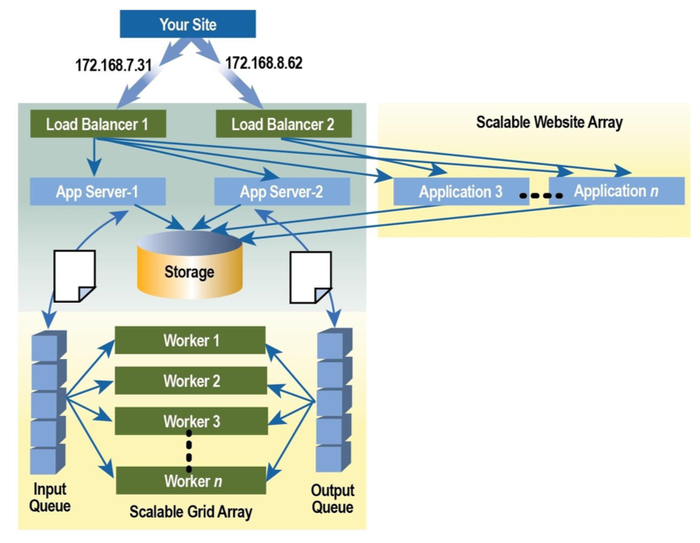

Alert-based and queue-based scalable setup

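Because multiple server arrays can be attached to the same deployment, a dual scalable architecture can be implemented: a front-end array that scales in response to alerts (for example, CPU load thresholds) and a back-end array that scales with the depth of a job queue. Together, they deliver a website whose front-end and back-end server arrays scale independently.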
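The two scaling styles can be sketched as two small sizing rules. The front-end rule reacts to an alert threshold, adding or removing one server at a time; the back-end rule sizes the worker pool from the number of queued jobs. All thresholds, limits, and function names below are hypothetical defaults chosen for illustration, not values from any particular platform.

```python
import math


def alert_based_target(current: int, cpu_percent: float,
                       scale_up_at: float = 75.0, scale_down_at: float = 25.0,
                       minimum: int = 2, maximum: int = 20) -> int:
    """Front-end array: add or remove one server when a CPU alert threshold is crossed."""
    if cpu_percent >= scale_up_at:
        current += 1
    elif cpu_percent <= scale_down_at:
        current -= 1
    return max(minimum, min(maximum, current))


def queue_based_target(queue_depth: int, jobs_per_worker: int = 50,
                       minimum: int = 1, maximum: int = 40) -> int:
    """Back-end array: size the worker pool so the queued jobs can be drained."""
    needed = math.ceil(queue_depth / jobs_per_worker)
    return max(minimum, min(maximum, needed))


print(alert_based_target(4, cpu_percent=90.0))  # a high-CPU alert grows the front end
print(queue_based_target(120))                  # 120 queued jobs at 50 per worker
```

Scaling the tiers on different signals lets a burst of background jobs grow the back end without adding idle web servers, and vice versa.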

[Figure: alert-based and queue-based scalable setup]

Hybrid cloud site architectures

A hybrid cloud site architecture can provide application or site redundancy by using multiple public and private cloud infrastructures or dedicated hosted servers. This requires data and infrastructure portability between the selected service providers, including the ability to launch identically functioning servers into multiple public or private clouds. This architecture can be used to avoid cloud service provider lock-in and to take advantage of multiple cloud resource pools. The hybrid approach applies in both hybrid cloud and hybrid IT situations.

Scalable multi-cloud architecture

A multi-cloud architecture offers the flexibility of hosting an application primarily in a private cloud infrastructure, with the ability to burst into a public cloud for additional capacity when necessary (cloudbursting).
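The cloudbursting decision itself is simple: fill the private cloud first and send only the overflow to the public cloud. The sketch below assumes capacity is counted in whole instances; the function name and the dictionary keys are hypothetical.

```python
def place_instances(required: int, private_capacity: int) -> dict[str, int]:
    """Fill the private cloud first and burst only the overflow into the public cloud."""
    if required < 0 or private_capacity < 0:
        raise ValueError("counts must be non-negative")
    private = min(required, private_capacity)
    return {"private": private, "public": required - private}


print(place_instances(8, private_capacity=10))   # normal load stays private
print(place_instances(14, private_capacity=10))  # a spike bursts 4 instances to public
```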

[Figure: scalable multi-cloud architecture]

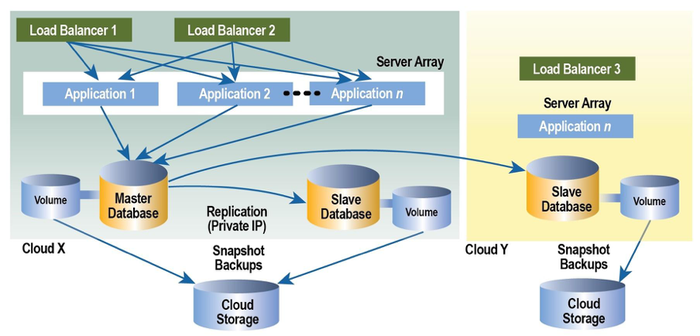

Failover multi-cloud architecture

A second cloud service provider can provide business continuity for a primary cloud provider, as long as the same server templates and scripts can be used to configure and launch resources into either provider. Factors to consider with this option include the use of public versus private IP addresses and each provider's service level agreements. If a problem or failure requires switching clouds, a multi-cloud architecture makes this a relatively easy migration.
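The key requirement here is that one provider-neutral template can be launched into either cloud. The sketch below models that with a small interface: a `ServerTemplate` describes the server once, each provider implements `launch`, and a helper tries providers in order. Everything here is hypothetical: `FakeProvider` stands in for a real provider SDK and only records launches, and no actual cloud API is called.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ServerTemplate:
    """Provider-neutral description of a server (hypothetical fields)."""
    role: str
    cpus: int
    memory_gb: int
    bootstrap_script: str  # the same configuration script runs on every provider


class CloudProvider(Protocol):
    name: str

    def launch(self, template: ServerTemplate) -> str: ...


class FakeProvider:
    """Stand-in for a real provider SDK; records launches instead of calling an API."""

    def __init__(self, name: str, available: bool = True):
        self.name = name
        self.available = available
        self.launched: list[ServerTemplate] = []

    def launch(self, template: ServerTemplate) -> str:
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        self.launched.append(template)
        return f"{self.name}:{template.role}-{len(self.launched)}"


def launch_with_failover(template: ServerTemplate, providers: list[CloudProvider]) -> str:
    """Launch the identical template into the first provider that accepts it."""
    errors = []
    for provider in providers:
        try:
            return provider.launch(template)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


web = ServerTemplate("web", cpus=2, memory_gb=4, bootstrap_script="configure-web.sh")
primary = FakeProvider("primary-cloud", available=False)
secondary = FakeProvider("secondary-cloud")
print(launch_with_failover(web, [primary, secondary]))
```

Keeping provider-specific details behind `launch` is what makes the switch cheap; anything baked into the template that only one provider understands, such as a provider-specific private IP address, would break that portability.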

[Figure: failover multi-cloud architecture]

Data can be sent and received securely between servers on two different cloud service provider platforms by using a VPN that encrypts traffic traveling between their public IP addresses. In this approach, any data transmitted between the cloud infrastructures (except between private clouds) travels over public IP addresses inside the encrypted tunnel. In the following diagram, two different clouds are connected using an encrypted VPN:

[Figure: two clouds connected by an encrypted VPN]

Cloud and dedicated hosting architecture

Hybrid cloud solutions can use public and private cloud resources to supplement servers in internal or external data centers. This approach can help meet requirements on the physical location of data. For example, if the database cannot be moved to a cloud computing platform, other application tiers may not have the same restrictions and can run in the cloud. In these situations, a hybrid architecture can use a virtual private network (VPN) to create an encrypted tunnel over public IP addresses between the cloud and dedicated servers.
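A quick way to reason about such a design is to mark which tiers are restricted to dedicated servers and then list the tier-to-tier links that cross between cloud and dedicated hosting, since those are the links that need the encrypted tunnel. The sketch below assumes a simple three-tier application; the tier names, the restriction flags, and both helper functions are hypothetical.

```python
def place_tiers(tiers: dict[str, bool]) -> dict[str, str]:
    """Map each application tier to a location.

    The flag is True when a tier holds data that must stay on dedicated
    (non-cloud) servers, for example because of data-location requirements.
    """
    return {name: ("dedicated" if restricted else "cloud") for name, restricted in tiers.items()}


def links_needing_vpn(placement: dict[str, str],
                      links: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Links between tiers in different locations cross public IP space and need a VPN."""
    return [(a, b) for a, b in links if placement[a] != placement[b]]


placement = place_tiers({"web": False, "app": False, "database": True})
print(placement)
print(links_needing_vpn(placement, [("web", "app"), ("app", "database")]))
```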

[Figure: cloud and dedicated hosting architecture]

This tutorial is an excerpt from Architecting Cloud Computing Solutions by Kevin L. Jackson and Scott Goessling, published by Packt.
