In the previous two blog posts in this series, I talked about system administrators losing sight of the data in data center architectures and focusing only on compute horsepower. The advent of cloud and big data analytics has changed that, bringing a renewed focus on data.

In this data-centric data center architecture, the compute element is no longer at the center of the server; it becomes a modular peripheral just like any other device. Furthermore, the server itself is no longer the unit of optimization. Instead, the focus shifts to optimizing the rack and even the entire data center.

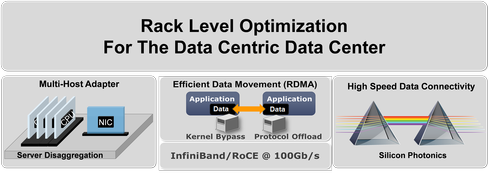

This new data center architecture puts tremendous pressure on the characteristics and capabilities of the network. In fact, the networking element becomes critical, requiring:

1. Disaggregation of server resources
   - Flexible multi-host shared NIC
   - Sharing of CPU, storage, memory, and I/O resources
   - Flexibility to scale each element independently
   - Reduced cost, power, and area

2. Efficient data movement
   - Network virtualization, flow steering, and acceleration of overlay networks
   - RDMA for server and storage data transport

3. High-speed data connectivity
   - Cost-effective server and storage physical connectivity
   - Copper connectivity within the rack
   - Standards-based silicon photonics enabling long-reach data connectivity

Performing data-centric, rack-level optimization results in an architecture that provides four major benefits:

- Flexibility: Mix and match computational GPU and CPU types as needed

- Upgradability: Move to newer compute generations without a forklift server upgrade

- Network efficiency: Sophisticated RDMA transport and the physical network become shared resources

- Capex and opex reductions: Fewer NICs, connectors, and cables, along with lower power consumption, reduce both upfront and operating costs
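To make the capex point concrete, here is a back-of-the-envelope sketch in Python. The rack size of 48 compute nodes and the assumption of exactly one cable and one top-of-rack (ToR) switch port per NIC are hypothetical; the ratio of four hosts per NIC mirrors Facebook's Yosemite platform, which shares one NIC among four CPUs.

```python
import math
from typing import NamedTuple


class RackNetworkCount(NamedTuple):
    nics: int
    cables: int
    tor_ports: int


def count_network_parts(nodes: int, hosts_per_nic: int) -> RackNetworkCount:
    """Count NICs, cables, and ToR switch ports needed for one rack.

    Hypothetical model: each NIC serves up to `hosts_per_nic` compute
    nodes and needs exactly one cable and one ToR switch port.
    """
    if nodes < 0 or hosts_per_nic < 1:
        raise ValueError("nodes must be >= 0 and hosts_per_nic >= 1")
    nics = math.ceil(nodes / hosts_per_nic)
    return RackNetworkCount(nics=nics, cables=nics, tor_ports=nics)


# One dedicated NIC per node versus one multi-host NIC per four nodes
dedicated = count_network_parts(nodes=48, hosts_per_nic=1)
shared = count_network_parts(nodes=48, hosts_per_nic=4)
print(dedicated)  # RackNetworkCount(nics=48, cables=48, tor_ports=48)
print(shared)     # RackNetworkCount(nics=12, cables=12, tor_ports=12)
```

Under these assumptions, sharing each NIC among four hosts cuts the NIC, cable, and switch-port counts to a quarter, which is where the upfront and power savings come from.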

Facebook Yosemite

A good example of a platform that illustrates these concepts is Yosemite, the new multi-host platform Facebook introduced in March at the Open Compute Project (OCP) Summit in San Jose. Its multi-host architecture allows a single network resource to serve multiple processing resources with complete software transparency. Yosemite connects four CPUs to a shared multi-host NIC capable of running 100 Gbps Ethernet over a standard QSFP connector and cables.

Facebook's Yosemite platform includes all the core requirements of a data-centric data center architecture, including a network able to provide data efficiently to the individual processing units. This new rack-level architecture with modular computing elements provides the flexibility to mix and match processor types to align with application requirements.
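One way to picture four CPUs sharing a single 100 Gbps link is a max-min fair split of the link capacity. This is an illustrative model only: the function name and demand figures are hypothetical, and the sketch does not describe how the Yosemite NIC actually arbitrates between hosts, nor does it account for per-host limits such as each host's PCIe connection to the NIC.

```python
from typing import List


def max_min_fair_share(capacity_gbps: float, demands_gbps: List[float]) -> List[float]:
    """Split a shared link among hosts using max-min fairness (water-filling).

    Hypothetical model of a multi-host NIC: each host asks for some
    bandwidth, and no host gets more than it asks for.
    """
    allocation = [0.0] * len(demands_gbps)
    remaining = float(capacity_gbps)
    # Serve the smallest demands first so lightly loaded hosts are fully satisfied
    order = sorted(range(len(demands_gbps)), key=lambda i: demands_gbps[i])
    for served, idx in enumerate(order):
        hosts_left = len(order) - served
        equal_share = remaining / hosts_left
        allocation[idx] = min(float(demands_gbps[idx]), equal_share)
        remaining -= allocation[idx]
    return allocation


LINK_GBPS = 100.0  # shared multi-host NIC link speed
print(max_min_fair_share(LINK_GBPS, [100, 100, 100, 100]))  # [25.0, 25.0, 25.0, 25.0]
print(max_min_fair_share(LINK_GBPS, [10, 40, 40, 40]))      # [10.0, 30.0, 30.0, 30.0]
```

In this model, four busy hosts each get 25 Gbps, while capacity left unused by a lightly loaded host flows to the busier ones rather than sitting idle on a dedicated link.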

New platform architectures such as Yosemite enable the evolution of this new data-centric data center, but they are not enough by themselves. New ways of processing data within nodes and of moving data efficiently between nodes are also required, because the onslaught of data demands changes not only in rack architecture and networking technology, but also in how data is processed.

Instead of a one-size-fits-all processor, the variety of data-processing tasks means that the optimal processor type depends on the task. For advanced machine learning and image recognition tasks, a massively parallel GPU (graphics processing unit) might be ideal. More conventional workloads might call for a high-power x86 or OpenPOWER CPU. For other web and application processing tasks, power- and cost-efficient ARM-based processors may fit the bill.

Finally, very high-speed data connectivity is required: it must be based on industry-standard form factors, leverage the existing installed fiber plant, and span large distances to cost-effectively connect all of the elements within a disaggregated data center. The OpenOptics silicon photonic wavelength division multiplexing (WDM) specification, contributed to the OCP in March, provides the answer: an open, multi-vendor, cost-effective means to connect rack-level components. The OpenOptics specification leverages advanced silicon photonics technology capable of scaling to terabits per second of throughput carried on a single fiber and spanning distances of up to 2 km.
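The "terabits per second on a single fiber" figure follows from simple WDM arithmetic: aggregate throughput is the number of wavelengths multiplied by the data rate per wavelength. The channel count and per-wavelength rate in this sketch are hypothetical values chosen for illustration, not figures taken from the OpenOptics specification; only the 2 km reach comes from the text.

```python
MAX_REACH_KM = 2.0  # reach quoted for OpenOptics in the text


def wdm_fiber_throughput_gbps(wavelengths: int, gbps_per_wavelength: float) -> float:
    """Aggregate throughput of one fiber carrying several WDM wavelengths."""
    if wavelengths < 1 or gbps_per_wavelength <= 0:
        raise ValueError("need at least one wavelength and a positive rate")
    return wavelengths * gbps_per_wavelength


def link_is_in_reach(distance_km: float) -> bool:
    """True if a fiber run fits within the quoted maximum reach."""
    return 0 <= distance_km <= MAX_REACH_KM


# Hypothetical channel plan: 16 wavelengths at 100 Gbps each
total_gbps = wdm_fiber_throughput_gbps(wavelengths=16, gbps_per_wavelength=100.0)
print(f"{total_gbps / 1000:.1f} Tbps")  # 1.6 Tbps
print(link_is_in_reach(1.5))            # True
```

Adding wavelengths raises the throughput of the same installed fiber without pulling new cable, which is why WDM suits an existing fiber plant.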

Adapting to change

The ever-increasing number of users, applications, and devices requires a new data-centric architecture capable of moving and processing vast amounts of data quickly and efficiently. Businesses that don't understand the changes required and continue with a compute-centric approach will find themselves spending more money on compute hardware, yet never able to keep up with the flood of data.

On the other hand, businesses that understand and embrace the efficient networking technologies this new data-centric data center architecture requires will gain a competitive advantage: they will be able to process and analyze more data in real time and convert it into actionable business intelligence. These businesses will thrive at the expense of those who are unable or unwilling to change.
